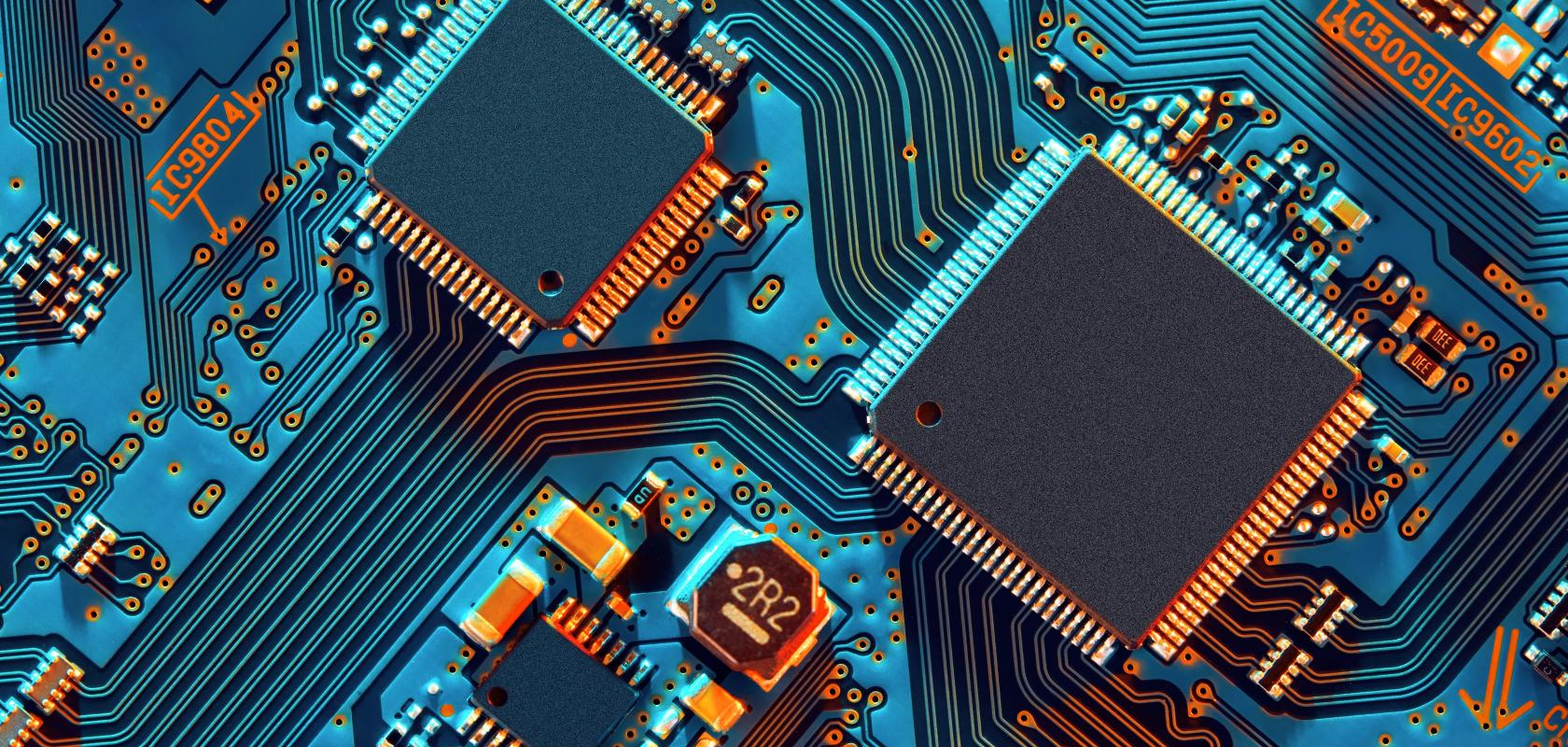

The latest memory and processors for HPC in 2023

A round-up of the latest processing and memory technologies
