With new technologies on the horizon and increasingly competitive products available, the HPC community has more choice in the processor market. This growing diversity provides realistic alternatives for HPC and AI users who want to explore new technologies.

In the processor space, we now have Intel, IBM, AMD and Arm all vying for HPC business. Tachyum also recently announced it had joined the Open Compute Project, targeting datacentre, AI and HPC workloads.

The GPU market is also seeing increased competition, with Intel, AMD and Nvidia all targeting accelerated computing in HPC and AI/ML. A growing number of more specialised processors for AI and ML applications is also available from companies such as Graphcore.

AMD is the most successful of the challengers today and has already demonstrated its competitiveness with the current generation of Intel technologies. AMD’s EPYC processor architecture has already stolen headlines from Intel over the last 12 months. Now that AMD has the second generation of its EPYC silicon - denoted as ‘Rome’ - it is expected to capture market share from Intel.

The Rome chips deliver impressive price-performance, and this is likely to help fuel a surge of systems in both the US and Europe, with some large contracts already announced at the end of 2019. For example, the DOE system Frontier will make use of AMD technologies; likewise, the UK’s Archer2 will be based on EPYC processors.

Arm HPC development

Arm and Fujitsu have spent more than five years developing the A64FX processor, which will be used in the upcoming RIKEN supercomputer. Arm has made improvements to its architecture from an HPC standpoint, the largest change being the Scalable Vector Extension (SVE). The co-development with Fujitsu is now starting to bear fruit, as the RIKEN Post-K computer, named Fugaku and reported to be in the region of 400 petaflops, will demonstrate the viability of this processor at scale.

Toshiyuki Shimizu, senior director for the Platform Development Unit at Fujitsu, comments on the decision to select Arm to develop the processor technology for HPC: ‘The Arm instruction set architecture (ISA) is a well-maintained open standard which is accessible and usable by anyone. It also has a rich ecosystem for embedded, IoT and mobile systems.’

‘The Armv8-A has suitable security capabilities even for mission-critical applications. We, as a lead partner in this collaboration, enhanced the Arm architecture with the ISA extension, known as scalable vector extension (SVE), to optimise Arm for high-performance computing,’ stated Shimizu.

‘Arm developed the ISA extension known as SVE, scalable vector extension, for high-performance servers. Fujitsu worked with Arm as a lead partner to develop it. The SVE has the capability to support 2048-bit SIMD and A64FX uses 512-bit. The SVE is also good for AI applications,’ Shimizu added. It is clear that this extension, while useful for HPC, is designed not only with Fugaku in mind but also for future supercomputer development.
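
To make the vector widths in Shimizu's comment concrete, the short sketch below works through the arithmetic for 64-bit (double-precision) elements. It is a plain Python emulation, not SVE code: the function names `lanes` and `vla_add` are illustrative, and the list slicing merely stands in for the way real SVE hardware masks off the unused part of a final, partial vector. The point it tries to show is that the same loop can run unchanged on a 512-bit or a 2048-bit implementation.

```python
def lanes(vector_bits: int, element_bits: int = 64) -> int:
    """Number of elements that fit in one vector register."""
    if vector_bits % element_bits != 0:
        raise ValueError("vector width must be a multiple of the element width")
    return vector_bits // element_bits


def vla_add(a, b, vector_bits, element_bits=64):
    """Add two equal-length lists one 'vector' at a time.

    Returns the summed list and the number of vector steps taken.
    The loop never hard-codes the width, so it works for any vector size.
    """
    if len(a) != len(b):
        raise ValueError("inputs must have the same length")
    width = lanes(vector_bits, element_bits)
    result, steps = [], 0
    for start in range(0, len(a), width):
        stop = start + width  # slicing past the end covers the partial last chunk
        result.extend(x + y for x, y in zip(a[start:stop], b[start:stop]))
        steps += 1
    return result, steps


data = list(range(20))
sum_512, steps_512 = vla_add(data, data, vector_bits=512)
sum_2048, steps_2048 = vla_add(data, data, vector_bits=2048)
```

With 64-bit elements, a 512-bit vector holds eight values and a 2048-bit vector holds 32, so the 20 additions above take three steps at the A64FX width and a single step at the architectural maximum, with identical results.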

Previous RIKEN systems used a RISC architecture, which shares some history with the Arm processors we see today. As Arm is the closest available technology to those previous RISC-based systems, the Fujitsu and RIKEN teams already have expertise with the architecture.

Shimizu added: ‘The Arm architecture is one of the RISC architectures, and Fujitsu utilised our technologies obtained through our long CPU development history. A64FX, the Fujitsu developed Arm CPU for the Fugaku supercomputer, is based on the microarchitecture of SPARC64 VIIIfx, K computer’s CPU. Memory bandwidth and calculation performance are balanced for application performance compatibility, even though double-precision calculation performance is 20 times greater than the K computer.’

The Fugaku technology co-developed by RIKEN and Fujitsu has already been used in a prototype system that currently sits at the number one position in the latest Green500 list, which measures the energy efficiency of HPC systems. Shimizu commented that the technology underpinning this system includes optimised macros and logic design and the use of SRAM for low power consumption: ‘The silicon technology, which uses a 7nm FinFET and direct water cooling, is also very effective.’

Shimizu also discussed the integration of the interconnect on the chip as being another innovation developed by Fujitsu and RIKEN: ‘High-density integration is not only for the performance but also for power efficiency. High calculation efficiency and performance scalability in the execution of HPL, the benchmark program for TOP500/Green500, also contributed to the result. They are delivered by our optimised mathematics libraries and MPI for the application performance.’

Open Compute

Semiconductor company Tachyum recently announced its participation as a Community Member in the Open Compute Project (OCP), an open consortium aiming to design and enable the delivery of the most efficient server, storage and datacentre hardware for scalable computing.

Tachyum’s Prodigy Universal Processor chips aim to unlock performance, power efficiency and cost advantages to solve the most complex problems in big data analytics, deep learning, mobile and large-scale computing. By joining OCP, Tachyum is able to contribute its expertise to helping redesign existing technology to efficiently support the growing demands of the modern datacentre.

‘Consortiums like OCP are an ideal venue for pursuing common-ground technical guidelines that ensure interoperability and the advancement of IT infrastructure components,’ said Dr Radoslav Danilak, Tachyum founder and CEO. ‘OCP is being adopted not only by hyperscale, but now also by telecommunication companies, HPC customers, companies operating large-scale artificial intelligence (AI) systems, and enterprise companies operating a private cloud.’

The Open Compute Project Foundation (OCP) was initiated in 2011 with a mission to apply the benefits of open source and collaboration to hardware that can rapidly increase the pace of innovation in the datacentre’s networking equipment, general-purpose and GPU servers, storage devices and appliances, and scalable rack designs.

AI-driven designs

As noted in the energy efficiency feature in this magazine, the growth in AI and ML applications is driving new workloads and applications into the HPC space. Whether replacing traditional HPC workflows with AI or enabling new sets of users with non-traditional HPC applications, HPC users are increasingly looking to AI and ML to enhance their computing capabilities.

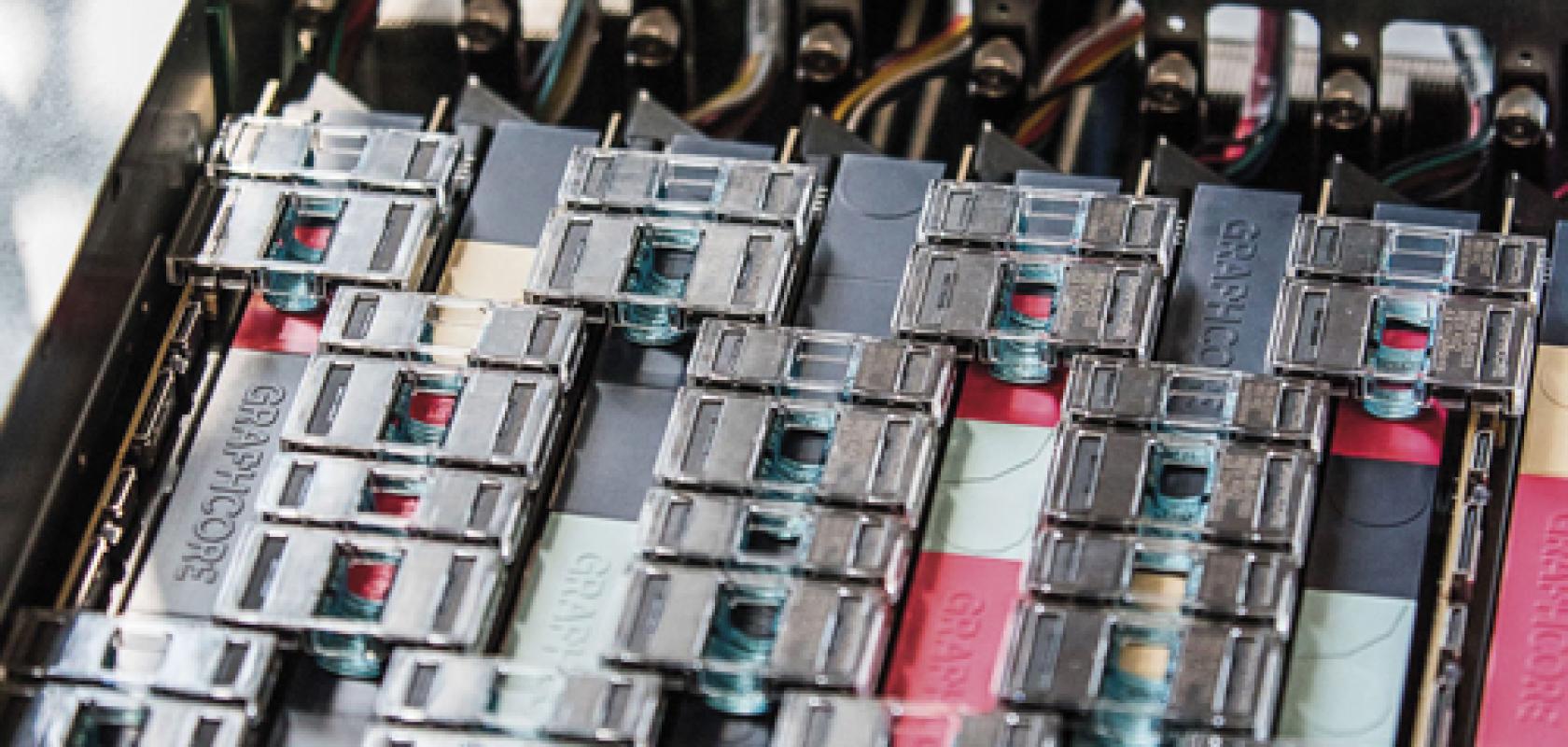

One company previously known in the HPC space for offloading and acceleration of HPC applications, Graphcore, has been developing an AI-focused offload engine for several years and is now at the point of pushing out the technology for real application workloads.

The company has been partnered with Microsoft since 2017. In that time, the Microsoft team, led by Marc Tremblay, distinguished engineer, has been developing systems for Azure and has been enhancing machine vision and natural language processing (NLP) models on the Intelligence Processing Unit (IPU), the Graphcore parallel processor.

Matt Fyles, VP for software at Graphcore, explains the company’s history and the development of the IPU technology that was originally - albeit many years ago - designed around the acceleration of matrix multiplication workloads that are common in HPC: ‘Graphcore started around this premise that we did not believe there was an existing architecture designed for this purpose. We had all the people from the software and hardware side in Bristol that we have a lot of experience in building these processors, so we could start a new company to build a new product around an architecture specifically for machine learning.’

‘In 2006 I worked at a company called ClearSpeed in Bristol that was a parallel processing company. Alongside Sun Microsystems we built the first accelerated supercomputer. It wasn’t using GPUs; at that point we were using a custom processor architecture.’

‘The key point was that interconnect technology such as PCI-Express had become fast enough that you could offload work from a CPU to another processor without it being such a huge problem. There were also workloads that were basically large linear algebra matrix multiplication workloads, which meant that you could just build a processor that was very good at that, it was the cornerstone of a lot of existing applications in HPC,’ Fyles stated.
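
A rough calculation helps show why large matrix multiplications suit this offload model. Multiplying two n-by-n matrices takes about 2n³ floating-point operations but only moves 3n² values (two inputs and one result) across the link, so the work done per byte transferred grows with n. The sketch below is a back-of-envelope model only: the function names are illustrative, and the link bandwidth and processor speeds in the usage lines are made-up round numbers, not figures for PCI-Express or for any real device.

```python
def offload_profile(n, bytes_per_element=8):
    """Return (flops, bytes moved, flops per byte) for an n x n matrix multiply."""
    flops = 2 * n ** 3                            # one multiply and one add per term
    bytes_moved = 3 * n * n * bytes_per_element   # send A and B, receive C
    return flops, bytes_moved, flops / bytes_moved


def offload_times(n, link_bytes_per_s, accel_flops_per_s, host_flops_per_s):
    """Estimate seconds to offload the multiply versus running it on the host.

    Ignores latency and any overlap of transfer with compute.
    """
    flops, bytes_moved, _ = offload_profile(n)
    offload = bytes_moved / link_bytes_per_s + flops / accel_flops_per_s
    host = flops / host_flops_per_s
    return offload, host


# Hypothetical round numbers, chosen only to illustrate the trade-off.
flops, bytes_moved, intensity = offload_profile(1200)
offload_s, host_s = offload_times(
    1200,
    link_bytes_per_s=8e9,      # assumed link bandwidth
    accel_flops_per_s=1e12,    # assumed accelerator throughput
    host_flops_per_s=1e11,     # assumed host CPU throughput
)
```

For double-precision values the ratio works out to n/12 operations per byte, so a 1200-by-1200 multiply does 100 operations for every byte it moves. Under the assumed speeds the offloaded run is several times faster than the host, even after paying for the transfer.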

The development of an offload engine for HPC applications was the genesis of the Graphcore IPU. While technology has evolved a lot since then, it was not until the development of AI and ML on GPUs that the team saw the potential for the Graphcore IPU in these areas. ‘I would say that it did not really move out of HPC and into ML until 2012 when researchers took Nvidia’s CUDA stack and built what would be the first accelerated ML framework. This was done by a research group, not directly by Nvidia,’ said Fyles.

‘That enabled a whole new set of applications around ML. There has been a long transition where this technology was not used for ML but it is still really built around those cornerstones of a very fast interconnect to a host CPU with some form of offload and accelerating matrix multiplication,’ comments Fyles.

‘The technology we are using today is a lot more sophisticated; it is a lot smaller, we can put more compute on a single chip but fundamentally the programming model that we use at the very lowest level is the same as what we began using in 2006, when this whole world of accelerated computing was launched,’ stressed Fyles.

This is a somewhat transitional period for HPC users. There is a changing landscape not only from the perspective of hardware providers but also in the form of changing workloads. The evolving workloads and explosion of AI and ML are driving new hardware and software but also enabling new ways to research and gain insight into science. This growth in users, both traditional HPC users and enterprise customers that need access to scalable AI and ML hardware, offers a time of potentially huge growth for companies such as Graphcore.

If smaller companies can develop specialised processing architectures for AI and get them to market in a timely manner, then they could make huge strides in a short time. However, if Graphcore is to replace GPU infrastructure with its IPU technology, the company must ease the adoption of this new technology. But, as Fyles notes, this is much easier in AI than it would be in HPC due to the nature of the software frameworks.

‘This is one of the things that is slightly easier in the AI domain than it is in the HPC domain because there are a set of standard frameworks or development environments that get used. In HPC you do not really have that because a lot of it is bespoke but in this space, people write applications that are really abstracted from the hardware. If you can plug into the back of those frameworks, you can accelerate the application they are running,’ notes Fyles.

While the system is specifically optimised for AI and ML workloads, Fyles notes that a growing number of HPC workloads are being replaced or augmented by AI. He gave one example of analysing data from the Large Hadron Collider (LHC), where simulation and random number generation are used to predict what is happening.

‘Those type of models are being replaced with ML models that have been trained on the real data, but they have been replaced with this probabilistic ML model. Other applications are focused on weather prediction and that is really an image recognition challenge because they feed the model simulated images that come out of the HPC simulations. They train a ML model on it and use that to infer what will happen with specific inputs.

‘There are lots of parts of the standard simulation pipeline that is getting massive speed-up from using these technologies,’ Fyles concluded.
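
The pattern Fyles describes, in which a costly random-number-driven simulation is replaced by a model trained on its outputs, can be sketched in miniature. Everything below is a toy under stated assumptions: the 'simulation' is a made-up Monte Carlo estimate of a straight-line law, and an ordinary least-squares line stands in for the ML model. It is not how LHC or weather codes work; it only shows the train-once, infer-cheaply shape of the approach.

```python
import random


def simulate(x, rng, samples=2000):
    """Toy Monte Carlo 'simulation': averages many noisy draws.

    The underlying law 2x + 1 is an assumption made for illustration.
    """
    total = 0.0
    for _ in range(samples):
        total += 2.0 * x + 1.0 + rng.gauss(0.0, 1.0)
    return total / samples


def fit_line(xs, ys):
    """Ordinary least-squares fit, standing in for training an ML model."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


rng = random.Random(0)  # fixed seed so the run is repeatable
train_x = [float(i) for i in range(10)]
train_y = [simulate(x, rng) for x in train_x]  # the expensive step, done once
slope, intercept = fit_line(train_x, train_y)


def surrogate(x):
    """Cheap prediction: no random draws needed at inference time."""
    return slope * x + intercept
```

Each call to `simulate` draws 2,000 random numbers, while `surrogate` is a single multiply and add, which is where the speed-up in this toy comes from.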