At the recent GTC keynote, Nvidia CEO Jensen Huang announced several new products including two new chips for server-based computing applications. The keynote announcement detailed the release of the company’s new Grace CPU, based on Arm Neoverse chips and designed for AI and HPC applications alongside a new GPU architecture and the Nvidia H100 GPU.

The Grace CPU is Nvidia’s first Arm Neoverse-based discrete data centre CPU. The Nvidia Grace CPU comprises two CPU chips connected, coherently, over NVLink-C2C, a new high-speed, low-latency, chip-to-chip interconnect.

‘A new type of data centre has emerged — AI factories that process and refine mountains of data to produce intelligence,’ said Jensen Huang, founder and CEO of Nvidia. ‘The Grace CPU Superchip offers the highest performance, memory bandwidth and Nvidia software platforms in one chip and will shine as the CPU of the world’s AI infrastructure.’

The Grace CPU Superchip complements Nvidia’s first CPU-GPU integrated module, the Grace Hopper Superchip, announced last year, which is designed to serve giant-scale HPC and AI applications in conjunction with an Nvidia Hopper architecture-based GPU. Both chips share the same underlying CPU architecture, as well as the NVLink-C2C interconnect.

This new chip improves energy efficiency and memory bandwidth with a new memory subsystem consisting of LPDDR5x memory with Error Correction Code for the best balance of speed and power consumption. The LPDDR5x memory subsystem offers double the bandwidth of traditional DDR5 designs at 1 terabyte per second while consuming dramatically less power with the entire CPU including the memory consuming just 500 watts.

The Grace CPU Superchip is based on the latest Arm data centre architecture, Arm v9. Combining the highest single-threaded core performance with support for Arm’s new generation of vector extensions. The chip will run all of Nvidia’s computing software stacks, including Nvidia RTX, Nvidia HPC, Nvidia AI and Omniverse. The Grace CPU Superchip along with Nvidia ConnectX-7 NICs offer the flexibility to be configured into servers as standalone CPU-only systems or as GPU-accelerated servers with one, two, four or eight Hopper-based GPUs, allowing customers to optimise performance for their specific workloads while maintaining a single software stack.

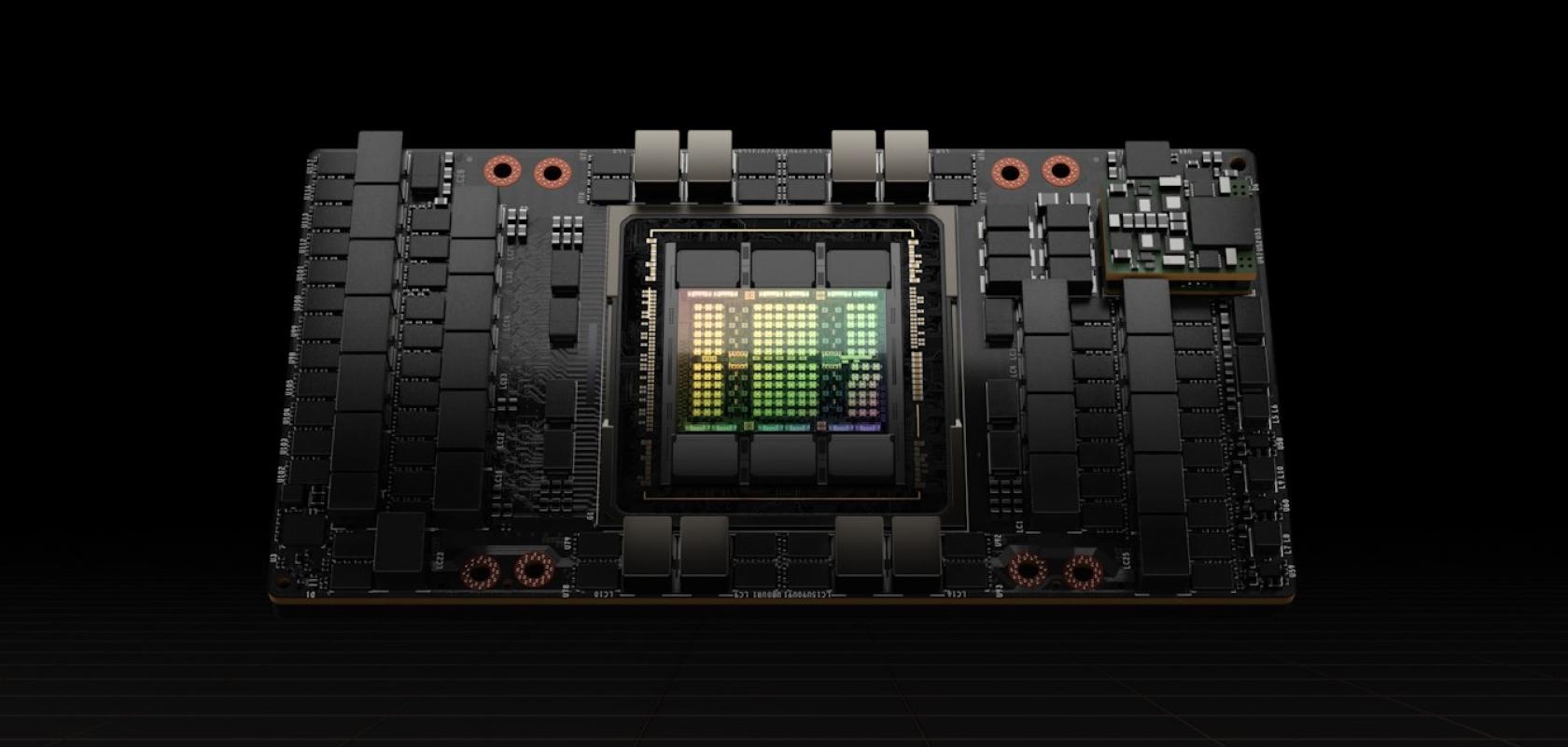

Nvidia H100 GPU

Nvidia also announced its next-generation accelerated computing platform with Nvidia Hopper architecture, delivering an order of magnitude performance leap over its predecessor.

Named after Grace Hopper, a pioneering US computer scientist, the new architecture succeeds the Nvidia Ampere architecture, launched two years ago. The company also announced its first Hopper-based GPU, the Nvidia H100, packed with 80 billion transistors. The H100 includes new features such as a Transformer Engine and a highly scalable Nvidia NVLink interconnect.

"Data centres are becoming AI factories -- processing and refining mountains of data to produce intelligence,’ said Huang. ‘Nvidia H100 is the engine of the world's AI infrastructure that enterprises use to accelerate their AI-driven businesses.’

The H100 features nearly 5 terabytes per second of external connectivity. H100 is the first GPU to support PCIe Gen5 and the first to utilise HBM3, enabling 3TB/s of memory bandwidth. The H100 accelerator's Transformer Engine is built to speed up these networks as much as 6x versus the previous generation without losing accuracy.

H100 will come in SXM and PCIe form factors to support a wide range of server design requirements. A converged accelerator will also be available, pairing an H100 GPU with an Nvidia ConnectX-7 400Gb/s InfiniBand and Ethernet SmartNIC.

Nvidia's H100 SXM will be available in HGX H100 server boards with four- and eight-way configurations for enterprises with applications scaling to multiple GPUs in a server and across multiple servers. HGX H100-based servers deliver the highest application performance for AI training and inference along with data analytics and HPC applications.

The H100 PCIe, with NVLink to connect two GPUs, provides more than 7x the bandwidth of PCIe 5.0, delivering outstanding performance for applications running on mainstream enterprise servers. Its form factor makes it easy to integrate into existing data centre infrastructure.

The H100 CNX, a new converged accelerator, couples an H100 with a ConnectX-7 SmartNIC to provide groundbreaking performance for I/O-intensive applications such as multinode AI training in enterprise data centres and 5G signal processing at the edge.