At the 2018 Nvidia GTC conference, the company announced three new products aimed at the datacentre and AI markets.

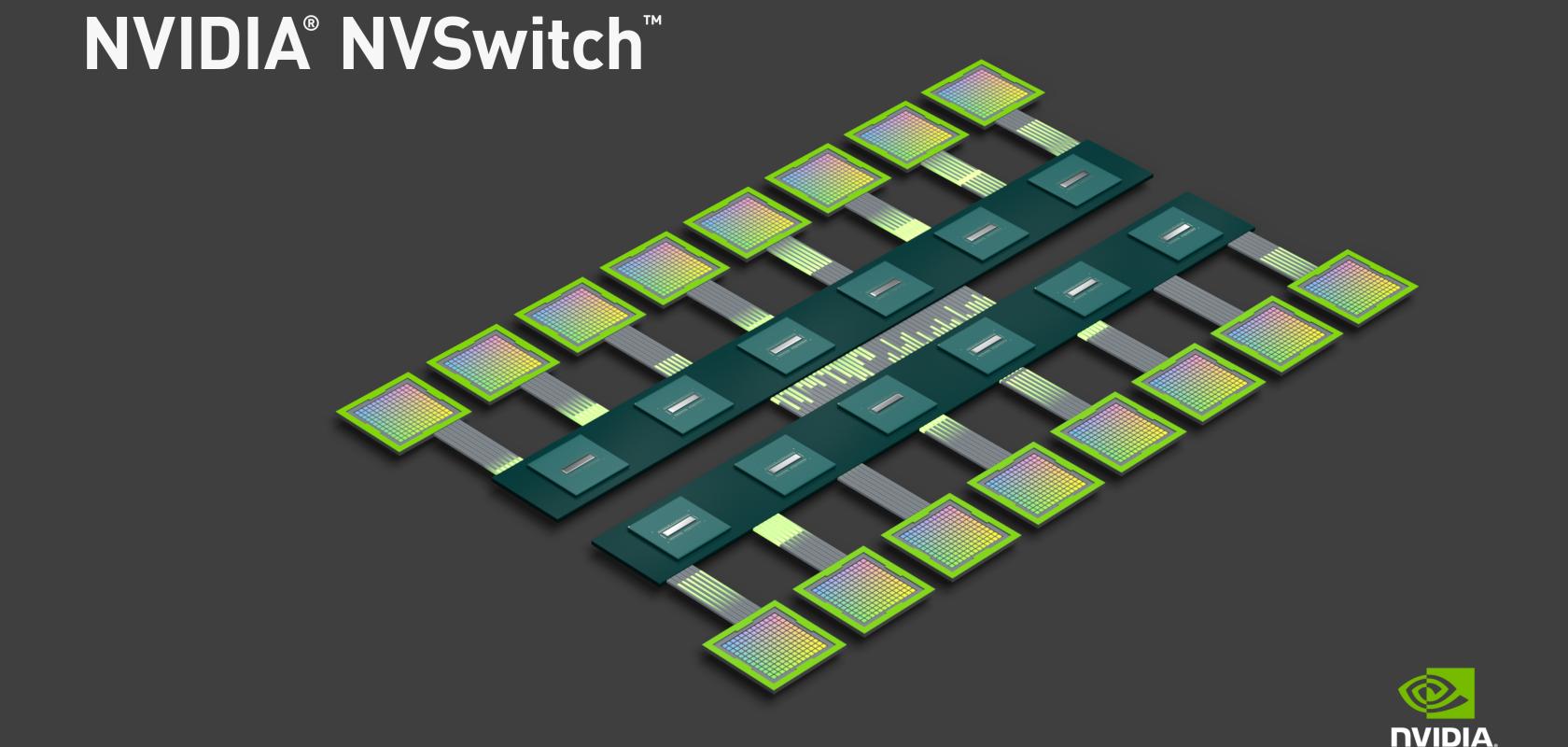

Nvidia announced a 32 GB version of the Nvidia Tesla V100; NVSwitch, a switch that extends NVLink so that up to 16 GPUs can be connected; and the DGX-2, which uses 16 of the new Tesla V100 GPUs.

Nvidia’s GPU Technology Conference (GTC) took place last week in the US with the company launching several new technologies aimed at increasing AI performance.

As in previous years, GTC took place in Silicon Valley, with Jensen Huang, Nvidia’s CEO, delivering a keynote detailing the company’s latest announcements.

There were several notable announcements focusing on graphics, medical imaging, autonomy and self-driving technology, but there were two important pieces of news around AI and datacentre products: a new 32 GB NVIDIA Tesla V100 GPU, and a GPU interconnect fabric called NVIDIA NVSwitch, which enables communication between 16 Tesla V100 GPUs.

Huang noted that there has been huge development in GPU technology over the past five years. Comparing the Tesla K20 with today’s high-end devices, Huang stressed that GPU performance had ‘continued to grow at a faster rate than Moore’s Law’.

Huang said: ‘Clearly the adoption of GPU computing is growing and it’s growing at quite a fast rate. The world needs larger computers because there is so much work to be done in reinventing energy, trying to understand the Earth’s core to predict future disasters, or understanding and simulating weather, or understanding how the HIV virus works.’

In an interview with Scientific Computing World, Ian Buck, vice president of the Tesla Data Center Business at NVIDIA, stated that the AI-centred announcements demonstrate the company’s focus on delivering ‘GPUs with more capabilities and more performance.’

‘AI has been at the forefront of changing the way we interact with devices, and companies are transforming their businesses with new AI services,’ said Buck.

‘Just five years ago we had AlexNet, which was the first neural network to become famous for image recognition. Today’s modern neural networks for image recognition, such as Inception-v4 from Google, are up to 350 times larger than the original AlexNet. It is larger because it is more intelligent, more accurate and it can recognise more objects,’ added Buck.

Nvidia made two key hardware announcements alongside a host of other improvements, such as TensorRT 4 and advancements to its DRIVE system. The key additions to the NVIDIA platform, which Huang stated had been adopted by every major cloud service provider and server maker, were the new GPU and the NVSwitch GPU fabric.

Buck explained that the 32 GB Tesla V100 GPU ‘will deliver the same performance in terms of floating point performance, same number of Tensor Cores, mechanicals and form factor. We simply have more memory now and that memory allows us to train some of those larger and more complicated neural networks.’

‘We will still offer the 16 GB version, but we will now also offer the 32 GB version. Because we kept the form factor identical, it has been very easy for our channel partners to accept this product and add it to their product line, and they will be making their own announcements this week at GTC,’ he added.

‘Today’s high-end AI systems incorporate eight of our Tesla V100 GPUs connected with NVLink through a hybrid cube mesh topology. Can we go higher? Can we add more GPUs to provide our AI community with even more powerful systems for training?’ asked Buck.

‘One of the challenges to that is how do we scale up NVLink? When we first introduced NVLink, it was a point-to-point high-speed interconnect offering 300 GB/s of bandwidth per GPU, but because it was point-to-point, we directly connected all of the GPUs together in what we called a hybrid cube mesh topology.

‘To go further, we need to expand the capabilities of our NVLink fabric. We have invented a new product which we call the NVSwitch. This is a fully switched device for building an NVLink fabric, allowing us to support up to 18 NVLink ports at 50 GB/s per port, giving a grand total of 900 GB/s of bandwidth in this fully connected internal crossbar, which is actually a 2 billion transistor switch.’
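The aggregate figure Buck quotes follows directly from the per-port numbers he gives. A short worked check, assuming the 50 GB/s per-port rate applies equally to all 18 ports:

$$
18\ \text{ports} \times 50\ \text{GB/s per port} = 900\ \text{GB/s}
$$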

The NVSwitch fabric can enable up to 16 Tesla V100 GPUs to communicate at a speed of 2.4 terabytes per second. Huang declared that ‘the world wants a gigantic GPU, not a big one, a gigantic one, not a huge one, a gigantic one.’

Combining these new technologies in a single platform led to Huang’s next announcement, the Nvidia DGX-2. Huang told the crowd at the conference keynote that the DGX-2 is the first single server capable of delivering two petaflops of computational power.

According to Nvidia, the DGX-2 has the deep learning processing power of approximately 300 servers occupying 15 racks of datacentre space, while being 60 times smaller and up to 18 times more power efficient.

The new server packs the power of 16 of what Nvidia describes as the world’s most advanced GPUs to accelerate new types of AI model that were previously unmanageable. The company also claims it enables unprecedented GPU scalability, so users can train bigger models on a single node with as much as 10 times the performance of an 8-GPU system.