As the market for artificial intelligence (AI) matures, it is helping to drive accelerated growth in computing technologies that support highly parallel workloads, AI and machine learning (ML). This new paradigm in computing is opening up the benefits of GPU or accelerated computing to a broader audience – far beyond the traditional users of supercomputing.

The growth in AI and machine learning has been dramatic. In April this year, market research firm IDC predicted that western European revenues for cognitive and AI systems would reach $1.5 billion in 2017.

IDC predicts this rise will continue in the coming years, forecasting a growth rate of 42.5 per cent through 2020, when revenues will exceed $4.3 billion.
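The two IDC figures can be checked against each other with a short calculation. The sketch below assumes the 42.5 per cent figure is a compound annual growth rate applied to the 2017 estimate of $1.5 billion; the article does not state this explicitly, and the function name is purely illustrative.

```python
# Assumption: 42.5 per cent is a compound annual growth rate (not stated
# explicitly in the article), applied to the 2017 estimate of $1.5 billion.
BASE_YEAR = 2017
BASE_REVENUE_BN = 1.5
ANNUAL_GROWTH = 0.425


def project_revenue(target_year: int) -> float:
    """Compound the base-year revenue forward to target_year (in $ billion)."""
    years = target_year - BASE_YEAR
    return BASE_REVENUE_BN * (1 + ANNUAL_GROWTH) ** years


for year in range(BASE_YEAR, 2021):
    print(f"{year}: ${project_revenue(year):.2f} billion")
```

Under that assumption, three years of compounding takes the 2017 figure to roughly $4.34 billion in 2020, which is consistent with the forecast that revenues will exceed $4.3 billion.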

Much of this growth comes from three key industries that were early adopters of AI and cognitive systems – banking, retail, and discrete manufacturing – although the IDC report does note that cross-industry applications have the largest share across all industries.

The report states that by 2020 these industries – including cross-industry applications – will account for almost half of all IT spending on cognitive and artificial intelligence systems.

‘IDC is seeing huge interest in cognitive applications and AI across Europe right now, from different industry sectors, healthcare, and government,’ said Philip Carnelley, research director for Enterprise Software at IDC Europe, and leader of IDC’s European AI Practice.

‘Although only a minority of European organisations have deployed AI solutions today, a large majority are either planning to deploy or evaluating its potential. They are looking at use cases with clear ROI, such as predictive maintenance, fraud prevention, customer service, and sales recommendation,’ Carnelley added.

The report also notes that from a technology perspective, the most significant area of spending in western Europe in 2017 will be cognitive applications at approximately $516 million. This includes ‘cognitively-enabled’ process and industry applications that automatically learn, discover, and make recommendations or predictions.

‘Cognitive Computing is coming, and we expect it to embed itself across all industries. However, early adopters are those tightly regulated industries that need robust decision support: finance, specifically banking and securities investment services, is one of these early adopters,’ said Mike Glennon, associate vice president for customer insights and analysis at IDC.

‘However, the cost savings to be found in automating decision support in a structured environment, together with the enhanced ability to identify previously hidden aspects of behaviour, ensure the distribution and services and public sectors embrace cognitive computing and artificial intelligence systems – where it can offer the dual benefits of lowering cost, and growing new business. We also expect strong growth in adoption in manufacturing in western Europe, at the core of industry across the region,’ Glennon added.

This interest is reflected in the rapidly growing number of applications finding their way into use in both industry and academia. AI applications are being run on everything from appliances and small servers up to the largest supercomputers. This is true even of the leadership-class HPC facilities, which are pursuing research into machine learning and AI applications.

Developing AI technology

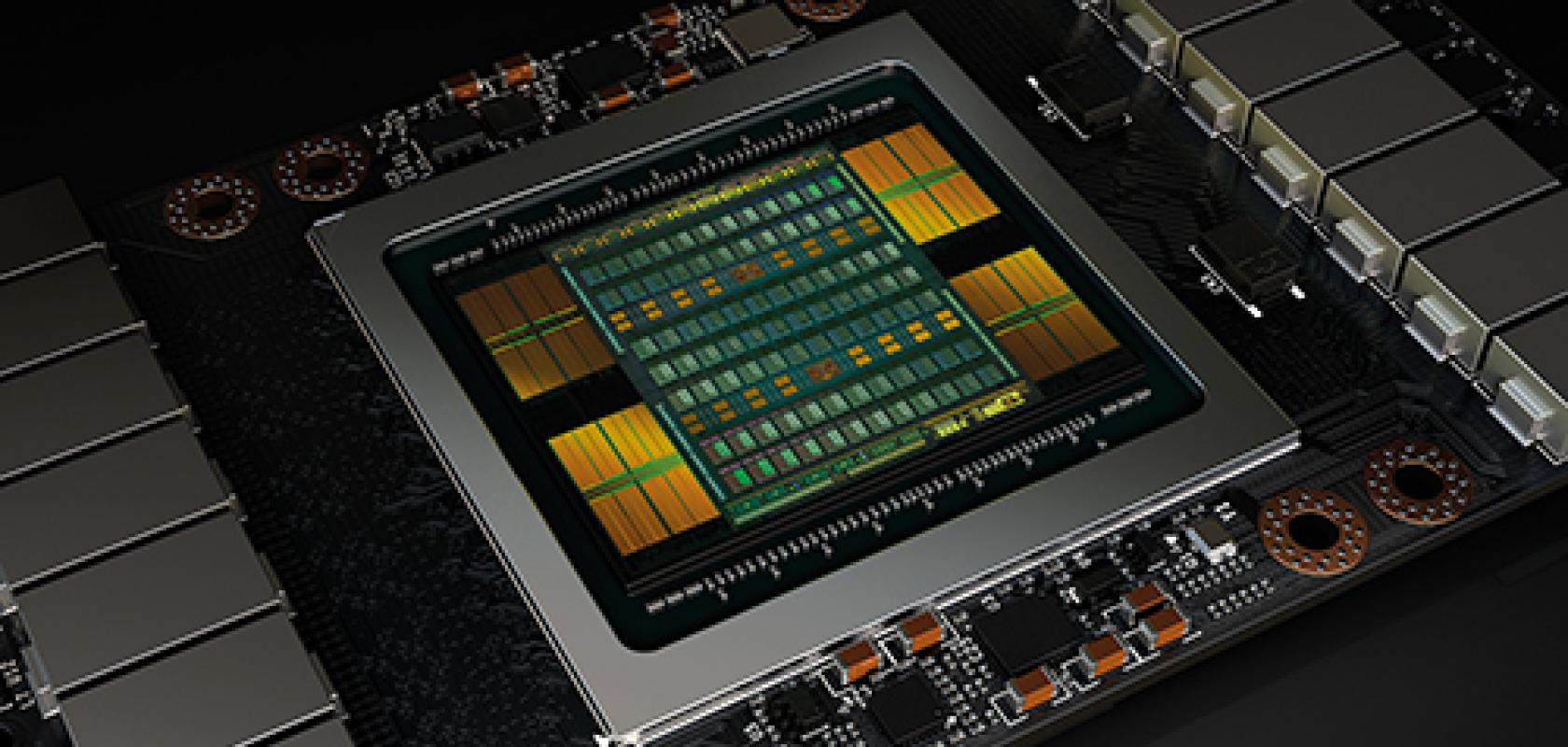

Nvidia and Intel are the primary hardware providers for AI and ML applications, but several other companies have released processing technologies aimed at the same market. The options range from Nvidia GPUs to Intel coprocessors and even FPGA technology.

While deep learning (DL) and ML algorithms have been around for some time, it is the arrival of massively parallel computing technologies such as GPUs that has enabled the explosion in ML/DL applications. The falling price of GPUs and other accelerators, alongside increasing computational performance that is well suited to highly parallel applications, has enabled accelerator technology to flourish in this new market.

This year has seen Intel and Nvidia release new hardware and tools, and make new investments, aimed at capturing the AI market.

Nvidia initially launched its DGX-1 appliance in 2016. The system was updated in 2017 with Volta GPUs to deliver higher performance. The first examples of the Volta-based DGX-1 were delivered to supercomputing sites in September 2017.

But it is not just hardware that is the ground for stiff competition. The development of algorithms and faster application frameworks is another highly competitive area.

At Nvidia’s GTC event in China, held at the end of September 2017, Nvidia CEO, Jensen Huang, delivered a keynote that focused more on software development and application performance than new GPU hardware.

There was one hardware tease of a new processor to aid in developing autonomous vehicles, known as ‘Xavier’, but this will not be unveiled until 2018.

Most of the keynote focused on new software developments, including the Nvidia AI computing platform, TensorRT 3, the AI Cities platform, and Nvidia DRIVE PX.

TensorRT 3 is Nvidia’s AI inferencing software, which has been designed to boost the performance and slash the cost of inferencing from the cloud to edge devices. This new software could be used in applications from self-driving cars to robots.

During the keynote, Huang claimed that TensorRT 3 running on Nvidia’s latest GPUs can process up to 5,700 images a second.

Nvidia’s DRIVE platform has been designed for future autonomous vehicles, and Nvidia has stated that users can adopt some or all of the platform. The system is based on a scalable architecture that is available in a variety of configurations. These range from a single mobile processor, to a multi-chip configuration with two mobile processors and two discrete GPUs, or a combination of multiple DRIVE PX systems used in parallel.

The new Xavier processor will provide an added boost to this platform in 2018. Early access partners will be able to receive platforms containing the Xavier SoC early next year, while general availability is expected at the end of 2018.

‘Our vision is to enable every researcher everywhere to enable AI for the goodness of mankind,’ said Nvidia CEO Jensen Huang. ‘We believe we now have the fundamental pillars in place to invent the next era of artificial intelligence, the era of autonomous machines.’

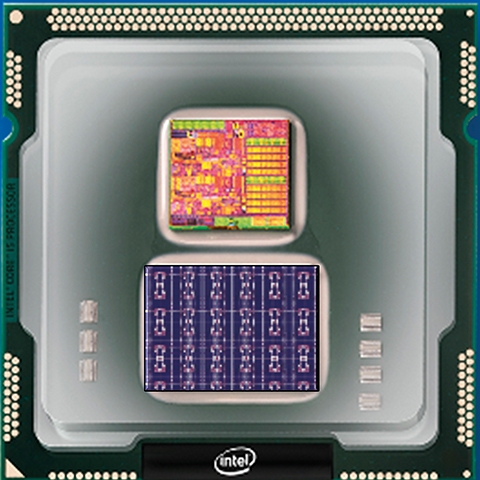

Intel has also launched products aimed at deep learning such as its first neuromorphic, self-learning computer chip codenamed Loihi. Intel released information about the chip as part of a blog post at the end of September authored by Dr Michael Mayberry, corporate vice president and managing director of Intel Labs.

The blog post notes that the work on neuromorphic computing builds on decades of research that started with Caltech professor Carver Mead, who was known for his work in semiconductor design. The combination of chip expertise, physics and biology yielded some revolutionary ideas: comparing machines with the human brain.

Loihi, Intel's first self-learning neuromorphic computer chip

As part of this research Intel developed a self-learning neuromorphic chip – codenamed Loihi – that mimics how the brain functions by learning to operate based on various modes of feedback from the environment. Intel claims that this is an extremely energy-efficient chip, which uses the data to learn and make inferences, gets smarter over time and does not need to be trained in the traditional way. ‘We believe AI is in its infancy and more architectures and methods – like Loihi – will continue emerging that raise the bar for AI.

‘Neuromorphic computing draws inspiration from our current understanding of the brain’s architecture and its associated computations,’ wrote Mayberry.

‘The brain’s neural networks relay information with pulses or spikes, modulate the synaptic strengths or weight of the interconnections based on timing of these spikes, and store these changes locally at the interconnections. Intelligent behaviours emerge from the cooperative and competitive interactions between multiple regions within the brain’s neural networks and its environment,’ Mayberry added.
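The idea of adjusting a connection's strength based on spike timing, and storing that change at the connection itself, can be illustrated with a generic pair-based spike-timing-dependent plasticity (STDP) rule. The sketch below is a textbook-style illustration, not Intel's implementation or Loihi's actual learning rule; the `Synapse` class, its parameter values and the weight bounds are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Synapse:
    """One connection whose weight is stored and updated locally.

    All parameter values are hypothetical, chosen only for illustration.
    """
    weight: float = 0.5
    a_plus: float = 0.05    # size of a strengthening step
    a_minus: float = 0.055  # size of a weakening step
    tau_ms: float = 20.0    # width of the timing window, in milliseconds

    def update(self, t_pre_ms: float, t_post_ms: float) -> float:
        """Adjust the weight from one pre/post spike pair and return it."""
        dt = t_post_ms - t_pre_ms
        if dt > 0:
            # Input spike arrived before the output spike: strengthen.
            self.weight += self.a_plus * math.exp(-dt / self.tau_ms)
        elif dt < 0:
            # Input spike arrived after the output spike: weaken.
            self.weight -= self.a_minus * math.exp(dt / self.tau_ms)
        # Keep the weight within an assumed range of [0, 1].
        self.weight = min(1.0, max(0.0, self.weight))
        return self.weight


causal = Synapse()
anti_causal = Synapse()
for _ in range(10):
    causal.update(t_pre_ms=0.0, t_post_ms=5.0)       # pre fires 5 ms before post
    anti_causal.update(t_pre_ms=5.0, t_post_ms=0.0)  # pre fires 5 ms after post

print(f"pre-before-post weight: {causal.weight:.3f}")
print(f"post-before-pre weight: {anti_causal.weight:.3f}")
```

In this sketch the only state that changes is the weight held by each `Synapse` object, which mirrors the point in the quote that changes are stored locally at the interconnections rather than in a separate training pass.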

The blog post from Intel also notes that ML models such as deep learning have made tremendous recent advancements – particularly in areas such as training neural networks to perform pattern or image recognition. ‘However, unless their training sets have specifically accounted for a particular element, situation or circumstance, these ML systems do not generalise well,’ stated Mayberry.

The blog post noted that the self-learning capabilities prototyped by the new chip have the potential to improve automotive and industrial applications as well as personal robotics. Any application that would benefit from autonomous operation and continuous learning – such as recognising the movement of a car or bike – is a potential application for the Loihi chip.

In the first half of 2018, the Loihi test chip will be shared with leading universities and research institutions with a focus on advancing AI.

In addition to new chips, Intel has also committed $1 billion to AI research in recent months. Brian Matthew Krzanich, chief executive officer of Intel, stated: ‘We are deeply committed to unlocking the promise of AI: conducting research on neuromorphic computing, exploring new architectures and learning paradigms. We have also invested in startups like Mighty AI, Data Robot and Lumiata through our Intel Capital portfolio and have invested more than $1 billion in companies that are helping to advance artificial intelligence.’

‘AI solutions require a wide range of power and performance to meet application needs. To support the sheer breadth of future AI workloads, businesses will need unmatched flexibility and infrastructure optimisation so that both highly specialised and general purpose AI functions can run alongside other critical business workloads,’ Krzanich concluded.