While there are many options available to traditional HPC users, the growing artificial intelligence (AI) market is reliant on highly parallel accelerator technologies that can speed up the training of neural networks used in deep learning (DL) – an implementation of machine learning (ML).

While there is a lot of excitement around the AI market already, it may be some time before this can reach critical mass. Many of these technologies must be tested, sampled and understood before they can begin to see widespread adoption.

One company that has been involved in the development and deployment of GPU-based servers for both the HPC and AI markets is Gigabyte. The company sells a wide variety of server products, from ARM and OpenPOWER systems to more traditional HPC server products such as CPU/GPU-based servers.

This gives Gigabyte a fairly comprehensive view of the markets as it is not reliant on a single product or technology succeeding.

Akira Hoshino, product planning manager for Gigabyte’s Network and Communications Business Unit, explains that the company’s history is much longer than some would expect having spent almost twenty years designing and supplying server products for the HPC market.

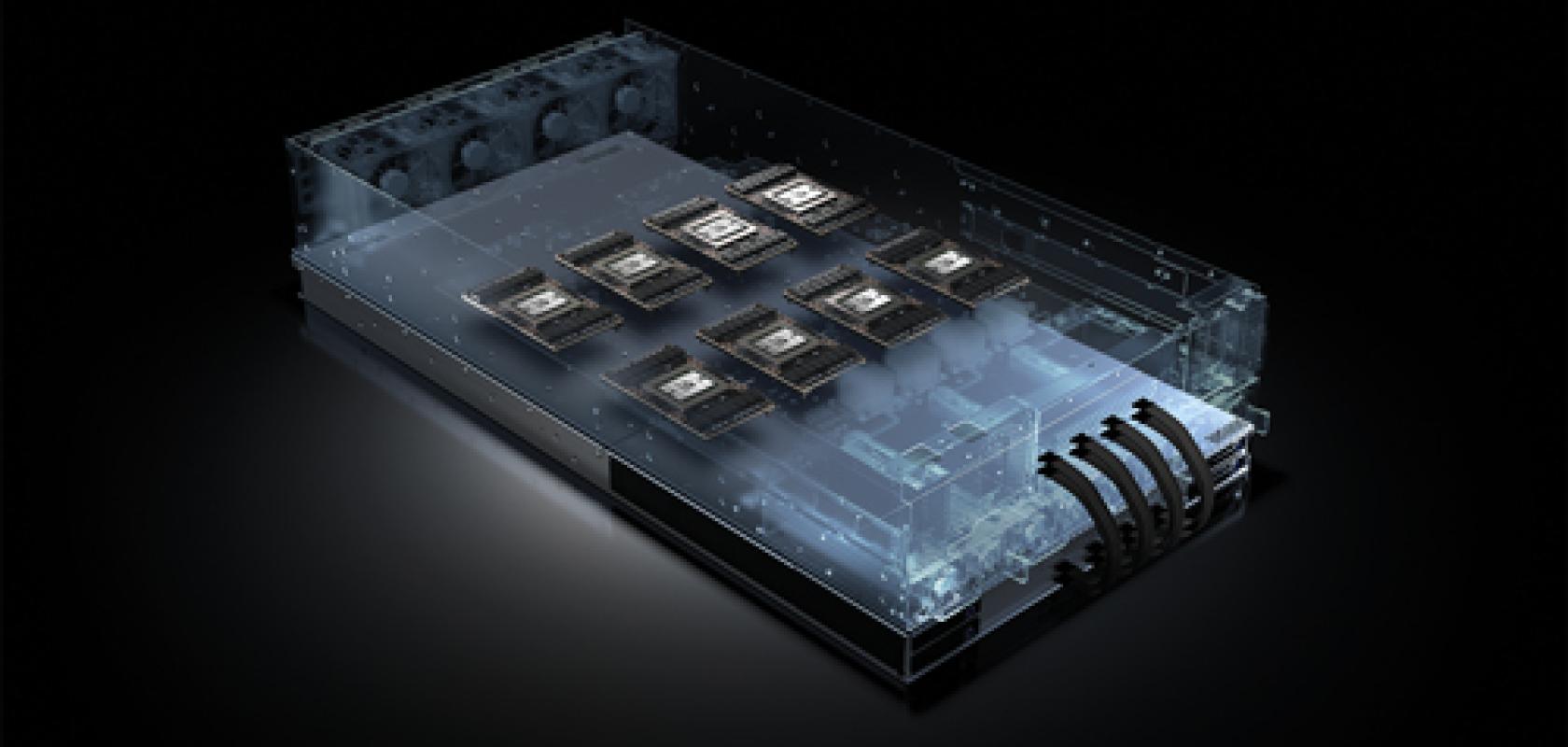

‘We have a long history in developing HPC servers, especially GPU-based servers,’ stated Hoshino. ‘We have a 2U system called the G250. This 2U form factor server system holds eight GPU cards – it is a very high density.’

‘In our competitor’s product line-up we have not found any other servers that have a higher density so we can say confidently that we have the highest density 2U GPU server in the world today,’ said Hoshino.

Adapting to change

Stuart Coyle, marketing manager, Network and Communications Business Unit, at Gigabyte, added: ‘As a business, we have been making servers and server motherboards for around 15-20 years now. We do have a big range of more standard type servers in addition to the GPU servers we are discussing today.’

Hoshino went on to highlight the company’s work in developing high density server products for the HPC market. ‘In the beginning, we focused on channel business. For example, we have experience providing servers to Google which we shipped right at the beginning of Google’s history.’

Hoshino explained that as the business developed over time the company chose to move towards a business more focused on ODM/OEM sales, but around three years ago the company began to move to supply both ODM/OEM and channel business. Now the company ships branded products but also white box solutions that companies can rebrand as their own.

This experience and hardware agnostic approach have helped Gigabyte develop a sense of the HPC market, and now the company believes we are seeing a shift as more and more companies take up emerging applications such as AI.

This interest will help to drive many new companies to purchase HPC hardware so they can explore the use of AI within their own business. While this is likely to take at least another 12 months, Gigabyte saw this change and decided to adapt to meet the requirements of this new AI revolution.

Hoshino commented that while there is a lot of interest in AI technology the sales have not yet caught up to the aspirations of the market. He explained that it would likely be around 12 months before the AI business really takes off.

Hoshino explained that these two markets will be very different based on the different technologies and different platforms such as Intel with its Xeon CPU and Xeon Phi coprocessors.

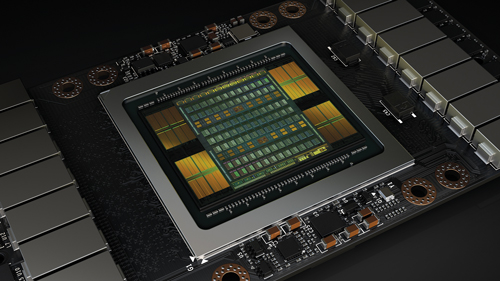

The same can be said for Nvidia which is developing its own AI specific technologies, such as the recently announced Volta V100 GPU which is designed for AI and machine learning, whereas the Tesla K40 and K80 are products focused towards the traditional HPC market.

‘The Traditional HPC market and AI market will become separate entities,’ stated Hoshino. He explained that while they may share the same hardware, the way it is utilised, the libraries and software and also the user community can be very different to the traditional HPC market.

‘More and more big companies put a lot of resources in this [AI] market. Currently, it is just a trend, but so far we have not seen real demand from customers,’ said Hoshino.

Accelerating the AI revolution

Nvidia has been leading the charge for GPU development for a number of years. While there is stiff competition from companies such as Intel, with their Xeon Phi coprocessors, and now also from FPGA developers such as Xilinx, the majority of GPUs used in HPC today come from Nvidia.

2017 is no different and this year at Nvidia’s GPU Technology Conference (GTC) Nvidia unveiled plans for its next big GPU the Volta V100, designed specifically to take advantage of the burgeoning AI and ML markets.

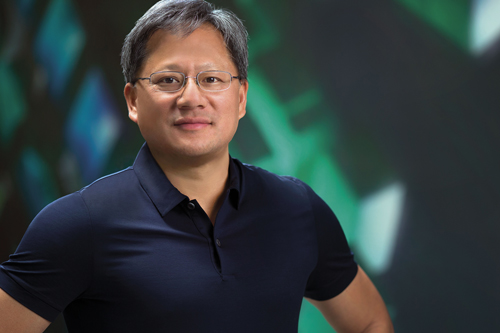

Over the course of the two-hour presentation at the GTC in Silicon Valley, Nvidia CEO Jensen Huang announced details of new Volta-based AI supercomputers including a powerful new version of its DGX-1 deep learning appliance. He also discussed the Nvidia GPU Cloud platform that gives developers access to the latest, optimised deep learning frameworks, and unveiled a partnership with Toyota to help build a new generation of autonomous vehicles.

Jensen Huang, CEO of Nvidia

However, it was the announcement about this new GPU that stole the show. Built from 21 billion transistors, Huang claimed that the Volta V100 delivers deep learning performance equal to 100 CPUs.

Huang went on to explain that this new GPU provides a five times improvement in peak teraflops over the previous architecture Pascal, and 15 times over the Maxwell architecture, launched two years ago.

As Huang explained during his presentation interest in AI is at an all-time high and Nvidia are hoping that it can capitalise on this demand for AI computing hardware

‘Artificial intelligence is driving the greatest technology advances in human history,’ said Huang.

‘It will automate intelligence and spur a wave of social progress unmatched since the industrial revolution. Deep learning, a ground breaking AI approach that creates computer software that learns, has an insatiable demand for processing power. Thousands of Nvidia engineers spent over three years crafting Volta to help meet this need, enabling the industry to realise AI’s life-changing potential,’ he commented.

This interest is reflected in Huang’s claims that investment in AI start-ups has increased to $5 billion in 2016 and the number of AI programs at Udacity, an online university focused on computing and coding, has grown to 20,000 in two years.

Nvidia Tesla V100 data centre GPU

Keeping pace with Moore’s law

While the siren like call of the potential for AI is drawing many new users into this growing market the development of GPUs in HPC is intended to maximise computational performance for more traditional workloads.

This demand for flops is not going away anytime soon and in some people’s opinion will only increase as we reach the limits of Moore’s law – introduced by the hard limits of material science with ever smaller transistor designs.

Huang referred to the stalling of Moore’s law in his presentation at the GTC event. He claimed that single-threaded performance of traditional CPUs is now growing at an incredibly small rate of just 1.1 times per year. By contrast, the GPU performance is still growing by 1.5 times per year.

As Huang sees it, the GPU is the only way of continuing this pace of computational development as the traditional methods of increasing clock speed or larger processors have been exhausted.

‘Some people have described this progress as Moore’s law squared,’ Huang said. ‘That’s the reason for our existence, recognising we have to find a path forward, life after Moore’s law.’

Gambling on the future

Accelerator development does not come without its pitfalls, however. During the GTC keynote Huang mentioned that the latest GPU, the Volta V100, cost more than $3 billion in research and development.

This is a huge price to pay for a failed technology so you can be sure that Nvidia has a lot of confidence that AI and DL will help to accelerate adoption of GPUs even further.

The new architecture from Nvidia includes a number of bells and whistles that help to differentiate it from previous products. The most noteworthy of these are NVLink, Nvidia’s proprietary interconnect system, and HBM2 memory, which delivers as much as a 50 per cent improvement in memory bandwidth compared to the previous DRAM.

While this may seem like a risky venture Nvidia has done an excellent job in previous years of helping to spur adoption of its GPU technologies through education, training and developing a user community that can make the most of the software tools at their disposal.

Nvidia is now working hard to ensure that these steps are re-created with the latest AI software tools and frameworks such CUDA, cuDNN and TensorRT, which aim to help users build and train neural networks using Nvidia GPUs.

Supercomputers turn to Intel for machine learning

While it may seem that Nvidia is the only horse in town when it comes to AI hardware many of the other computing hardware manufactures have their own ideas about the best way to implement AI research.

In the world of ‘traditional’ HPC, many researchers are still looking towards the implementation and use of ML, DL and AI research but some of the supercomputing centres, both in the US and Europe, are choosing to explore the use of Intel hardware.

One example comes from the Texas Advanced Computing Center (TACC). The next HPC system to be installed at TACC, funded through a $30 million grant from the US National Science Foundation (NSF), is expected to reach more than 18 petaflops of performance more than twice that of the previous system.

The Stampede supercomputer housed at the Texas Advanced Computing Center

The new system will be deployed in phases, using a variety of new and upcoming technologies. The processors in the system will include a mix of upcoming Intel Xeon Phi processors, codenamed ‘Knights Landing’, and future-generation Intel Xeon processors, connected by Intel Omni-Path Architecture. The last phase of the system will include integration of the upcoming 3D XPoint non-volatile memory technology.

‘The first Stampede system has been the workhorse of XSEDE, supporting the advanced modelling, simulation, and analysis needs of many thousands of researchers across the country,’ said Omar Ghattas, a computational geoscientist/engineer at UT Austin and recent winner of the Gordon Bell prize for the most outstanding achievement in high performance computing.

‘Stampede has also given us a window into a future in which simulation is but an inner iteration of a “what-if?” outer loop. Stampede 2’s massive performance increase will make routine the principled exploration of parameter space entailed in this outer loop,’ commented Ghattas.

‘This will usher in a new era of HPC-based inference, data assimilation, design, control, uncertainty quantification, and decision-making for large-scale complex models in the natural and social sciences, engineering, technology, medicine, and beyond,’ concluded Ghattas.

The use of Xeon Phi processors may be curious to some that expect the TACC system to focus more on traditional CPU-based architecture, but in this converging world of ML and HPC, scientists and engineers are finding that many of these technologies have applications within their own research interests.

The second version of Stampede at the Texas Advanced Computing Center will use Xeon Phi processors

An example of this comes from David Schnyer, a cognitive neuroscientist, and professor of psychology at The University of Texas at Austin. Schyner is using the Stampede supercomputer at TACC to train a machine learning algorithm that can identify commonalities among hundreds of patients using Magnetic Resonance Imaging (MRI) brain scans, genomics data, and other relevant factors, to provide accurate predictions of risk for those with depression and anxiety.

‘One difficulty with that work is that it’s primarily descriptive. The brain networks may appear to differ between two groups, but it doesn’t tell us about what patterns actually predict which group you will fall into,’ said Schnyer. ‘We’re looking for diagnostic measures that are predictive for outcomes like vulnerability to depression or dementia.’

In March 2017, Schnyer, working with Peter Clasen (University of Washington School of Medicine), Christopher Gonzalez (University of California, San Diego) and Christopher Beevers (UT Austin), published their analysis of a proof-of-concept study in Psychiatry Research: Neuroimaging that used a machine learning approach to classify individuals with major depressive disorder with roughly 75 per cent accuracy.

In the future researchers will be able to use Stampede 2 and its more parallel Xeon Phi processors to train these algorithms much faster than they can today. Another advantage here for the TACC researchers is that the coding they have done previously is not lost when moving from CPU to accelerator as the Xeon Phi uses similar coding principles to traditional CPU architectures.

It remains to be seen which technologies rise to the top in this AI revolution, but one thing is for certain: ML and AI research is a market that contains huge potential to revolutionise not only science and engineering but also the very computing hardware upon which it is being implemented.