NEC, which has HPC divisions in both Japan and Europe, used SC25 to demonstrate its capabilities as a full HPC integration partner.

Oliver Tennert, Director HPC Solutions and Marketing EMEA at NEC Deutschland, says: ‘Some may perceive us as a box shifter, but NEC is a full service HPC solutions integrator. We cover every aspect of an HPC installation, including storage, processors, software installation, deployment and so on.’

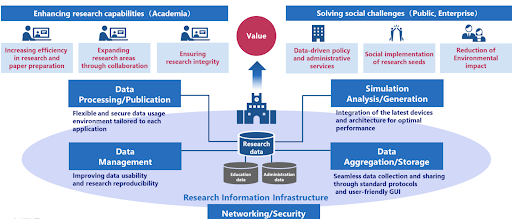

At the show, NEC was demonstrating three products that address issues faced by research institutions. One of them was RII (Research Information Infrastructure). Tennert explains: ‘RII deals with the infrastructure layer of research data management. This is a key issue for research organisations and universities around the world; they’re not just generating scientific data, they also need to manage it. You have to catalogue it, make it searchable and so on. RII takes care of the workflow for this at the IT layer.’

The value provided by NEC’s Research Information Infrastructure (RII). Credit: NEC

NEC has been developing and enhancing RII through its collaborative research with The University of Osaka in Japan. A key component within RII is the Ultra-high-speed Data Transfer System (UDTS), which makes full use of a 100Gbps network via a multi-threaded, multi-session transfer architecture. For research organisations, the platform can dramatically reduce disk-to-disk transfer times for large-scale data (a 1TB file can be transferred in just 1.5 minutes, for example) in a wide range of scenarios, such as data sharing and backup between sites and the international exchange of large observational datasets.
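As a rough sanity check on the quoted figure, assuming decimal units (1TB = 10^12 bytes) and ignoring protocol overhead, moving 1TB in 1.5 minutes works out to an average of roughly 89Gbps, close to the 100Gbps line rate; even a perfect 100Gbps link would need 80 seconds for the same file. The short Python sketch below shows this back-of-envelope arithmetic; the function names are illustrative only and are not part of UDTS.

```python
# Back-of-envelope check of the quoted UDTS figure: 1 TB moved in 1.5 minutes.
# Assumes decimal units (1 TB = 10**12 bytes, 1 Gbps = 10**9 bit/s) and
# ignores protocol overhead. Function names are illustrative, not a UDTS API.

def effective_gbps(size_bytes: float, seconds: float) -> float:
    """Average throughput in Gbit/s for a transfer of size_bytes taking seconds."""
    return size_bytes * 8 / seconds / 1e9


def ideal_seconds(size_bytes: float, link_gbps: float) -> float:
    """Time to move size_bytes at full line rate on a link of link_gbps."""
    return size_bytes * 8 / (link_gbps * 1e9)


TB = 10**12
achieved = effective_gbps(TB, 1.5 * 60)  # average rate implied by 1.5 minutes
floor = ideal_seconds(TB, 100)           # best case on a 100 Gbit/s link

print(f"effective rate: {achieved:.1f} Gbit/s")
print(f"line-rate floor: {floor:.0f} s ({floor / 60:.2f} min)")
print(f"link utilisation: {achieved / 100:.0%}")
```

Run as-is, this reports an effective rate of about 88.9Gbit/s, a line-rate floor of 80 seconds and a link utilisation of about 89%, which is consistent with a transfer system that keeps a 100Gbps link close to saturated.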

Another key component of RII is the Data Provenance System for HPC (DPS4HPC) which, as the name suggests, generates comprehensive provenance records covering inputs, outputs and processes for each job executed on the HPC system. Within a research environment, this facilitates the reuse and validation of data, promoting open science and collaborative research.
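To illustrate the general idea of per-job provenance, here is a minimal, hypothetical sketch in Python. It is not DPS4HPC's actual record format or interface, which the article does not describe: the `ProvenanceRecord` class, its fields, the `shares_lineage` helper and the example job commands are all assumptions for illustration. Each record captures a job's command plus content hashes of its inputs and outputs, so a later job that consumes an earlier job's output can be linked back to it.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


def sha256_of(data: bytes) -> str:
    """Content hash used to identify a file's exact contents."""
    return hashlib.sha256(data).hexdigest()


@dataclass
class ProvenanceRecord:
    """Hypothetical per-job record: the process plus hashed inputs and outputs."""
    job_id: str
    command: str
    inputs: dict[str, str] = field(default_factory=dict)   # path -> sha256
    outputs: dict[str, str] = field(default_factory=dict)  # path -> sha256

    def to_json(self) -> str:
        """Serialise deterministically so records can be stored and compared."""
        return json.dumps(asdict(self), sort_keys=True)


def shares_lineage(upstream: ProvenanceRecord, downstream: ProvenanceRecord) -> bool:
    """True if downstream consumed content (same hash) that upstream produced."""
    return bool(set(upstream.outputs.values()) & set(downstream.inputs.values()))


# Two hypothetical jobs: a meshing step whose output feeds a solver run.
mesh = b"mesh v1"
a = ProvenanceRecord(
    job_id="job-101",
    command="mesher --res 1km",
    inputs={"config.yaml": sha256_of(b"res: 1km")},
    outputs={"mesh.dat": sha256_of(mesh)},
)
b = ProvenanceRecord(
    job_id="job-102",
    command="solver mesh.dat",
    inputs={"mesh.dat": sha256_of(mesh)},
    outputs={"result.h5": sha256_of(b"solver output")},
)

print(shares_lineage(a, b))  # True: job-102 used job-101's exact output
print(a.to_json())
```

Hashing contents rather than relying on file paths is one common design choice here: if a file is later overwritten, its hash changes, so a record can show whether a result was produced from the same data a colleague is now trying to reuse or validate.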

Separately, NEC was using SC25 to showcase its collaboration with Openchip & Software Technologies (Openchip) to develop a next-generation vector processor for HPC, AI and ML, based on the open RISC-V architecture with a vector extension and an AI matrix unit added. Vector processing has been around for 40 years or more, but to draw on Openchip’s expertise in RISC-V, NEC has signed a collaboration agreement that will see the two companies co-develop the hardware and the associated compilers and libraries. When the processor is released, Openchip will provide the accelerator, which NEC will then integrate into a customer-ready system.

This next generation of vector processor will offer enhanced performance for critical workloads, providing new levels of computational power for HPC, AI and ML, as well as scientific applications such as genomics, climate modelling and advanced simulations.

Cesc Guim, CEO of Openchip, says: ‘The new accelerator will combine the long-standing benefits of vector processing with the high precision required for scientific computing. The convergence of HPC workloads and AI in scientific research is happening now and this will help enable that in the future.’

The final area of focus at the NEC booth was ExpEther, a new technology that distributes the individual devices that make up a computer across a standard Ethernet network. ExpEther makes it possible to combine these connected devices into a single logical computer.

With a composable disaggregated infrastructure (CDI), users no longer need to house all their resources, such as GPUs, CPUs and PCIe switches, in the same rack. Keeping all of these resources in one rack makes thermal management, power management and device maintenance complex.

With ExpEther, these resources can be separated by type into multiple racks, which optimises heat and power management and allows maintenance to be carried out by device type. These separate racks can be in a different room, on a different floor or even in another building; the system allows them to be up to 2km apart.

Such an arrangement benefits researchers because resources can be allocated dynamically wherever the user is located, for example anywhere on a campus. ExpEther uses standard PCIe components and standard Ethernet. Apart from the ExpEther card in each machine, no special hardware is required.

NEC recently began a proof of concept of ExpEther in partnership with The University of Osaka’s D3 Center. In this demonstration, a GPU (NVIDIA H100 NVL) installed as a resource pool in the D3 Center IT Core Building on the university’s Suita Campus is connected to a compute server (Express5800/R120j-2M) located in the main building, using ExpEther over a 100Gbps Ethernet optical fibre link. In this environment, operational checks and performance verification are being conducted, focusing on AI applications that use GPUs. NEC says it has already completed one round of performance verification and has begun the next phase of the demonstration, which assumes real-world operating conditions.

For more information, visit https://www.nec.com/en/global/solutions/hpc/index.html