As the size and complexity of data sets grow, storage technology is playing an increasingly important role in accelerating scientific research. Growing data requirements are pushing users towards large-scale computing systems and driving demand for larger, faster storage.

Scientists and researchers increasingly rely on storage to further their research – whether the focus is traditional high-performance computing (HPC) workloads, artificial intelligence (AI) and deep learning (DL), or just to process and store huge volumes of sequencing data.

Seagate is taking part in an EU-funded research project to develop a new storage architecture better suited to scientists working on extreme-scale HPC, AI and DL workloads or big data analysis. The innovatively named ‘Percipient Storage for Exascale Data Centric Computing 2’ (Sage 2) project follows on from the original Sage project, which developed the initial architecture, and widens the scope to include AI and DL workloads.

In a recent SIG IO UK digital event, Sai Narasimhamurthy, managing principal engineer at Seagate, gave a presentation on developments in the Sage 2 project. Narasimhamurthy explained that the project set out to address ‘classic extreme computing applications’ whose ‘continually changing IO requirements’ mean current hardware cannot keep up: ‘We also looked at the overlap between extreme computing and big data analysis in the Sage project but in Sage 2 we are bringing AI and DL applications to the table.’

In the presentation, Narasimhamurthy commented that AI and DL applications have unique requirements for IO and storage that are still evolving and require deeper analysis. ‘We are still trying to find out if there are unique classes of AI and DL applications that have unique IO requirements,’ said Narasimhamurthy.

Led by Seagate in the UK, the project is co-designed with specific applications and use cases. The prototype system, housed at the Jülich Supercomputing Centre, is based on four tiers of storage devices managed by an object store rather than a traditional parallel file system. ‘We are trying to explore the use of object storage, not just as an archival tier, but also as a scratch for HPC and big data analysis. We already have that implemented fully.’

The full list of participants in the Sage2 consortium is: Seagate (UK), Bull-ATOS (France), UK Atomic Energy Authority (UK), KTH Royal Institute of Technology (Sweden), Kitware (France), University of Edinburgh (UK), Jülich Supercomputing Centre (Germany) and the French Alternative Energies and Atomic Energy Commission (CEA) (France).

This group combines technology providers and application developers, so that the architecture can be developed alongside relevant codes for large-scale HPC, AI and big data analysis.

‘We have finished the codesign with the applications and we are beginning to do the application porting now, especially the AI and DL applications. For the global memory abstraction we have fully defined and implemented the special API for object mapping,’ added Narasimhamurthy. ‘There is a PMDK available on Arm and emulation of NVDIMM on the Arm platform. We are also actively contributing to the mainline Linux kernel.’

While there has been significant progress, this is a three-year project and several features are still being implemented. These include support for the Slurm scheduler, TensorFlow and dCache on Mero, as well as containers and high-speed object transfer.

In a blog post on the Seagate website, Shaun de Witt, Sage2 Application Owner at the UK’s Culham Centre for Fusion Energy, gives insight into the application partners’ ambitions for the project. In the post he states: ‘Science is generating masses and masses of data. It’s coming from new sensors that are becoming increasingly more accurate or generating more volumes of data. But data itself is pretty useless. It’s the information you can gather from that data that’s important.’

‘Making use of these diverse data sets from all these different sources is a real challenging problem. You need to look at this data with different technology. So some of it is suitable for GPUs. Some of it is suitable for standard CPUs. Some of it you want to process very close to the actual data — so you don’t want to put it into memory, you actually want to analyse the data almost at the disk level,’ added de Witt.

Sage2 will evaluate applications on the prototype system housed at the Jülich research centre. This work will focus principally on running these new classes of workloads while making optimal use of the latest generation of non-volatile memory. Sage2 also provides an opportunity to study emerging classes of disk drive technology, for example drives employing heat-assisted magnetic recording (HAMR) and multi-actuator technologies.

‘The Sage2 architecture actually allows us this functionality,’ de Witt explains. ‘It is the first place where all of these different components are integrated into a single layer, with a high speed network which lets us push data up and down and between the layers as fast as possible, allowing us to generate information from data at the fastest possible rate.’

The Sage2 project will utilise the SAGE prototype, which has been co-developed by Seagate, Bull-ATOS and Jülich Supercomputing Centre (JSC) and is already installed at JSC in Germany. The SAGE prototype will run the object store software stack along with an application programming interface (API) that Seagate is researching and developing further, both to accommodate the emerging class of AI/deep learning workloads and to suit edge and cloud architectures.

HPC and big data analysis use cases, and the technology ecosystem around them, are continually evolving, and AI and deep learning are bringing new requirements and innovations to the forefront.

It is critical to address these challenges today without ‘reinventing the wheel’, leveraging existing initiatives and know-how to build the pieces of the exascale puzzle as quickly and efficiently as possible.

Sage2 still has some way to go before it can validate this architecture, but the partners hope to lay the groundwork for reliable, high-performance storage for future large-scale science and research projects.

The storage system is being designed to provide significantly higher scientific throughput and better scalability, alongside an object storage system that hides from users the technical details of the data tiering used to achieve that performance.

While the Sage 2 project looks to develop future alternatives to current storage technology, today scientists with fast, large-scale storage requirements often select a parallel file system. Parallel file systems are well known for their scalability, delivering performance that tracks the capability of the underlying hardware.

Removing bottlenecks

Today many HPC vendors offer different approaches to tiered data management. The aim is to provide just as much ‘fast’ storage as performance requires, while placing larger or less frequently used files on ‘slow’ storage to reduce overall cost. Underpinning that performance, however, must be reliability, because downtime on large-scale systems can be extremely costly.
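As a rough illustration of that trade-off, the sketch places recently accessed files on a capacity-limited fast tier and sends everything else to a slow tier. It is a minimal, hypothetical policy: the `FileInfo` record, the `plan_tiers` function, the 7-day ‘hot’ window and the file names are all assumptions for illustration, not any vendor’s implementation.

```python
from dataclasses import dataclass

@dataclass
class FileInfo:
    path: str
    size_bytes: int
    days_since_access: float

def plan_tiers(files, fast_capacity_bytes, hot_window_days=7.0):
    """Map each file path to 'fast' or 'slow' (hypothetical policy).

    Files accessed within hot_window_days are candidates for the fast tier.
    The most recently accessed candidates are placed first (ties go to the
    smaller file) until the fast-tier budget is used up. Cold files, and hot
    files that do not fit, go to the slow tier.
    """
    placement = {}
    hot = [f for f in files if f.days_since_access <= hot_window_days]
    used = 0
    for f in sorted(hot, key=lambda f: (f.days_since_access, f.size_bytes)):
        if used + f.size_bytes <= fast_capacity_bytes:
            placement[f.path] = "fast"
            used += f.size_bytes
    # Anything not placed on the fast tier above falls through to slow storage.
    for f in files:
        placement.setdefault(f.path, "slow")
    return placement

# Example: only 4 GB of fast storage for three sequencing files (made-up sizes).
GB = 10**9
files = [
    FileInfo("a.bam", 1 * GB, 1),
    FileInfo("b.bam", 5 * GB, 2),
    FileInfo("c.bam", 10 * GB, 30),
]
result = plan_tiers(files, 4 * GB)
print(result)  # {'a.bam': 'fast', 'b.bam': 'slow', 'c.bam': 'slow'}
```

Sizing the fast tier is the cost lever: raising `fast_capacity_bytes` to 6 GB would also move `b.bam` to fast storage, at the price of buying more flash.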

Robert Murphy, director of product marketing at Panasas, comments on the importance of reliability and ease of use. ‘The majority of people in the research space use things like XFS and Isilon and the reason they pick those is because it works out of the box. Using Panasas today you can get the great reliability that we have always been known for and now the great performance and price-performance that off the shelf hardware can provide.

‘Whether you are running a single sequence or hundreds of thousands of sequences, the system is going to behave in a predictable manner. All those other NFS protocol access systems work great to a certain point, but when things really start to hammer them, those systems will start to fall off in performance,’ added Murphy. ‘What makes Panasas unique from that perspective is that, just like they did at UCSD, customers can take out the NFS protocol system and put in a Panasas system that is just as reliable and just as easy to deploy as the systems they have been used to all of these years. But when they really load it up and more and more data is being thrown at the cluster, the Panasas system gives them that great linear response curve as they scale up.’

Panasas helped UCSD develop its storage architecture by providing more HPC-focused technology in the form of a parallel file system. The extra storage capacity will help researchers accelerate their work by removing bottlenecks in processing and storing the sequencing data that is critical to their research.

The UC San Diego Center for Microbiome Innovation, a research centre on the UCSD campus, has begun research focusing on COVID-19. Researchers hope that a better understanding of the gut microbiome can tell us more about the virus and how it interacts with people. In April, the University of California San Diego announced it was expanding its ‘AI for Healthy Living (AIHL)’ collaboration with IBM to help tackle the COVID-19 pandemic.

AI for Healthy Living is a multi-year partnership leveraging a unique, pre-existing cohort of adults in a senior living facility to study healthy ageing and the human microbiome as part of IBM's AI Horizons Network of university partners.

‘In this challenging time, we are pleased to be leveraging our existing work and momentum with IBM through the AI for Healthy Living collaboration in order to address COVID-19,’ said Rob Knight, UC San Diego professor of pediatrics, computer science, and bioengineering and Co-Director of the IBM-UCSD Artificial Intelligence Center for Healthy Living. Knight is also Director of the UC San Diego Center for Microbiome Innovation.

Panasas’ Murphy noted that while some customers may have had bad experiences with complex file systems, Panasas’ focus on ease of use and accessibility has made deploying and managing a parallel file system much easier than it was just a few years ago. ‘The big thing is that other parallel file systems need to be tuned for a specific application either big files or small files or whatever specific workload is coming at it. With Panasas you do not have that requirement.’

One example is the Minnesota Supercomputing Institute, which uses Panasas storage to power its supercomputer. ‘They have chemistry and life sciences codes, but they also have astrophysics, engineering and many more types of code that all run on the Panasas parallel file system,’ added Murphy.

Delivering performance

Delivering performance across all workloads is a benefit of the tiered approach to parallel file system storage. As Curtis Anderson, senior software architect at Panasas, explains, using each storage device for what it does best delivers the best price-performance.

‘The fundamental difference is in how we approach different types of storage devices. Underlying all of the software technology – because we are basically a software company,’ notes Anderson. ‘In a traditional system it will be 100 per cent disk or 100 per cent flash and they might have an LRU caching layer on top. What we have done instead is merge the two layers and then scale them out, so that every one of our storage nodes has a certain amount of “tiering” even though we do not call it that internally,’ added Anderson.
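For contrast, the ‘traditional’ design Anderson describes, a small LRU cache layered on top of a larger, slower store, can be sketched as follows. This is a generic, hypothetical illustration built on Python’s `collections.OrderedDict`; the class name, the capacity and the dictionary standing in for disk are assumptions, and it does not model any particular product.

```python
from collections import OrderedDict

class LRUCacheLayer:
    """A small fast cache (e.g. flash) in front of a large slow store (e.g. disk).

    Reads are served from the cache when possible; on a miss the item is
    fetched from the backing store and cached, evicting the least recently
    used entry once the cache exceeds its capacity.
    """

    def __init__(self, backing, capacity):
        self.backing = backing        # stands in for the slow tier
        self.capacity = capacity      # number of items the fast tier can hold
        self.cache = OrderedDict()    # ordered from least to most recently used
        self.hits = 0
        self.misses = 0

    def read(self, key):
        if key in self.cache:
            self.cache.move_to_end(key)   # mark as most recently used
            self.hits += 1
            return self.cache[key]
        self.misses += 1
        value = self.backing[key]         # slow path: go to the backing store
        self.cache[key] = value
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict the least recently used item
        return value

store = {"a": 1, "b": 2, "c": 3}
layer = LRUCacheLayer(store, capacity=2)
for key in ["a", "b", "a", "c", "b"]:
    layer.read(key)
print(layer.hits, layer.misses)  # 1 4
```

In this model every device sits behind one cache that decides placement purely on recency, which is the behaviour Anderson contrasts with assigning each type of data to the device best suited to it.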

For example, the new ActiveStor Ultra from Panasas has six hard drives, SATA flash, NVMe flash and an NVDIMM stick. ‘The hard drives are really good at delivering bandwidth if all you do is give them large sequential transfers. We put all of the small files on top of the SATA flash, which is really good at handling small file transfers. The metadata is very latency-sensitive, so we move that to a database sitting on a super fast NVMe stick and then we use NVDIMM for our transaction log,’ said Anderson. ‘The net effect of all that is spreading the tiering across the cluster.’

This architecture is designed to ensure that there is just enough of each component to deliver the required performance without unnecessary costs. ‘In this way, every type of storage device is doing what it is good at. Hard drives are doing large transfers. SATA flash – we have just enough for the small transfers. We have just enough NVMe for the metadata that we need to store,’ concluded Anderson. ‘That is how you get both a cost-effective and broad performance profile.’
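The device-by-workload placement Anderson outlines can be expressed as a simple routing rule. This sketch is hypothetical: the `Device` enum, the `choose_device` function and the 64 KiB cut-off between ‘small’ and ‘large’ files are assumptions for illustration (the article does not state the real threshold), so it shows the idea rather than how PanFS actually works.

```python
from enum import Enum

class Device(Enum):
    HDD = "hard drive"
    SATA_FLASH = "SATA flash"
    NVME = "NVMe flash"
    NVDIMM = "NVDIMM"

# Hypothetical boundary between small and large files; not a Panasas value.
SMALL_FILE_LIMIT = 64 * 1024

def choose_device(kind: str, size_bytes: int = 0) -> Device:
    """Route a piece of data to the device class suited to it (illustrative)."""
    if kind == "log":
        return Device.NVDIMM       # transaction log
    if kind == "metadata":
        return Device.NVME         # latency-sensitive metadata database
    if kind == "file":
        # Small files go to SATA flash; large sequential data goes to hard drives.
        return Device.SATA_FLASH if size_bytes <= SMALL_FILE_LIMIT else Device.HDD
    raise ValueError(f"unknown kind: {kind!r}")

print(choose_device("file", 4096).value)         # SATA flash
print(choose_device("file", 2 * 10**9).value)    # hard drive
```

Because the rule routes by data type rather than by recency, each device class only needs to be sized for the data it actually holds, which is the cost argument Anderson makes.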

____

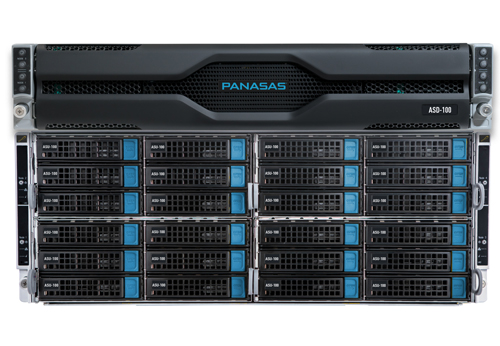

Panasas Featured product

The ActiveStor® Ultra turnkey HPC storage appliance offers the extreme performance, enterprise grade reliability and manageability required to process the large and complex datasets associated with HPC workloads and emerging applications like precision medicine, AI and autonomous driving.

ActiveStor Ultra runs the PanFS® parallel file system to offer a storage solution that provides unlimited performance scaling and features a balanced node architecture that prevents hot spots and bottlenecks by automatically adapting to dynamically changing workloads and increasing demands - all at the industry’s lowest total-cost-of-ownership.

https://www.panasas.com/products/activestor-ultra/?utm_source=scw