When I was a child, aeroplanes always held a certain fascination for me, as I could never quite grasp the ‘magic’ that enabled them to take flight. As an adult, I must confess that I still don’t thoroughly understand the mechanics or the underlying equations that define the physics, but it is easy to recognise the incredible sophistication of these systems. And in that sophistication lies the challenge. Aerospace is competitive, and the drive to optimise current designs, and indeed innovate new ones, is hitting the same stumbling block present in many other industries: physical testing can be prohibitively expensive, especially where a system of this scale is concerned. Simulation technologies offer an obvious solution, but a hesitation still exists regarding their use.

According to Altair Engineering’s Robert Yancey, an aerospace executive recently remarked that because it is so expensive to qualify the composite materials used on current aircraft, the company is scared of switching materials due to the extensive cost that will be entailed if it has to do so again for the next plane. This reluctance is despite the fact that it sees many advantages in the new materials being developed. This use of complicated composite materials on aircraft is, however, at least in part, driving the use of simulation as aerospace companies begin to realise that if they can figure out how to use simulation to reduce the amount of physical testing, both on a plane and material level, it will allow them to take advantage of any new materials becoming available.

‘On the new airplane programmes using a significant amount of composites,’ adds Yancey, ‘there were problems that could not have been solved without simulation. That not only helped increase its use in the aircraft design process, but enabled companies to gain confidence that simulation would provide important answers that could solve the problems they were facing.’ Beyond that, he says, is an increasing realisation in the aerospace community that simulation needs to be able to replace many of the physical tests. The introduction of composite materials has amplified this extensively, because composites mean a rise in the material variables that need to be tested.

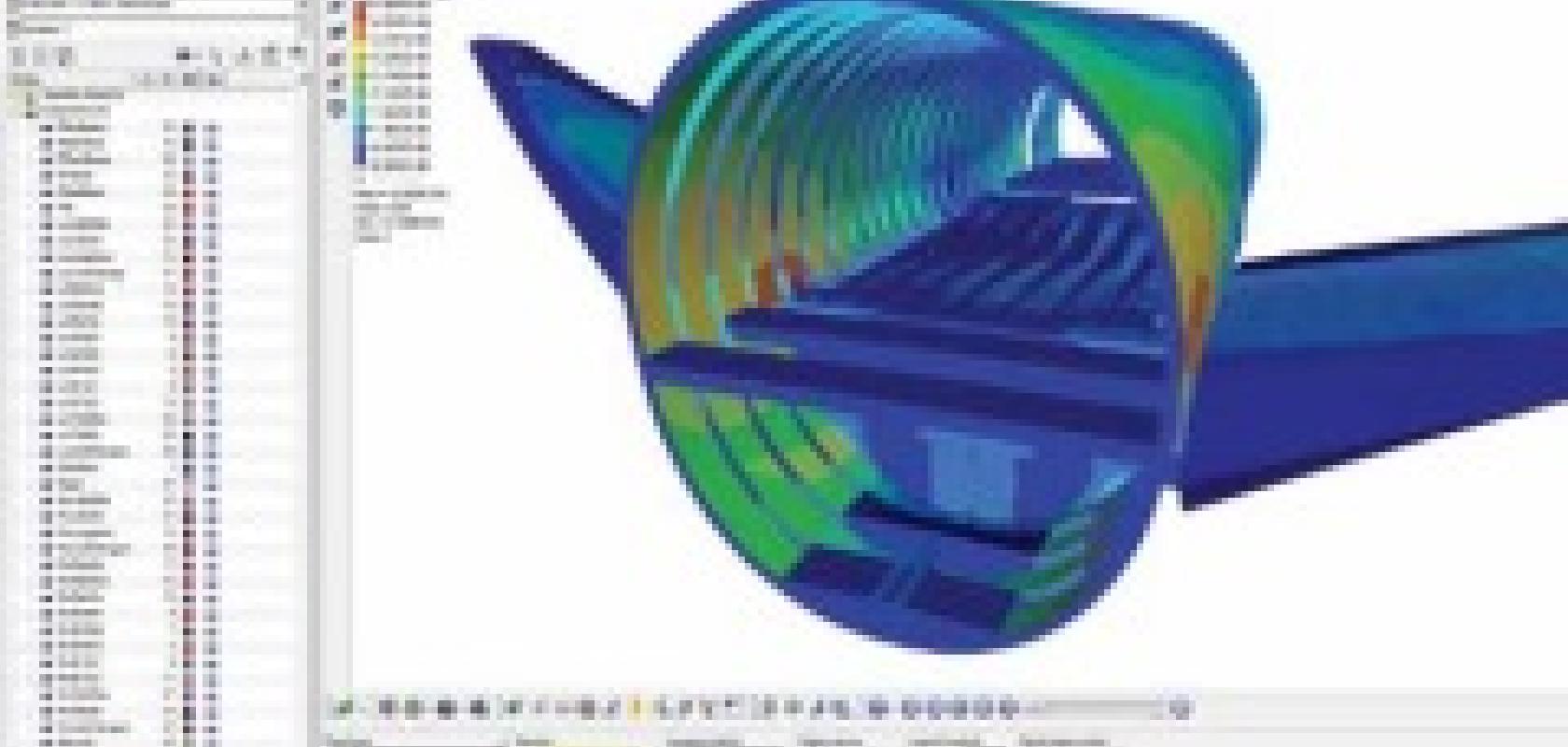

In addition to this increased acceptance, the ways in which simulation technologies are being exploited are also changing. According to Yancey, there is a definite interest, and indeed need, within aerospace for more multi-discipline analyses. ‘Traditionally, there have been islands of simulation activity and there is a growing trend to couple these aspects – for example, coupling aerodynamics with structural analysis, or thermal heating with structural analysis. That technology has been around on the university research level for a number of years, but is now making its way to commercial applications,’ he says.

Powering up

Trends like the coupling of activities are aided by the increase in computing power now being made available to simulation programs. While this provides the opportunity to run an increasing number of complex simulations, it also creates a problem of its own: growing datasets. Michael Papka, director at the Argonne Leadership Computing Facility, is acutely aware of this issue and explains that it is causing two main difficulties for research scientists: too much time to calculate and not enough disk space. ‘Typically,’ he says, ‘data analysis takes place once the simulation data has been collected and compiled. But for large-scale simulation runs involving hundreds of thousands of processors and processor hours, a scientist or engineer needs to analyse a data subset at several points along the way in order to decide how to proceed. This process has become a major challenge.’

Papka continues by stating that there are two data analysis approaches at the forefront of research: in situ analysis, where the analysis runs on the same compute resources as the simulation, and co-analysis, where the simulation data is moved to a separate resource for analysis. ‘Whereas the in situ approach requires the researchers to know what questions they want answered beforehand, co-analysis allows far more freedom and flexibility to analyse data on the fly,’ he explains.
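The distinction Papka draws can be illustrated with a toy sketch. Everything in it is an assumption made for illustration: the random-number ‘simulation’, the function names and the choice of questions are hypothetical and have nothing to do with the ALCF’s actual infrastructure. It shows only that the in situ route must fix its question in advance, whereas staged data can still be interrogated afterwards.

```python
import random


def simulate(n_steps, n_cells, seed=0):
    """Toy stand-in for a simulation: yields (step, values) for each time step."""
    rng = random.Random(seed)
    for step in range(n_steps):
        yield step, [rng.random() for _ in range(n_cells)]


def run_in_situ(n_steps, n_cells):
    """In situ: the question (here, the per-step maximum) is fixed up front and
    answered where the data is produced; the raw values are then discarded."""
    return [max(values) for _, values in simulate(n_steps, n_cells)]


def run_co_analysis(n_steps, n_cells, every=2):
    """Co-analysis: selected steps are moved to a separate 'resource'
    (here simply a list) so that the questions can be chosen later."""
    staged = []
    for step, values in simulate(n_steps, n_cells):
        if step % every == 0:
            staged.append((step, values))
    return staged


in_situ_maxima = run_in_situ(6, 100)
staged = run_co_analysis(6, 100)

# A question nobody planned for can still be asked of the staged data.
staged_minima = {step: min(values) for step, values in staged}
```

The trade-off is visible even at this scale: `run_in_situ` keeps one number per step, while `run_co_analysis` keeps whole steps and so pays for its flexibility in data movement and storage.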

According to Papka, co-analysis is an active research effort at the ALCF, and it is among the set of emerging tools and methods now enabling scientists to use supercomputers as tools for inquiry. He comments that there are still other issues to solve, such as latency, interaction and time-to-solution, but is confident that these challenges will be overcome. He adds that if scientists follow the in situ approach and don’t store the data, they run the risk of missing something.

‘Groups at other Department of Energy (DOE) national laboratories and at several universities are also working on ways to reduce the amount of data being written to storage and methods to provide faster insight,’ he says. ‘All of us working in the field are keenly aware that the days of writing everything to disk are gone, especially as we move toward exascale. Exascale will radically change how scientists and engineers make major discoveries, and the DOE is leading the charge to fund novel tools and methods that will allow them to optimise every step in the simulation and analysis pipeline.’

At Argonne, Papka’s research team worked with Ken Jansen, Professor of Aerospace Engineering Sciences, and his team from the University of Colorado to modify his simulation code. Papka explains that the modifications were done to connect a standard analysis tool at various points in time, to query and visualise the data, and to home in on certain phenomena – such as what was happening with the airflow. This ability to quickly identify regions of interest, which are then moved out to a separate resource for fine-grained analysis, is extremely useful for Jansen’s application.

‘We demonstrated this capability at the 2011 Supercomputing conference when we ran Ken’s application on Intrepid, our Blue Gene/P machine, while he queried and analysed the data,’ says Papka. ‘Our infrastructure allows for “hooks” in the simulation code to plug in tools and easily visualise information in real time.’

Managing the load

In an effort to get the maximum possible utilisation out of compute systems, aerospace companies are investing in workload management software. Altair Engineering’s Robert Yancey comments: ‘Aerospace customers come to us and say that they want to be able to use hardware resources in the most efficient manner, with very little idle time, but the difficulty is that these companies place a large number of rules on their simulation jobs. Certain types of analyses may automatically get higher priority, for example, and so our software enables companies to program in their policies and procedures for the use of specific compute clusters.’ The benefit of this approach, he says, is that not only do users know that the work will be done as fast as possible, but IT departments are able to build and manage resources in a flexible manner.

Pietro Cervellera, also of Altair Engineering, adds that this is especially important for international enterprises using machines located throughout the world. ‘In environments where several engineers could each be running a simulation, creating many models and then running analyses that are very expensive in terms of compute power, it is important jobs can be submitted to the most efficient computational resource at any given moment,’ he says.
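As a rough illustration of the kind of policy Yancey and Cervellera describe, the sketch below queues jobs by a priority table and sends each one to a cluster that can hold it. It is not Altair’s software: the priority table, the job types, the cluster names and the rule that ‘most efficient’ means ‘most free cores’ are all assumptions made for the example.

```python
import heapq
from dataclasses import dataclass, field

# Hypothetical site policy: a lower number means a higher priority.
PRIORITY_BY_TYPE = {"certification": 0, "crash": 1, "cfd": 2}
DEFAULT_PRIORITY = 5


@dataclass(order=True)
class Job:
    priority: int
    seq: int  # submission order, used to break priority ties
    name: str = field(compare=False)
    cores: int = field(compare=False)


def schedule(jobs, clusters):
    """jobs: list of (name, job_type, cores); clusters: dict of name -> free cores.
    Returns (job name, cluster name or None) pairs in dispatch order."""
    queue = []
    for seq, (name, job_type, cores) in enumerate(jobs):
        priority = PRIORITY_BY_TYPE.get(job_type, DEFAULT_PRIORITY)
        heapq.heappush(queue, Job(priority, seq, name, cores))

    free = dict(clusters)  # work on a copy so the caller's dict is untouched
    placements = []
    while queue:
        job = heapq.heappop(queue)
        candidates = [c for c, cores in free.items() if cores >= job.cores]
        if not candidates:
            placements.append((job.name, None))  # nothing fits; the job would wait
            continue
        # Assumption: the 'most efficient' resource is the one with most free cores.
        best = max(candidates, key=lambda c: free[c])
        free[best] -= job.cores
        placements.append((job.name, best))
    return placements


jobs = [
    ("wing_cfd", "cfd", 64),
    ("panel_cert", "certification", 128),
    ("gear_motion", "motion", 32),
]
clusters = {"cluster_a": 96, "cluster_b": 256}
placements = schedule(jobs, clusters)
```

Here the certification job jumps the queue even though it was submitted second, which is the ‘certain types of analyses automatically get higher priority’ rule in miniature. A production scheduler would also have to handle running jobs, licences and data locality, none of which is modelled here.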

Efficiency is a necessity in any industry, and within simulation one of the biggest challenges, according to Cervellera, is a lack of harmony. ‘There are many unconnected islands within simulation, such as CFD, structural simulation and motion simulation for flaps and landing gear, and specific problems require the use of extra tools. One of the prominent trends at the moment is the need for these tools to be harmonised.’ He continues by saying that, in the past, there were attempts to deploy one simulation tool that would solve all problems, but each of these attempts failed and the industry is now agreeing that different expert tools are needed for individual simulation disciplines.

Altair provides a single working environment that can be used for mathematical modelling, result visualisation, process creation, and data and process management. It provides a platform of integration that enables engineers to harmonise processes – a crucial point given that each process in aerospace requires a multi-discipline approach.

In conclusion, Cervellera states that the paradox commonly seen in aerospace is that on the one hand there is an increasing need for simulation reliability, complexity and accuracy – which is why multi-physics models and multi-scale models are coming into play – and on the other there is the need to simplify and standardise simulation processes. ‘Standard processes must be defined and applied throughout the supply chain in order to ensure the quality of the simulation work,’ he advises.

David Whittle, sector director for Space, Government and Defence at Tessella, discusses why spacecraft design brings its own set of challenges

With some aircraft systems, flight data can be gathered in advance and modifications made during the process, but with a spacecraft, once it launches it has to work. There really isn’t much scope for tweaking things at a later date, other than where the software is concerned, and we therefore need to be very sure that the system can be put into safe mode should anything go wrong and that the mode is going to be very robust.

Working with organisations like the European Space Agency, we use the MathWorks toolset – and specifically Matlab Simulink – to create models and run simulations of engineering situations. One of the problems commonly faced within the industry is that people often design by simulation. In our experience, this is a mistake as it’s all too easy to jump in, use the tools and start putting things together without taking a step back. These toolsets are great to use, but people need to begin with an engineering knowledge of the problem and then use the simulation modelling to help solve whatever difficulty is being faced. Long before diving into the toolset, design engineers should take a blank sheet of paper and truly understand what it is they are trying to achieve. The design can then be verified or further extrapolated from the models.

The biggest challenge is ensuring that everything is accurate. The testing and simulation campaigns serve to prove the envelope of all the possible combinations of events because we have to verify, and be seen to be able to verify, that the system is robust in any situation that might occur – there is little chance of recovery otherwise. Another crucial challenge is finding the right staff who can work with both the simulation and modelling packages, but who also have the engineering understanding of the issues to enable them to design robustly in the first place.

In a space mission, we can’t conduct ground tests for every eventuality. While in orbit, for example, a spacecraft will have to do various manoeuvres, such as spins and twists, that simply cannot be duplicated in a lab using the real hardware. Instead, we try to simulate the space environment so that when we’re designing a control system that can cope with the firing of rocket motors, we have a means of testing how robust the algorithms will be in any given situation. In terms of the environment, we model the position of the sun and stars, the orbit dynamics and the effect of gravity, as well as characteristics such as the actuators that are going to move the spacecraft around, the mechanical systems, the movement of the fuel in the tanks, and the flexible modes of the arrays.

To a large degree, the MathWorks products are viewed as an industry standard in this area. We’ve been using the tools for many years and when feeding back any problems or issues we’ve encountered with the software, the company has been quick to respond. There were times when we had to write our own add-ins, but we haven’t had to do that for quite some time. MathWorks also offers the option of selling packages on another company’s behalf within a partner programme.

Looking forward, within the testing environment the trend is going to move more towards the use of modelling and simulation. If doing a missile test, for example, the design engineer should be modelling the expected behaviours and exploring the envelope as far as possible before using that mechanical test to verify the models. As costs continue to be a deciding factor, I believe that as an industry we will be running far more verifications through modelling, even in environments where it could be done in other ways.