What was the earliest example of digital data? The answer is surprisingly clear and probably earlier than you think. Some 30,000 years ago, in the Palaeolithic era, someone put 57 scratches on a wolf bone. These are arranged in groups of five, making the first known example of something that is still used from time to time: a tally stick. It is also an example of digital data. For almost all human history, however, such data was scarce, relatively few people handled it and it was easy to manage. Only half a century ago the Moon landings were controlled by banks of computers that together held less data than one of today’s iPhones. More than 200 million of these were sold in 2017 alone.

We are now in an age of big data, but what makes it big? The term was coined in 2006, but five years earlier an analyst called Doug Laney had described the growing complexity of data through ‘three Vs’: volume, velocity and variety. That is, there is a lot of data, it is being generated very fast, and it comes in many different kinds. Two more Vs have since been added: veracity (the data should be trustworthy and verifiable) and value (it can and should be useful).

The count of 57 scratches on that Palaeolithic wolf bone fits into one byte of data, as does any integer from 0 to 255. If you are interested enough to be reading this, you probably own at least one device with a hard drive of 1TB or more. You could fit one trillion virtual wolf bones onto such a drive or, only slightly less implausibly, almost 20,000 copies of the complete works of Shakespeare.
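
The byte arithmetic can be checked in a few lines of Python. This is a minimal sketch that assumes decimal (SI) units, as drive manufacturers use, so that 1TB is 10¹² bytes; the function and constant names are illustrative only.

```python
# One byte is 8 bits, so it holds any integer from 0 to 2**8 - 1 = 255.
BYTE_MAX = 2**8 - 1

# Assumption: decimal (SI) terabyte, as used for drive capacities.
TERABYTE = 10**12

def fits_in_one_byte(n: int) -> bool:
    """Return True if a non-negative integer can be stored in a single byte."""
    return 0 <= n <= BYTE_MAX

tally = 57                          # the scratches on the wolf bone
encoded = tally.to_bytes(1, "big")  # exactly one byte

try:
    (256).to_bytes(1, "big")        # one more than a byte can hold
except OverflowError:
    print("256 needs a second byte")

# Each "virtual wolf bone" is one byte, so a 1TB drive holds 10**12 of them.
wolf_bones_per_tb = TERABYTE // len(encoded)
print(f"{wolf_bones_per_tb:,}")     # 1,000,000,000,000
```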

Scientific datasets are several orders of magnitude larger still. Within the sciences, particle physics tops the data league: the data centre at CERN, home of the Large Hadron Collider, processes about 1PB (1,000TB, or 20 million Shakespeares) of data every day. Biomedicine may be some way behind, but it is fast catching up, driven first and foremost by genomics.

Sequencing the human genome

The first human genome sequence was completed in 2003, after 15 years’ research and an investment of about $3bn. The same task today takes less than a day and costs less than a thousand dollars. There is probably no tally of the number of human genomes now sequenced, but Genomics England’s project to sequence, within a few years, 100,000 genomes from people with cancer or rare diseases and their close relatives gives an idea of what is now possible. The raw sequence data for a single genome occupies about 30GB (30 × 10⁹ bytes) of storage, and the processed data about 1GB, so in theory you could fit a thousand processed genomes on a 1TB home hard drive (though with little space left for anything else).
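
The storage figures above can be reproduced with simple integer arithmetic. This sketch takes the per-genome sizes from the text, assumes decimal units and ignores filesystem overhead. The 100,000-genome total is an extrapolation from the per-genome figure, not a number reported by Genomics England.

```python
# Decimal (SI) units assumed throughout.
GB = 10**9
TB = 10**12
PB = 10**15

RAW_GENOME_BYTES = 30 * GB       # raw sequence data per genome (figure from the text)
PROCESSED_GENOME_BYTES = 1 * GB  # processed data per genome (figure from the text)

def genomes_that_fit(capacity_bytes: int, bytes_per_genome: int) -> int:
    """Whole genomes that fit into a given capacity, ignoring filesystem overhead."""
    return capacity_bytes // bytes_per_genome

print(genomes_that_fit(1 * TB, PROCESSED_GENOME_BYTES))  # 1000
print(genomes_that_fit(1 * TB, RAW_GENOME_BYTES))        # 33

# Extrapolation (assumption): raw data for 100,000 genomes at 30GB each, in PB.
project_raw_pb = 100_000 * RAW_GENOME_BYTES / PB
print(project_raw_pb)  # 3.0
```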

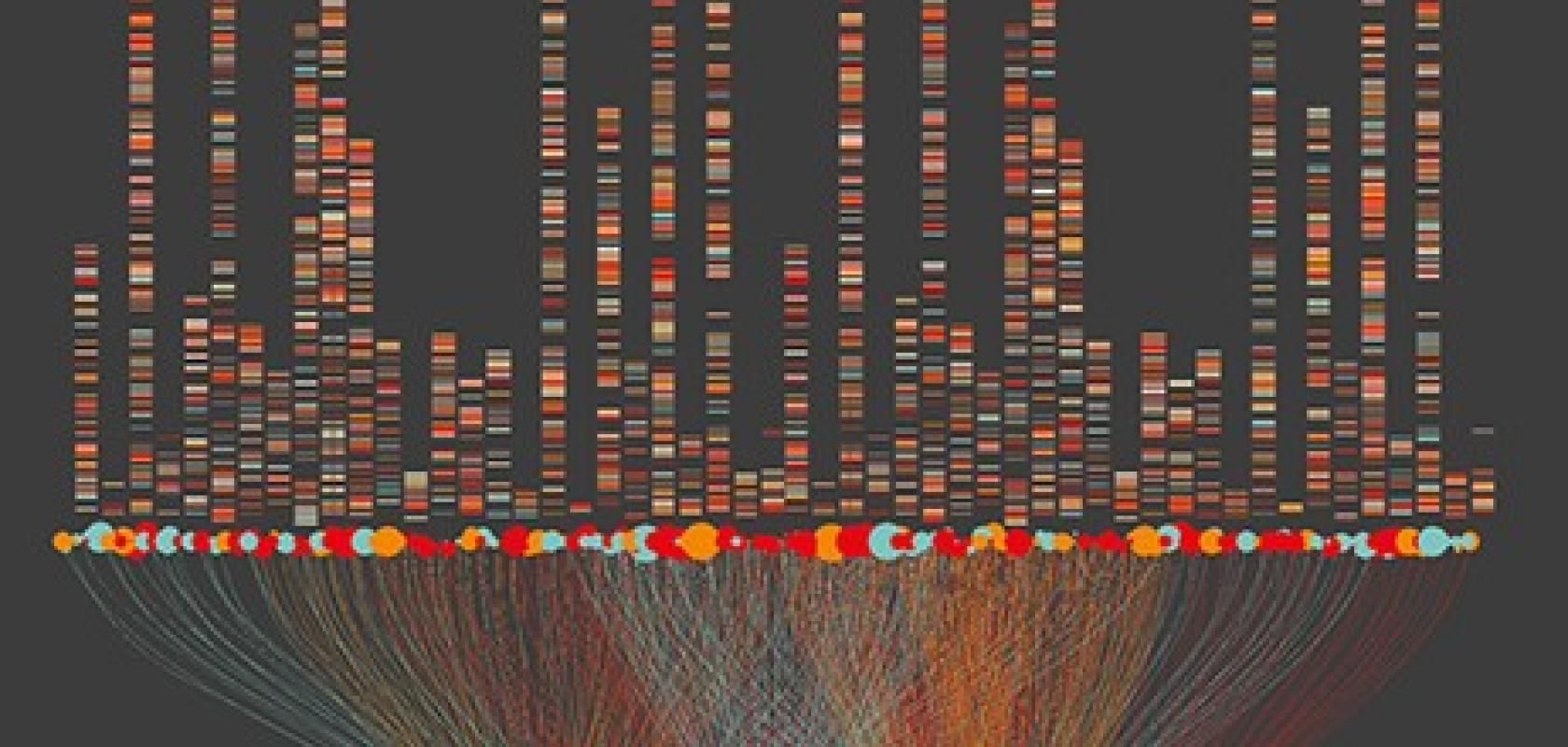

About a third of that first human genome was sequenced at the Wellcome Trust Sanger Institute, south of Cambridge. This is now one of the largest repositories of gene sequences and associated data in the world, and possibly the largest in Europe. Data in the form of DNA sequences – As, Cs, Gs, and Ts – pours off its sequencers at an unprecedented rate, initially destined for the Sanger Institute’s private cloud and massive data centre.

This centre was extended from three ‘quadrants’ to four in the summer of 2018, giving the Institute a massive 50PB in storage space. ‘We now generate raw data at the rate of about 6PB per year, but even keeping scratch space free for processing, we should have enough capacity for the next few years’, says the Institute’s director of ICT, Paul Woobey. The data is managed using an open source data management system, iRODS, which is becoming a favourite of research funding bodies in the UK and elsewhere. ‘One benefit of iRODS is that it is highly queryable’, adds Woobey. ‘This makes it easy to locate, for instance, all the data produced by a particular sequencer on a particular day.’
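
iRODS lets users attach metadata to stored files as attribute–value–unit (AVU) triples and then search on that metadata. The sketch below is a toy, in-memory Python model of that idea. It does not use the iRODS API, and the attribute names (`sequencer`, `run_date`), file paths and sequencer IDs are all hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class DataObject:
    """A stored file plus its metadata, modelled as (attribute, value, unit) triples."""
    path: str
    avus: list[tuple[str, str, str]] = field(default_factory=list)

    def has(self, attribute: str, value: str) -> bool:
        """True if any metadata triple matches this attribute and value."""
        return any(a == attribute and v == value for a, v, _unit in self.avus)

def query(catalogue: list[DataObject], **conditions: str) -> list[str]:
    """Return paths of objects whose metadata matches every attribute=value condition."""
    return [
        obj.path
        for obj in catalogue
        if all(obj.has(attr, val) for attr, val in conditions.items())
    ]

# Hypothetical catalogue: paths, attribute names and sequencer IDs are invented.
catalogue = [
    DataObject("/zone/seq/run1.cram", [("sequencer", "SEQ-07", ""), ("run_date", "2018-07-02", "")]),
    DataObject("/zone/seq/run2.cram", [("sequencer", "SEQ-07", ""), ("run_date", "2018-07-03", "")]),
    DataObject("/zone/seq/run3.cram", [("sequencer", "SEQ-12", ""), ("run_date", "2018-07-02", "")]),
]

# "All the data produced by a particular sequencer on a particular day":
print(query(catalogue, sequencer="SEQ-07", run_date="2018-07-02"))
# ['/zone/seq/run1.cram']
```

Because the query matches on metadata rather than on directory layout, the same files can be found by sequencer, by date or by any combination of attributes.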

By itself, DNA sequence data means very little; like almost any form of data, it only becomes meaningful once it is analysed. Much of this analysis is done in-house, but many researchers worldwide need access to the Sanger data.

The growth in genomics data

Some years ago, scientists would routinely download Sanger datasets, but their sheer size now often makes this impossible. Tim Cutts, head of scientific computing at the Institute, explains: ‘In an extreme scenario, it would take a user a year and cost a fortune to download all our data, even over the fastest commodity networks in the world’. Because this is clearly impractical, the default now is for researchers to log in to a server and analyse the data onsite or in the cloud. Another approach is to perform an initial analysis as soon as the data is generated, keep the results and discard the raw data. ‘Data analysis on the fly is commonly used in some disciplines, including particle physics and crystallography, but it has only recently become common in bioinformatics, which is a younger science’, adds Cutts.
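
Cutts’s point can be sanity-checked with back-of-the-envelope arithmetic. The inputs here are assumptions, not figures from the Institute: the full 50PB of storage is treated as the data volume, and the link is assumed to sustain 10 Gbit/s with no protocol overhead.

```python
SECONDS_PER_DAY = 86_400
PB = 10**15  # decimal petabyte

def transfer_days(n_bytes: float, link_gbit_per_s: float) -> float:
    """Days needed to move n_bytes over a link sustaining link_gbit_per_s (no overhead)."""
    bits = n_bytes * 8
    seconds = bits / (link_gbit_per_s * 10**9)
    return seconds / SECONDS_PER_DAY

# Assumed inputs: 50PB of data over a sustained 10 Gbit/s connection.
days = transfer_days(50 * PB, 10)
print(f"{days:.0f} days, about {days / 365:.1f} years")  # 463 days, about 1.3 years
```

Real transfers would be slower still, since protocol overhead, contention and storage read speeds all eat into the nominal link rate.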

Cutts expects that the sequencing capacity of the Sanger Institute will grow further over the next few years, putting further demands on its data storage and analysis capacity. However, the most significant growth is likely to be in another of big data’s Vs: variety. Increasingly, genomic data will be integrated with physiological and pathological data from individuals’ health records, accelerating the growth of personalised medicine.

‘We are developing datasets and analysis tools for integrating these diverse data types, so they can be used for drug discovery’, says Cutts. ‘But we have no ambition to become involved in this ourselves.’ BenevolentAI, headquartered in London and New York, is one of a new type of bioinformatics company that is using machine learning to look for patterns in such diverse data and to discover or re-purpose ‘the right drug for the right patient at the right time’.

The reputation of the Sanger Institute and, by association, the University of Cambridge in genetics and genomics is matched at the ‘other place’, Oxford, by an equally strong one in other data-rich biomedical sciences: epidemiology and population health.

The pioneering British Doctors’ Smoking Study began in Oxford in 1951 when academics Richard Doll and Austin Bradford Hill, both later knighted, sent a survey of smoking habits to almost 60,000 registered British doctors. Over two-thirds of the doctors returned questionnaires, and the data gathered was of sufficient statistical power to demonstrate smokers’ increased risk of death from lung cancer and from heart and lung disease within five and seven years respectively.

Over 60 years later, research in these disciplines has been integrated with the rest of Oxford’s biomedical research in a new Big Data Institute, part-funded with a generous donation from one of Asia’s richest and most influential entrepreneurs, the Hong Kong billionaire Li Ka-Shing.

This institute hosts the single biggest computer centre in Oxford and takes in data from all over the world. ‘Our largest datasets come from imaging and genomics, but we also hold gene expression and proteomics data, as well as consumer data and NHS records’, says the Institute’s director, Gil McVean. ‘And “imaging data” itself covers a huge variety of modalities and therefore of image types, from whole-body imaging and functional MRI brain scans to digital pathology slides.’

The Big Data Institute holds all the data collected through one of the largest epidemiology initiatives yet launched: the UK Biobank, designed to track diseases of middle and old age in the general population. This recruited half a million middle-aged British individuals between 2006 and 2010. Initially, they provided demographic and health information, blood, saliva and urine samples, and consented to long-term health follow-up.

This information, including universal genome-wide genotyping of all participants, is being combined with health records including primary and secondary care and national death and cancer registries, to link genetics, physiology, lifestyle and disease.

Many participants have also been asked to answer further web-based questionnaires or to wear activity trackers for a set period, and the genetic part of the study is still being extended, as UK Biobank’s senior epidemiologist, Naomi Allen, explains. ‘A consortium of pharma companies, led by Regeneron, has funded exome sequencing – that is, sequencing the two per cent of the genome that actually codes for functional genes – of all participants. They hope to complete the sequencing by the end of 2019 and UKB will make the data publicly available to the wider research community a year later.’ The eventual, if ambitious, aim of UK Biobank is to sequence the complete genomes of all 500,000 participants over the next few years and to release that data, too, to the research community.

Ensuring data access and anonymity

Much academic, and even some commercial, biomedical research is now carried out under open access principles. These can be hard to apply to human health data, however, because participants’ privacy must also be protected. The UK Biobank restricts access to bona fide scientists who intend to use the data in the interest of public health; within that condition, the research projects vary widely. Before being made available to researchers, all the data are ‘pseudonymised’ using encrypted identifiers that are unique to each research project.
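
The article does not say which scheme UK Biobank uses to generate its project-specific identifiers. One common way to achieve the same property is a keyed hash (HMAC) with a separate secret key for each project. Note that HMAC is a keyed one-way function, not encryption, so this is a swapped-in technique. With it, a participant keeps the same pseudonym within one project but receives unrelated pseudonyms in different projects, so two projects’ datasets cannot simply be joined on the identifier. The participant ID below is hypothetical.

```python
import hashlib
import hmac
import secrets

def new_project_key() -> bytes:
    """Generate a random 256-bit secret key for one project (held by the data custodian)."""
    return secrets.token_bytes(32)

def pseudonymise(participant_id: str, project_key: bytes) -> str:
    """Derive a stable, project-specific pseudonym with HMAC-SHA256 (an assumed scheme)."""
    digest = hmac.new(project_key, participant_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]  # truncated to 64 bits for readability

key_a = new_project_key()  # hypothetical project A
key_b = new_project_key()  # hypothetical project B

# The same (hypothetical) participant gets a stable ID within each project,
# but unrelated IDs across projects.
print(pseudonymise("P0001", key_a), pseudonymise("P0001", key_b))
```

Without the project key, the pseudonym cannot feasibly be recomputed from a participant ID, which is why the keys stay with the data custodian rather than the researchers.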

However, with the amount of detailed data available nowadays, it is becoming harder to guarantee anonymity. ‘Some datasets, particularly those including genomic data, are so rich that it becomes possible – if very hard – to track down an individual from their data, even if all identification has been removed’, explains McVean.

One perhaps drastic solution is the creation of artificial data records. These have similar characteristics to patient records and can be fully analysed, but they represent no real individuals. Edwin Morley-Fletcher, CEO of the Rome-based technology consultancy Lynkeus, has pioneered machine learning methods for creating this type of data for e-clinical trials. ‘We use a method called recursive conditional parameter aggregation to create data for a set of artificial patients that is statistically indistinguishable from the real patient data it was derived from,’ he explains.

Any health study that depends on volunteers providing data can also suffer from volunteer bias: people interested enough in their health to join such studies tend to be healthier than the population as a whole. It is possible to collect data directly from the millions of Fitbit fitness trackers in regular use, but this would produce an even more biased dataset, as Fitbit owners tend to be richer, more tech-savvy and more health-conscious than average.

Martin Landray, deputy director of the Big Data Institute, was involved in the Biobank study of exercise and health, which overcame some of this bias by sending accelerometers to 100,000 volunteers and asking them to wear them for a week.

Each one returned 100 data points per second, generating a huge dataset. ‘This presented a big data challenge in three ways: in logistics, in “cleaning” the raw data for analysis and in machine learning, picking out the movement patterns associated with each type of activity,’ he says. ‘Only then could we – after discarding outliers – detect any relationship between actual (as opposed to self-reported) activity levels and health outcomes.’
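
To give a sense of scale, the raw count of data points follows directly from the figures in the text. This sketch assumes every volunteer wore the device for exactly seven days and counts each reading once (real devices typically record several axes per reading).

```python
SAMPLES_PER_SECOND = 100            # from the text
SECONDS_PER_WEEK = 7 * 24 * 60 * 60  # assumption: exactly one week of wear
PARTICIPANTS = 100_000              # from the text

per_person = SAMPLES_PER_SECOND * SECONDS_PER_WEEK
total = per_person * PARTICIPANTS

print(f"{per_person:,} data points per participant")  # 60,480,000 data points per participant
print(f"{total:,} data points in total")              # 6,048,000,000,000 data points in total
```

At roughly six trillion readings, even simple steps such as discarding outliers have to be automated.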

But whose data is it anyway? All biomedical data, including ’omics data, is derived from one or more individuals, and much of it is collected from willing volunteers. And because anonymisation cannot be fully guaranteed in all circumstances, these individuals, as ‘data subjects’, must have some rights over their data.

Anna Middleton, head of society and ethics research at the Wellcome Trust Genome Campus (the home of the Sanger Institute), leads a global project called ‘Your DNA Your Say’ that aims to discover how willing people are to donate and share their genomic and other biomedical data. ‘We are finding that people are more willing to share their data if they understand what it means, and if they trust the scientists who will be using it,’ she says. ‘Unfortunately, levels of knowledge and trust are low in many countries, and scientists must do more to communicate the meaning and value of our big data and how it drives our work.’