Scientific Computing World gathered a panel of leading experts to discuss how effective data management can drive research and laboratory efficiency and enable future capabilities. Mass spectrometry, a crucial tool across many sectors, was a key focus for several of our panellists.


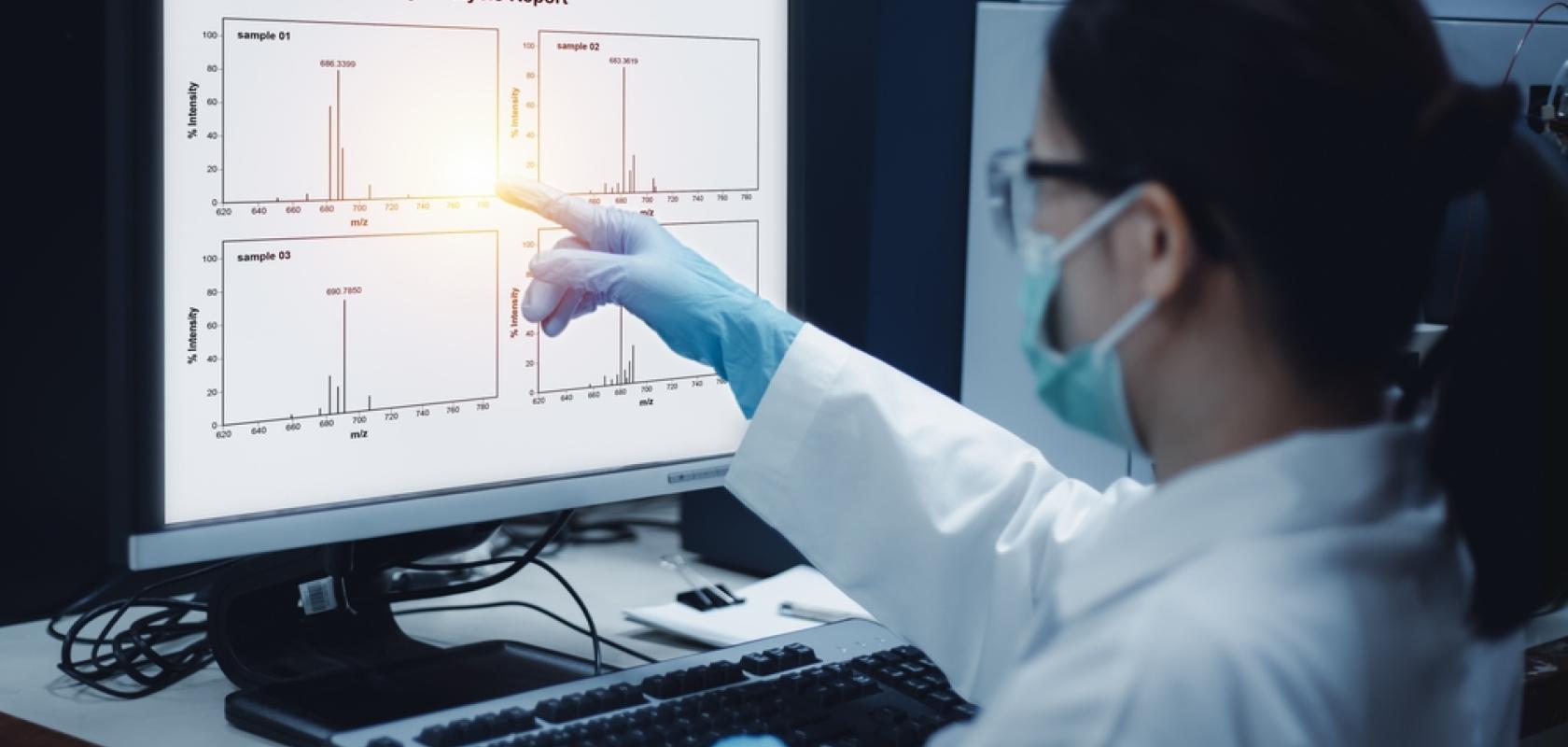

The primary challenge of mass spectrometry – at least as far as the data it generates is concerned – is not just sheer volume. There are several other aspects to factor in, such as how it is collected, in what format and how well structured and annotated it is.

Lisa M Bacco, Principal Scientist at animal health drug company Zoetis, says: “Mass spectrometry is such a powerful tool that it can be used in almost every sector. Even within pharmaceuticals and biotechnology, you can use it for biomarker discovery, drug discovery, development, QA/QC of drugs in manufacturing and so on. So, identifying data challenges in mass spec is unique to its specific application and use cases.

“I’m involved in proteomics - which, on the Gartner Hype Cycle, has been in the Trough of Disillusionment for the past two decades as we’ve become overwhelmed with the speed and scale of technological advancements. Now we’re climbing the Slope of Enlightenment as we approach the production phase – especially in the context of biological mass spectrometry. The challenges we face are really around data sovereignty. This includes ensuring the data is stored, processed and shared following best practices. On the practical level, this includes the ability to store and process our data at a scale that supports clinical applications.

“On scalability, you may have hundreds or thousands of patients, where data is collected longitudinally. The scale of proteomics data is now up there with genomics – and in some settings, laboratories can process 300-500 samples per day. This scale brings with it huge cost in terms of storage, as well as the need for greater processing power.”

Prashi Jain, Director, Drug Discovery at Iterion Therapeutics - a clinical-stage pharmaceutical company based in Houston, Texas - adds: “My background is in medicinal chemistry, where I initially worked with LC/MS (liquid chromatography / mass spectrometry) to monitor my synthetic reactions, mainly with small molecules. Back then – about a decade ago – that process was fairly straightforward. As the field and my responsibilities evolved, my focus has shifted to more advanced applications such as PKPD (pharmacokinetic pharmacodynamic) analysis and DNA encoded libraries. These projects generate large, complex data sets that require substantial processing power and storage.”

Adapting to AI - and other modern data demands

It’s not just current data that one has to consider – it’s also legacy data. “We have extensive historical LC/MS datasets,” continues Jain, “that could still be useful for applications such as deciphering or confirming reaction mechanisms. The difficulty lies in integrating the older data with current databases so that it can be used with today’s modern tools, including AI.

“Fortunately, this issue mainly affects older data and legacy instrumentation. Newer instruments are typically designed with integration in mind, which makes things easier in practice.”

Legacy data is also an issue faced by Lars Rodefeld, a scientific consultant who spent 27 years at Bayer CropScience. “Bayer is a conglomerate, built out of several different companies,” he says. “What that meant was we had lots of different instrumentation and data systems, making it virtually impossible for them to talk to each other. There are tools out there to help you overcome this, of course, but at the outset, they were never designed to talk to each other.

“Usually, instrumentation in the field of process chemistry will sync to a LIMS system. But, to pull data out of a LIMS and use it with modern AI tools is really difficult.

“So, we put in a strategy to overcome this, naively thinking it might take two or three years, but it will take almost a decade and become extremely costly. In some areas of scientific research, connectivity is essential and everybody in the company supports it. If you’re in analytical chemistry, you’re still sometimes using the equipment itself and a USB stick.

“Mass spectrometry equipment is very expensive – and that leads to long usage times. In our team, we had more than 30 mass spec devices, which were on average seven years old. We had some brand new kit and some really old stuff still running Windows XP on island servers that were never designed to be connected to anything at all.

“Comparatively speaking, NMR (nuclear magnetic resonance) spectroscopy is easy – there's one provider and one platform. With mass spec, you have multiple providers and multiple platforms that don’t talk to each other, making it very difficult to connect to data landscapes providing FAIR data.”

FAIR data and formats

Encouraging the use of FAIR data and supporting supply-side pharma companies is very much what Birthe Nielsen does in her capacity as a consultant with the Pistoia Alliance, a global, not-for-profit alliance of life science companies, vendors, publishers, and academic groups. “We work specifically on the ‘how to’ for data management and governance,” says Nielsen. “This is not competitive, so it is an area with perfect use cases for pre-competitive collaborations.

“R&D is increasingly multi-omics, meaning you have to link many different platforms. We ran a project recently on how one tackles the issue of taking one proprietary format and importing it into another vendor platform. Normally, these transfers take a lot of human effort through transcribing or translating data! We developed a data model that converted everything into a standardised format that could transfer data across different vendor systems in a digital format. Ontologies serve as the semantic glue between datasets, so MS results can be integrated with NMR, bioassay or LIMS metadata.

“This lack of portability of data has a huge impact on costs as it decreases reproducibility. It also has a time cost, of course, tying up equipment and people for longer than is needed.”

For Kevin McConnell, Genomic Informatics Lead at LifeMine Therapeutics, vendor-specific file formats are the biggest challenge.

“At LifeMine, we deal with metabolomic data, dealing with small molecule natural products,” he explains. “We’ve been working on extracting secondary metabolites from fungi as new therapeutics for various diseases.

“We have sequenced more than 100,000 fungal genomes in our collection. We select strains of interest to go through fermentations, where we perform transcriptomics, then metabolomics, collecting LC/MS data.

“A lot of our initial work was very targeted. We’re looking for biosynthetic gene clusters (BGC), in particular genomes, to see if we can mine its secondary metabolite. As a result, we’re starting to build up this huge database of mass spectrometry data. Initially, it was all tied up in vendor-specific file formats, but we saw an opportunity to interrogate these datasets to be able to look for metabolites across all of our fungal genomes, not just for the targeted BGC.

“We have many thousands of mass spectrometry runs, so we undertook a process of data conversion, using tools like MSConvert and MZMine, to get the data into a common format that we could then load into a database.

“Once in the database, we used embeddings and strings of binned m/z (mass-to-charge) intensities of MS2 spectra to make that data searchable with cosine similarity. After that standardisation, we’ve been able to search across hundreds of experiments and tie together our work in genomics, transcriptomics and metabolomics to identify the secondary metabolite from a BGC.

“It was a fantastic project; it took a long time, but we worked with the analytical chemists to help them understand why we were doing it.”
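To make the search idea McConnell describes more concrete, here is a minimal Python sketch of cosine similarity over binned MS2 spectra. It is an illustration under stated assumptions, not LifeMine's implementation: the function names, the 1.0 m/z bin width, the 2,000 m/z cut-off and the example peak lists are all hypothetical, and the spectra are assumed to have already been converted from vendor formats into simple (m/z, intensity) pairs.

```python
import math


def bin_spectrum(peaks, bin_width=1.0, max_mz=2000.0):
    """Sum peak intensities into fixed-width m/z bins.

    `peaks` is an iterable of (mz, intensity) pairs. The result is a sparse
    vector stored as {bin_index: summed_intensity}. The bin width and the
    m/z cut-off are illustrative assumptions, not values from the article.
    """
    binned = {}
    for mz, intensity in peaks:
        if 0 <= mz < max_mz and intensity > 0:
            idx = int(mz // bin_width)
            binned[idx] = binned.get(idx, 0.0) + intensity
    return binned


def cosine_similarity(a, b):
    """Cosine of the angle between two sparse binned spectra (0.0 to 1.0)."""
    dot = sum(value * b.get(idx, 0.0) for idx, value in a.items())
    norm_a = math.sqrt(sum(value * value for value in a.values()))
    norm_b = math.sqrt(sum(value * value for value in b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # an empty spectrum matches nothing
    return dot / (norm_a * norm_b)


def search(query_peaks, library, top_n=3):
    """Rank library spectra by similarity to the query, best match first.

    `library` maps a run name to its list of (mz, intensity) peaks.
    """
    query = bin_spectrum(query_peaks)
    scored = [
        (name, cosine_similarity(query, bin_spectrum(peaks)))
        for name, peaks in library.items()
    ]
    return sorted(scored, key=lambda item: item[1], reverse=True)[:top_n]


if __name__ == "__main__":
    # Made-up peak lists, for illustration only.
    library = {
        "run_a": [(100.2, 10.0), (250.1, 20.0)],
        "run_b": [(400.0, 5.0)],
    }
    for name, score in search([(100.4, 9.0), (250.3, 21.0)], library):
        print(f"{name}: {score:.3f}")
```

In a real deployment, the binned vectors would typically be computed once at load time and stored alongside the run metadata, so that a query only needs to be binned and compared rather than re-reading every raw file.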

Consider the 'why'

To understand the challenges, you have to start with clarifying why you’re researching your particular area, according to Sebastian Klie, CEO of biotech company Targenomix (now part of Bayer).

“We are a small research organisation,” he says, “with around 40 people focusing on systems biology. We cover all of the omics technologies – transcriptomics, proteomics and, in particular, metabolomics. For the latter, we conduct targeted analysis of small molecules or drugs, as well as performing untargeted metabolomics to assess alterations of metabolic pathways in vivo.

“The ‘why’ is the most important consideration for anything we do: in our research, we need to be able to assess and contextualise every data point that we ever generated. These are in the millions, for the tens of thousands of samples we’ve generated over 15 years. We’re still able to analyse those first data points and compare them to the last data point generated.

“As a small company, so far this has worked well and has been cost-effective. The challenge comes with enterprise scalability of centralised data storage, particularly if there are mergers and acquisitions that may require the integration of heterogeneous data landscapes into larger organisations. In this case, you may need to revisit policies and set up new standardised ways of working, often resorting to storing processed data rather than raw data.

“The biggest challenge to me is clearly about metadata, because normalisation of data is key, particularly in mass spec. If you are performing quantitative analysis, you want to understand confounding effects in your experimental setup.”

Our panel also discussed ways to overcome these challenges, from successful standardisation to data policy development. To read more, download the full report here.