With exascale fast approaching, the HPC community is now looking to the last generation of pre-exascale supercomputers to see what architectures, tools and programming models can deliver before the first exascale systems are installed in the next three to four years.

While we do not know how far developers will be able to push application performance, it is possible to derive insight from the latest generation of flagship HPC systems, such as those of the Collaboration of Oak Ridge, Argonne, and Lawrence Livermore (CORAL). The first of these systems, Summit, installed at the Oak Ridge National Laboratory, is already breaking records for HPC and AI application performance.

The CORAL systems being installed in the US National Laboratories, similar-sized systems in China and the development plans for a European exascale system are already shedding light on what the architectures and programming models will look like on the first generation of exascale supercomputers.

Many of the choices on hardware have already been put in place, with development well under way. It is likely exascale systems will take a similar path, because to start again from an architectural perspective would require a huge amount of work to prepare both the software and tools running on the next wave of leadership-class supercomputers.

In order to deliver real exascale application performance on these systems, it is important that the compilers and other software tools are already well understood by the user community. The applications must also be tested on similar architectures. While these may be an order of magnitude smaller in scale, developers can use them to test their applications before they are given access to a full-scale system.

Jack Wells, director of science at Oak Ridge Leadership Computing Facility, explains that the team behind the Summit system have gained insight from past system upgrades that maintaining as much of the programming model as possible helps to accelerate the move from one system to another.

‘When we jumped to Titan it was a pretty significant change. It was much newer then and, when we made the decision in 2009 and got the machines all pulled together in 2012, it was harder to use the tools; the compilers and the programming approaches were much less mature. Even working just on Titan, things got easier over time, so you could accomplish these things in marginally less time.’

Some of this difficulty was down to the timeframe and the relatively recent introduction of GPUs to HPC systems, which meant that the tools and the user expertise had not matured sufficiently. Even so, the team behind the Summit system aimed to keep the programming model as similar as possible, to help accelerate uptake of the system by the user community.

‘We have tried to keep the programming model as much the same as we could when moving from Titan to Summit. Of course, we don’t have a Cray compiler on Summit, we have an IBM compiler but we brought PGI along with us and that was available on Titan. We have tried to keep the transition of the programming model as easy as it could be.’

Wells stressed that, even with the correct tools, HPC is not easy per se. The main aim is to try to reduce the difficulty as much as possible and to provide a familiar environment for the user community to help drive sustainable application development.

‘Those are the kind of things that we need to concern ourselves with. Sustainability of applications software and programming models on our supercomputers,’ concluded Wells.

Co-designing the next generation of supercomputers

The development of exascale systems has been focused on co-design for a number of years. European efforts such as the Mont Blanc project, which explored the use of Arm-based clusters, have formed the foundations for the European Processor Initiative (EPI). In the US, projects such as FastForward and FastForward2 have looked at different aspects of HPC hardware and programming, such as processors, memory and node architecture for future supercomputing platforms. It is clear that no one company or government organisation can design a modern supercomputer. In order to continue to drive innovation, energy efficiency and performance, there needs to be considerable effort placed into partnerships between academia and research organisations, hardware companies and application developers, funded through government-led projects that help subsidise the cost of exascale development.

The development of the CORAL systems, specifically Summit, has been no different. Geetika Gupta, product lead for HPC and AI at Nvidia, explains the importance of collaboration between Nvidia and Oak Ridge in designing Summit and influencing the development of GPU technology.

‘Oak Ridge and some of the other partner labs started exploring the use of GPUs way back in 2008 or 2009. At the time they started with some cards they felt that they had the right level of parallelism. Some of the life sciences codes, such as Amber, can take advantage of the compute cores available as part of the GPUs,’ said Gupta.

‘In 2013 they installed the first GPU-based supercomputer called Titan. That was based on the Kepler GPU architecture. I was the product manager at that time for the Kepler series of products, so I was closely involved with the Titan deployment in the 2012/2013 timeframe,’ added Gupta.

Since that time, Nvidia has been continually involved with Oak Ridge and the Oak Ridge Leadership Computing Facility (OLCF), working to understand the requirements of future systems and the applications used by the user community. Gupta stressed that this feedback on applications and workloads helps to inform what Nvidia should be building in future generations of GPU architecture.

‘When the time came to come up with the follow up to Titan, Nvidia was closely involved. The way they had described the workloads, we saw that there was a need to increase GPU-GPU communication. In 2014 when we started thinking about the next system, we knew that we needed a fast GPU-GPU interconnect and that formed the basis for NVLink,’ said Gupta.

‘We continued to work with them and analyse the workloads to find the bottlenecks and see what new things were emerging, and that helped to influence the basis of the designs for the Volta GPU architecture,’ stated Gupta.

However, not all trends and changes in the development of computing can be neatly predicted years ahead of time. Early machine learning and deep learning methods have been around since the 1950s, but the convergence of data availability, GPU acceleration and algorithmic improvements has led to the explosion of deep learning across science and industry in the last few years.

Nvidia supplied much of the hardware behind this growth with its GPUs, so it made sense for the company to add hardware specific to deep learning that would accelerate these applications. This led to the development of tensor cores, which are now included in the latest Nvidia GPUs, including the Tesla V100 that is used in the Summit system.

Adding new hardware to the HPC toolbox

‘While all this was happening we could see that AI and deep learning was also emerging as one of the primary tools to analyse large amounts of data, and that was the reason that we decided to include tensor cores in the Volta GPU architecture,’ said Gupta. ‘Now you can see how the V100 can be used to enable computational science and they have support for tensor cores, which can be used to assist scientific computation with AI and deep learning.’

‘CUDA cores are great for doing FP64 based matrix multiplication but there is a lot of work that can be done at lower precision, so the tensor cores have been added to the Volta GPU architecture to optimise that lower precision computation,’ added Gupta.

Gupta explained that the tensor cores take half-precision floating-point format (known as FP16) as an input. Tensor cores carry out operations such as matrix multiplications and accumulate the results in single-precision floating-point format (FP32). ‘The data pipeline for the tensor cores has been designed to do these operations much faster, just because of the way that the cores are fed data. They can do almost 12 times the operations that a CUDA core would be able to.

‘It is quite interesting that a scientific workload on one single GPU architecture can get a mixed precision computation. They can decide where they want to use low precision for coarse grain analysis, and then [use higher precision] in some of the later iterations,’ stated Gupta.
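The FP16-input, FP32-accumulate arithmetic Gupta describes can be illustrated with a minimal NumPy sketch. This is an emulation on the CPU under stated assumptions, not Nvidia's implementation: the function names are hypothetical, the matrix sizes are arbitrary, and nothing here reflects tensor core speed. It only compares the rounding error of accumulating in FP32 against accumulating in FP16, using an FP64 product as the reference.

```python
import numpy as np


def matmul_fp16_in_fp32_acc(a, b):
    """Round inputs to FP16, then multiply and accumulate in FP32.

    Hypothetical helper: mimics the numeric format of the scheme
    described above, not the hardware data path.
    """
    a16 = a.astype(np.float16)
    b16 = b.astype(np.float16)
    return a16.astype(np.float32) @ b16.astype(np.float32)


def matmul_fp16_acc(a, b):
    """Round inputs to FP16 and keep the running sum in FP16 as well."""
    a16 = a.astype(np.float16)
    b16 = b.astype(np.float16)
    out = np.zeros((a.shape[0], b.shape[1]), dtype=np.float16)
    for k in range(a.shape[1]):
        # Each rank-1 update and each partial sum is rounded to FP16.
        out += np.outer(a16[:, k], b16[k, :])
    return out


def max_rel_error(approx, reference):
    """Largest absolute error, scaled by the largest reference entry."""
    diff = np.abs(approx.astype(np.float64) - reference)
    return float(np.max(diff) / np.max(np.abs(reference)))


rng = np.random.default_rng(0)      # fixed seed so runs are repeatable
a = rng.random((64, 512))           # arbitrary sizes, chosen for illustration
b = rng.random((512, 64))
reference = a @ b                   # FP64 reference product

result_fp32_acc = matmul_fp16_in_fp32_acc(a, b)
err_fp32_acc = max_rel_error(result_fp32_acc, reference)
err_fp16_acc = max_rel_error(matmul_fp16_acc(a, b), reference)

print(f"FP16 inputs, FP32 accumulate: {err_fp32_acc:.2e}")
print(f"FP16 inputs, FP16 accumulate: {err_fp16_acc:.2e}")
```

In this sketch the FP32 accumulator should keep the error close to what the FP16 input rounding alone introduces, while the FP16 accumulator loses further accuracy as the running sum grows. That is one reason a workload might use low precision for a coarse pass and reserve higher precision for later iterations.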

‘People are looking at AI and deep learning as a new tool in their toolbox. It’s not something that is going to replace the existing way of doing scientific computation, but it is definitely a new tool that they can use to do certain types of workloads and speed up the process. The other reason is the amount of data that scientists need to analyse. It takes much longer if you are just relying on traditional methods,’ Gupta concluded.

Getting applications up and running on Summit

As the Summit supercomputer has now been installed and is awaiting full acceptance, the OLCF has been inviting application users onto the system to test application performance and help the team to remove any bugs ahead of full production at the beginning of 2019.

The OLCF has several programmes to develop and test applications for new and existing users. The Innovative and Novel Computational Impact on Theory and Experiment (INCITE) programme is one example of the US Department of Energy’s efforts to allocate computing time to users across the globe.

The OLCF also runs the Application Readiness Program and the Director’s Discretionary (DD) Program, among other projects, to help new and existing users obtain access to the Summit system.

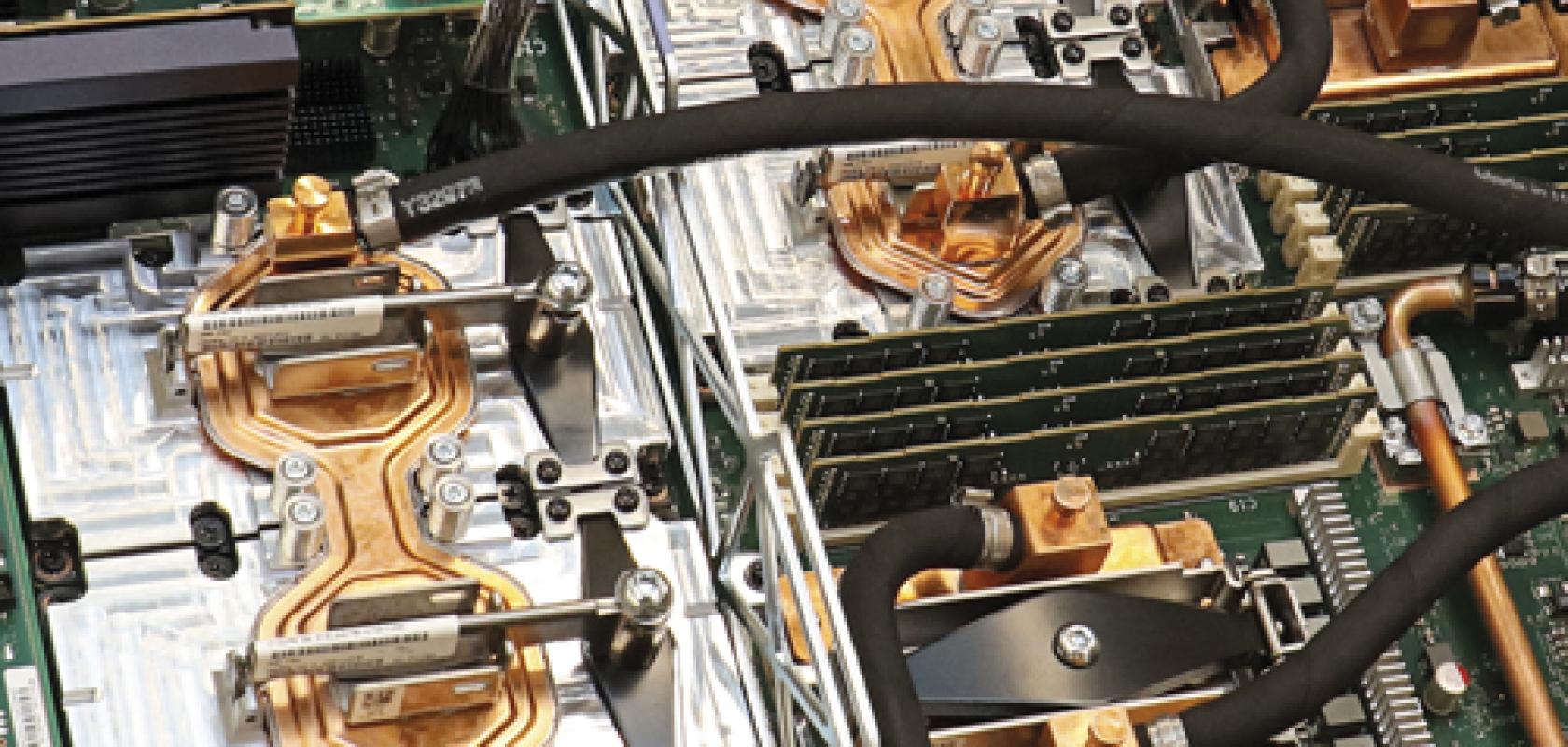

Oak Ridge also invited 13 projects to be part of its Center for Accelerated Application Readiness (CAAR). A collaborative effort of application development teams and staff from the OLCF Scientific Computing group, CAAR is focused on redesigning, porting and optimising application codes for Summit’s hybrid CPU–GPU architecture. As well as giving users early access to explore the architecture, CAAR allows them to receive technical support from the IBM/Nvidia Center of Excellence at Oak Ridge National Laboratory.

‘Our commitment is to be able to start the INCITE user programme in January. Our plan is to accept the full system soon and then have the fourth quarter of the calendar year for an early science period. This will allow us to get some additional hero users on there, to knock the cobwebs out for the system but we will start INCITE in January. Those proposals are being evaluated now.’

Wells stressed that it is important that many of the users come from different application areas but also from different institutions, from both the US and other nations, in addition to catering to US national interests. While INCITE looks at developing a broad community of users, other programmes focus more on US interests.

‘The big user programmes are INCITE [and the] ALCC programme. When the leadership computing facilities were established, [it was decided that] the resources should be allocated based on merit, and it should be available to US industry, universities, national laboratories and other federal agencies. The money comes from the DOE Office of Science programme but the user base is much broader than that.’

‘The DOE implements this complicated combination of programmes through its “user facility model”, with the same business model that it would use for the light sources, nanoscience centres or the Joint Genome Institute. It makes it available to the world so it is available to international competition and we do that through the INCITE programme,’ added Wells.

‘DOE Office of Science programmes have the need for capability computing too, and so they are in the best position to understand those programmatic priorities. They have a programme where they can support projects of interest to the DOE. The INCITE programme does not take the DOE’s interests per se; we don’t consider that. It is based on scientific merit as determined by peer review.’

These programmes provide many options for users to gain access to Summit or the other national laboratory computing systems, but Wells explains that, for new users, the DD programme is usually the first step.

‘You get started with the DD programme because the responsibility for that is given to me and the team that I lead, so that is part of my job. A lot of people do this role but I am involved in getting users started on the machine,’ stated Wells.

‘We have three goals for our DD programme, one is to allow people to get preliminary results for these other user programmes I have mentioned, because it is competitive and they need results to show and strengthen their proposal,’ added Wells. ‘Then we do outreach to new and non-traditional use cases. In the past we didn’t have so much data analytics and AI and, in order to get people started in these areas in 2016 and 2017, we gave several small allocations to test out their workflows and to try out the codes. This allows them to get some performance data and maybe write us a few introductory papers.’

Wells noted that the third use of the DD programme is to help support local teams that need help in order to get a further allocation, or to support other work done at the DOE or national laboratories. ‘We use a small amount of this time to help people get started at the lab. Maybe we have a new hire or an internally funded project that hasn’t had time to compete for a big allocation on a supercomputer. We will support a local team in that way.’

Application readiness

Many of the so-called ‘hero’ users and their applications were given access to the Summit system through the Application Readiness Program. This is key to the OLCF, as it gives an indication of the kind of applications that will be capable of using the entire system.

‘The majority of those projects have so far been able to demonstrate that they can use around a thousand or two thousand nodes. Eventually, almost all of the projects will be able to use the whole machine but they have not demonstrated it yet, because they have not yet been given access to the whole machine,’ said Wells.

‘We did open up broader access to an early science call for proposals, where we had 64 teams ask for access. We tried to prioritise their early access also, as this enabled them to get some results.

‘Not everybody got equal access, so it’s not like it was a fair thing but we wanted to get as many people on, as early as we could, in the middle of all the development activities. From this early work, a set of them wanted to go for the Gordon Bell Prize. That visible competition is something that we want to support, so we really enabled those teams to have even more access,’ added Wells.

‘We had something like seven teams submit papers for the Gordon Bell Prize and, as we recently announced, five of them were finalists,’ noted Wells. He also stressed that this is a huge achievement, considering that the Summit system has not yet been through full acceptance and still has a number of bugs. The teams were also using the test and development file system, as the full storage system has not yet been accepted.