Using the initial bank account tutorial model, this shows SIMILE's modelling screen (top left), the tabbed execution screen with plotter showing (bottom left) and the flow equation box with keypad, sketch graph pad, etc, (right).

With the above question, 14-year-old Sophie Amundsen (protagonist in the teenage best seller Sophie's World) is invited to consider the atomic nature of matter as the first step in a journey beyond the limits of her imagination. Lego (or Meccano, or any similar construction toy) can be an equally powerful metaphor for other ideas, scientific and otherwise, leading the way to other journeys. Modular reusability has always been a virtue and a refuge from insanity, at least as far back as we can peer into human history and probably long before that. And if some of the bits can be left assembled into higher level substructures, reusable later, so much the better.

In practice, the lesson often seems to be forgotten, either through sloth or in the rush for go-faster stripes. So does the principle that the idea of the model must never be confused with either its implementation or the reality which it models. For me, at least, relearning those lessons in relation to mathematical modelling has always been a periodic necessity; enter, at this point, Professor Muetzelfeldt of Edinburgh University with a particular viewpoint and a spin-off company (Simulistics) offering a product (SIMILE) to support it. This is the best bit about writing for Scientific Computing World – I'm regularly taken in hand and shaken out of my groove with little say in the matter, which keeps me fresh. Professor Muetzelfeldt responded to my exploration of modelling possibilities in a clutch of reader-nominated products, in particular the use of Atebion for a predator/prey demonstration. So, for the best part of three months, I've been using SIMILE for my own purposes and working through the philosophy which underlies it.

Way back in the days when a large organisation paid me good money to sit in a windowless room modelling sociomilitary scenarios, this principle manifested itself as a pile of battered cardboard panels carrying rectangular or hexagonal grids under transparent stickyback plastic film. Mainframe computers were available but time on them was scarce, failures and errors frequent. My cardboard panels were a more efficient way to initially explore and structure a problem.

The particular class of problem to which those scenarios belonged goes by various names, of which the one used in my context at the time was 'ekistic' – with 'fuzzy' and 'messy' as alternative descriptors. Much of the groundwork thinking had been done by C A Doxiadis or others associated with his theories of human habitation, which accounts for the word, but they are not by any means limited to such areas. I've recently seen an ekistic model of corporate development within the global internet context. A couple of years ago I built one in pursuit of puzzling fault clusters within a factory, though I am more usually concerned with populations of organic entities (human or otherwise). A historian of my acquaintance is using one to investigate the collapse of an ancient empire, while in a nearby building a chemist similarly investigates the collapse of a new aircraft.

Progressive stages in the life of a SIMILE modelled arboreal community, each tree being a separate instance of the same single submodelled tree.

A typical candidate for ekistic modelling will contain a large and sprawling context where quite a lot of the picture can safely be painted using a broad brush, but some parts need greater detail. There will be layering, probably, but not necessarily, hierarchic. The submodelling of the detail will often be of a different type from, but closely interacting with, that of the larger context. Ekistic modelling is usually exploratory and often fast changing – a different kettle of fish from the sort of operational modelling that Nick Morris described in the last issue of Scientific Computing World. The work will often be done by disparate individuals or teams, separated by both geography and professional background, perhaps even unaware of each other until late in the day. All of this argues for a transparent, copiously documented modelling medium in which building blocks, assembled substructures and connective processes can be identified at a glance by a stranger who wishes to learn from, or carry forward, the work already done. Reality is often very different.

Professor Muetzelfeldt argues that there is an approximate evolutionary hierarchy in modelling, and I have been comfortably dozing roughly half way up it. My own interpretation of it runs something like this.

At the bottom, in the primordial slime, so to speak, is the home-coded ad hoc program. Such a program carries out the task or tasks for which it was designed, but is almost devoid of value beyond that. Reading it is difficult and reveals only what was done, not why. The first step upward from there was structured, then object-oriented, programming. This was a great improvement, but still remained at root an executive tool rather than a conceptual one. Reading someone else's model still required determined work which made critical comparison rarer than it ought to have been. Advances in computer-aided software engineering (CASE) methods then helped to reinforce modularity and encouraged an increased degree of abstraction.

Though not mentioned in Professor Muetzelfeldt's taxonomy, computer algebra packages were a great advance on pure languages: I've spent many happy days modelling in Maple, Mathcad and Mathematica. Each of them makes noteworthy strides forward in one or more ways – Mathcad, for example, being noteworthy for its emphasis on contextual documentation, and all of them allowing the model to take on an organised 'shape'. Mathematical notations are also inherently more abstract than imperative procedural computer code. Nevertheless, there is still both a gap between conceptual and representational and a blurred line between conception and execution.

The exemplar SIMILE implementation of a blank hexagonal modelled world canvas generator (example in background) illustrating how complexity of terrain can be modelled simply using the single submodel technique.

Next up (Professor Muetzelfeldt allocates this to level four; I've pulled it back to position three) came software such as Matlab or Scilab, specifically designed to hold, represent and manipulate numerous matrices of significant size, with well developed simulation routines and modules. These could replace my cardboard panels with a virtual analogue; conversion of the hexagonal ones to rectangular arrays with polar coordinates is a trivial matter (though some intuitive visual clarity is lost) and the programmable calculator is replaced by scripts. Sysquake, using a closely compatible language, adds an interface designed from the ground up to support exploratory simulation, its graphics control positively encouraging such mechanisms as slider inputs on the same panel as output summaries. An added advantage is that useful portions of a model can be taken into the field, or built there and brought home for incorporation, using such products as MtrxCal (small, user-friendly, ideally suited to Matlab/Scilab users), PDAcalc Matrix (a module within a general calculator) or Lyme (an almost complete port of Sysquake's own mathematical engine). Some determination is still required for anyone else who tries to use or build upon what I've done. Documentation has to be stored either in an equivalent of my old ring binder (usually a text file or window) or as verbose commenting of script code. Exploratory 'playing around' with my structures is less easy than it could be, for similar reasons. Comfortable and productive though I am with this true descendant of my cardboard panels, it has its limitations.

At level four come component-based integrated modelling frameworks. These have many of the same characteristics as the script-driven matrix approach, if only in the same way as a mouse shares the same mammalian characteristics as a whale.

Moving away from the imperative procedural to a declarative basis, level five brings visual modelling in CASE style environments such as ModelMaker, Powersim, STELLA, Vensim, and so on. Restricted palettes of Lego-like components build into visual models akin to cognitive maps. The shift from procedural to declarative encourages a design process approach, conceiving the model before taking or defining actions. This is perhaps modelling's equivalent of the agrarian revolution: shifting from a hand-to-mouth hunter-gatherer outlook to a strategic, future-based approach. In the human mind, models are held as ideographic concept blocks and the relationships between them, and not as instruction sequences; declarative modelling echoes that fact within the machine implementation. These products are all excellent places to be; I've done a lot of useful collaborative work in STELLA.

Thanks to the advent of UML (Unified Modelling Language, from the Object Management Group consortium) there is now a high degree of consistency in methods, notation and graphic representation at this level. That's important for many obvious reasons, but in particular it favours the next evolutionary step: opening up the model to distributed development and implementation.

Running a model once it has been built is, of course, important, but doing it in the same environment is not. The design and execution phases have been conceptually divorced at level five, but they typically remain bound together by a proprietary format. At the point of generation, this may not seem to matter; I have the package in which I did the development, and have saved the results in its format, so running it there seems the obvious thing to do. But there are two drawbacks. The first comes when I want to pass my work around, have it tested by others, and compare it with work done elsewhere. The second drawback is that my model in proprietary format can only be used within the range of tools provided by the package which saved it.

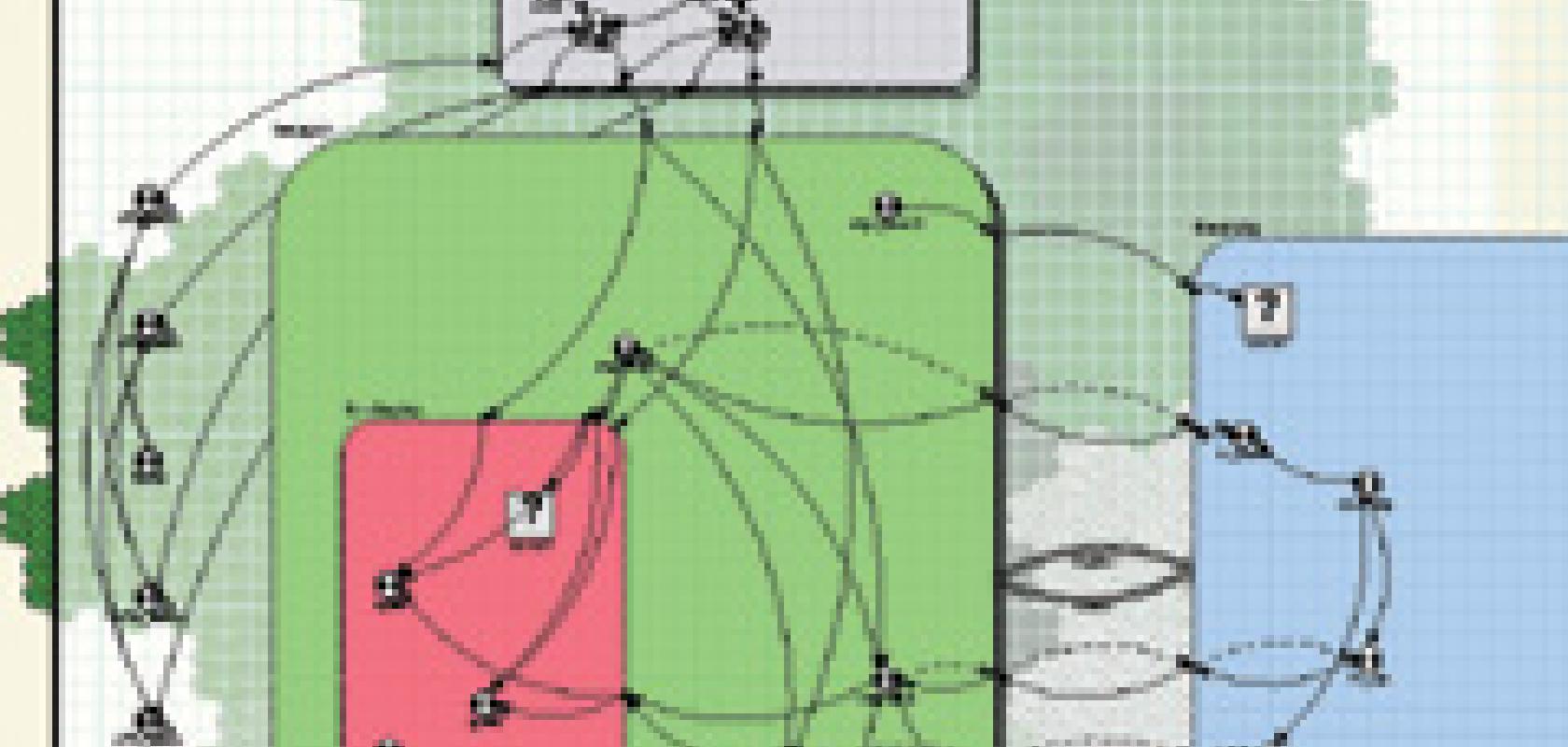

A new ekistic model is begun in SIMILE. The basic multilevel submodel structure has been sketched out, broad-brush, and the first details added.

The solution, evolutionary level six, is obvious in principle once stated: to separate the design and execution phases completely and store the first in open formats. The exact form in which the built model is saved doesn't, in principle, matter so long as it is open, rich enough to support the necessary range of content, and symbolically accessible. In practice, though, if it is to catch on it should be in a format that has already been established. Professor Muetzelfeldt favours XML, and this makes a lot of sense in many ways as the web expands machine semantic interlinkedness.

Different groups using the same storage format allow the potential for interaction to rise combinatorially. Uses to which the model may be put are no longer limited to the original objectives of those who built it; the means by which it may be interrogated no longer restricted to those provided in the generating package; the potential for comparison, interaction, modification and review hugely expanded. As the model base grows, so will the available tools and, in a positive feedback loop, the number of users. After a critical point, there is a new de facto standard. So runs the theory, anyway; and I'm a pragmatic, hands-on model builder and user for practical ends, not a computer scientist or AI expert, but over the past months I confess to having been convinced in principle.

Getting down to the nitty gritty, I put SIMILE to practical use on four groups of models: tutorial examples, archived work, small current projects, and the beginnings of a large-scale cooperative task. The building of models is straightforward and suitably Lego-like, with flows, containers, variables and influences dropped onto a quadrille sheet. Anyone who has used the other current generation modelling products I've mentioned will be right at home and working productively from the word go, though the efficiently designed tutorial is, nevertheless, time well invested.

The tutorial starts from first principles, with a simple bank account accumulating interest as the first example. Executing this provides a curve rising cheerily towards infinity; adding new components to produce something more depressingly similar to my own bank account took only a few clicks and a couple of seconds before moving on to other, more interesting territory.
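For readers who have not met the compartment-and-flow idea before, the bank account can be sketched in a few lines of Python. This is only an illustration of the concept, not SIMILE's own method: the function name, the parameters and the simple Euler stepping are all my assumptions.

```python
def simulate_account(balance, interest_rate, years, dt=0.1, spending=0.0):
    """Step one compartment (the balance) forward with simple Euler integration.

    All names here are hypothetical; SIMILE itself is driven from a diagram.
    """
    history = [balance]
    steps = round(years / dt)
    for _ in range(steps):
        interest = interest_rate * balance  # inflow, proportional to the balance
        net_flow = interest - spending      # outflow is a fixed amount per year
        balance += net_flow * dt
        history.append(balance)
    return history


# Interest only: the curve climbs without limit.
growing = simulate_account(100.0, 0.05, years=10)

# Add an outflow larger than the interest and the balance drains instead.
draining = simulate_account(100.0, 0.05, years=10, spending=8.0)
```

The second call corresponds to the "few clicks" step: one extra flow out of the same compartment is enough to turn growth into decline.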

Harking back to the use of Atebion last time, I was particularly interested in the modified predator/prey example. Already more realistic than the pure classic form, this proved almost trivially amenable to stochasticisation using the built-in random number generators rand_const() and rand_var(). As a nice touch, illustrative of the overall quality and attention to detail, old models which contain a now obsolete rand() function are intelligently interpreted depending on usage.
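A rough Python sketch of what stochasticising a predator/prey model involves is given below. It uses the classic Lotka-Volterra equations rather than the modified SIMILE example, and every coefficient is invented for illustration. The split between a value drawn once per run and a value redrawn at every time step is my reading of the names rand_const() and rand_var(), not something checked against the SIMILE documentation.

```python
import random


def run_predator_prey(seed, steps=2000, dt=0.01):
    """Euler-stepped Lotka-Volterra model with two kinds of randomness."""
    rng = random.Random(seed)

    # Drawn once and then held fixed for the whole run (assumed per-run constant).
    prey_growth = rng.uniform(0.9, 1.1)

    prey, predators = 10.0, 5.0
    trajectory = [(prey, predators)]
    for _ in range(steps):
        # Redrawn at every time step (assumed per-step variable).
        predation = 0.1 * rng.uniform(0.8, 1.2)

        d_prey = prey_growth * prey - predation * prey * predators
        d_pred = 0.5 * predation * prey * predators - 0.5 * predators

        # Populations cannot go negative.
        prey = max(prey + d_prey * dt, 0.0)
        predators = max(predators + d_pred * dt, 0.0)
        trajectory.append((prey, predators))
    return trajectory


run_a = run_predator_prey(seed=1)
run_b = run_predator_prey(seed=2)
```

Running with several seeds gives a family of trajectories rather than a single curve, which is the practical point of the exercise.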

Another example develops the Lego principle to submodelling: instances of one platonic tree, seeding or dying at different times and locations, generate a dynamic time-lapse woodland. This one passed my personal 'year-six test' for clarity and accessibility of software, capturing the interest of, and being understood by, 10-year-old children who then built simple models of their own. The trees provide a first glimpse of how the Lego principle can be applied in a big way – or perhaps I should really say a small way, since it effectively reduces a structure of any size to the specification of a single brick and the rules for its replication.
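The single-brick idea translates naturally into code: one definition of a tree, plus rules for when instances appear and disappear. The sketch below is a hypothetical Python analogue, with made-up seeding probabilities, ages and dispersal distances; it is not taken from the SIMILE example.

```python
import random
from dataclasses import dataclass


@dataclass
class Tree:
    """The one 'platonic' tree; every tree in the wood is an instance of it."""
    x: float
    y: float
    age: int = 0


def step_woodland(trees, rng, seed_prob=0.1, mature_age=10, max_age=80):
    """Advance the whole woodland by one year."""
    survivors = []
    seedlings = []
    for tree in trees:
        tree.age += 1
        if tree.age > max_age:
            continue  # this instance dies and is dropped
        survivors.append(tree)
        if tree.age >= mature_age and rng.random() < seed_prob:
            # A new instance of the same submodel appears nearby.
            seedlings.append(Tree(tree.x + rng.uniform(-5, 5),
                                  tree.y + rng.uniform(-5, 5)))
    return survivors + seedlings


def grow_woodland(years, seed):
    """Start from one mature tree and run the woodland forward."""
    rng = random.Random(seed)
    woodland = [Tree(0.0, 0.0, age=10)]
    for _ in range(years):
        woodland = step_woodland(woodland, rng)
    return woodland


woodland = grow_woodland(years=50, seed=42)
```

Nothing in the code describes the woodland as such; it emerges from the one `Tree` definition and the replication rule.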

A simple model (a population pyramid) showing Sysquake's exploratory environment with input sliders and graphical output.

Built on that same principle is the hexagonal grid model. Anyone who has ever modelled geospatial movement needs no explanation of that: hexagonal tessellation provides a much more sophisticated and realistic representation of two-dimensional relations than a square chessboard arrangement for only a modest increase in handling complexity. The implementation here is taken from the HOOFS framework, but can be applied to anything from ants (there's an ant model on the SIMILE site) to an urban guerrilla engagement or the spread of bird 'flu. The full gamut of layered 'boards' is produced by replicating a single archetype hexagon submodel at different geospatial coordinates and definitional levels. The presence of a sublayer beneath any given hexagon is denoted by a parent/child flag, so detail can be included, omitted or added later as required without disturbing the structure. This ability to switch detail on or off at will is essential for a fully flexible ekistic model: ants, guerrillas or birds will not be active in the same place tomorrow or next week as they are now, and the modelling sparseness or density distribution must reflect the fluidity without requiring major reconstruction. I regularly have to model agricultural pyramid networks (usage, wild and domesticated animal populations, ownership, irrigation and geography, arable conflicts, and so on), and the flexible power here makes it a dream.
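The modest handling cost of hexagons can be seen in a short Python sketch. It uses axial coordinates, a common convention for hexagonal grids, and an optional `sublayer` attribute standing in for the parent/child flag. The class and function names are hypothetical and this is not the HOOFS or SIMILE implementation.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

# The six neighbour offsets of a hexagon in axial (q, r) coordinates.
AXIAL_DIRECTIONS = [(1, 0), (1, -1), (0, -1), (-1, 0), (-1, 1), (0, 1)]


@dataclass
class HexCell:
    """The single archetype hexagon, replicated at every grid position."""
    q: int
    r: int
    # None means no finer layer is modelled beneath this cell.
    sublayer: Optional[Dict[Tuple[int, int], "HexCell"]] = None

    @property
    def has_children(self):
        return self.sublayer is not None


def neighbours(q, r):
    """Coordinates of the six cells adjacent to (q, r)."""
    return [(q + dq, r + dr) for dq, dr in AXIAL_DIRECTIONS]


def hex_distance(a, b):
    """Number of hexagon steps between two axial coordinates."""
    dq, dr = a[0] - b[0], a[1] - b[1]
    return (abs(dq) + abs(dr) + abs(dq + dr)) // 2


def make_board(radius):
    """A hexagon-shaped board of cells within `radius` steps of the origin."""
    return {
        (q, r): HexCell(q, r)
        for q in range(-radius, radius + 1)
        for r in range(-radius, radius + 1)
        if hex_distance((0, 0), (q, r)) <= radius
    }


board = make_board(2)
# Switch on extra detail beneath one cell without touching the rest.
board[(0, 0)].sublayer = make_board(1)
```

Removing detail is the reverse assignment, `board[(0, 0)].sublayer = None`, which leaves the parent board untouched.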

Alongside such underpinning models, and available for download, are 'simile helper' files, extending the Lego principle with add-in expansions of the available display options. The option to write these is available, and Simulistics offer to help – though I've not tried either option, the helpers already available work well.

Ability to easily absorb rectangular matrices as variables didn't, at first, seem to be on the menu, but a little thought showed the limitation to be in how I viewed data objects. Some of my matrices are straightforward data arrays, best viewed as tables either stored or (usually more efficient) initialised from an external CSV 'scenario' file. Others are pairwise relational, translating into SIMILE as sets of one-to-one correspondences between submodels.

Flow values can be entered in a variety of ways. The simplest is just to type in a value or an equation, but sliders are a more intuitive option. For a set of Cartesian data pairs (another matrix type) the sketch graph is more intuitive still: a grid is labelled with maximum and minimum values, an initially flat line is dragged into shape, and abracadabra – the daily activity cycle of an ant, the progressive intensity levels pattern for a riot, or whatever, is in place.
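In code terms, a sketched graph amounts to a lookup table with interpolation between the dragged points. A minimal Python sketch follows, assuming straight-line interpolation and distinct x values; how SIMILE itself interpolates is not something I have checked, and the ant activity figures are invented.

```python
from bisect import bisect_right


def make_lookup(points):
    """Build a function from (x, y) pairs using linear interpolation.

    Outside the sketched range the nearest end value is held.
    Assumes every x value is distinct.
    """
    pts = sorted(points)
    xs = [x for x, _ in pts]
    ys = [y for _, y in pts]

    def lookup(x):
        if x <= xs[0]:
            return ys[0]
        if x >= xs[-1]:
            return ys[-1]
        i = bisect_right(xs, x)  # index of the first point to the right of x
        x0, x1 = xs[i - 1], xs[i]
        y0, y1 = ys[i - 1], ys[i]
        return y0 + (y1 - y0) * (x - x0) / (x1 - x0)

    return lookup


# Hypothetical daily activity cycle of an ant: hour of day -> activity level.
activity = make_lookup([(0, 0.0), (6, 0.2), (12, 1.0), (18, 0.4), (24, 0.0)])
mid_morning = activity(9)  # halfway between the 06:00 and 12:00 points
```

The resulting function can then be used wherever a typed equation would otherwise supply the flow value.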

There's a lot of power on which I haven't touched here, and more still that I haven't explored yet. Does SIMILE represent my messy ekistic models as well as the matrix structures with which I've been happy so far? Yes; once I'd gotten my head around new ways of looking at the models, it does – better and more elegantly, with a clearer view of what's going on. In complex situations it does require more self discipline: slapdash linkages invisible in a matrix algebra package are suddenly revealed as a wire wool pot scourer in a graphic environment, shaming me into a little extra time spent on submodels. Three months with SIMILE has left me much more inclined to practise all those good habits that I constantly preach to my students, and made me more productive when collaborating in other products with similar environments.

Harking back to Professor Muetzelfeldt's arguments on decoupling model construction from execution, I have already discovered the portability advantages of carrying a C++ or PROLOG version of the model with me – neither will run on my Palm machine, but they are easily carried on it, or on a USB flash drive, for demonstration on machines without SIMILE but carrying the necessary compiler or interpreter. One colleague in the Middle East has already extracted a lot of value from my SIMILE models using her own PROLOG tools. Simulistics have an XML Schema for representing SIMILE models with a balance of human readability and machine access efficiency too – though I've not gotten as far as using it, yet. Then again, the free evaluation version of SIMILE can be carried (or sent) with a model, and does everything the standard version does provided that the model remains under the size limit.

In principle, I think it likely that my shift onto a new and more virtuous path is permanent. On the other hand, I still do a lot of work in the field and don't think I can give up the convenience and value of being able to carry model fragments where a laptop cannot go. The extent to which I can evolve ways of using scenario files to integrate portable elements with SIMILE base models will decide the degree to which old habits are abandoned. If I could always be sure of a machine capable of running SIMILE (as most people can), I wouldn't look back; perhaps one day a stripped-down version of the software will be available on a hand-held computing platform.

Sources

| Product | Source | Contact |
| --- | --- | --- |
| Atebion | Atebit | www.atebit.co.uk |
| Lego | The Lego Group | www.lego.com |
| Lyme | Calerga | info@calerga.com |
| Maple | MapleSoft | info@maplesoft.com |
| Mathcad | Mathsoft | sales-info@mathsoft.com |
| Mathematica | Wolfram | info@wolfram.co.uk |
| Matlab | Mathworks | www.mathworks.co.uk |
| Meccano | NIKKO | sales@nikko-toys.co.uk |
| ModelMaker | ModelKinetix | enquiries@modelkinetix.com |
| MtrxCal | ADACS | evert@adacs.com |
| OMG | Object Management Group | www.omg.org |
| PDAcalc Matrix | ADACS | evert@adacs.com |
| Powersim | Powersim Software | powersim@powersim.co.uk |
| Scilab | SciLab matrix software | Scilab@inria.fr |
| SIMILE | Simulistics | info@simulistics.com |
| STELLA | ISEE Systems | info@iseesystems.com |
| Sysquake | Calerga | info@calerga.com |
| Unified Modelling Language | OMG | www.uml.org |
| Vensim | Ventana Systems | vensim@vensim.com |

References

Gaarder, J., Sophie's World : a novel about the history of philosophy. 1995, London: Phoenix. ix, 394p.

Grant, F., A Model Miscellany, in Scientific Computing World. 2006, Europa Science.

Doxiadis, C.A., Ekistics, An Introduction to the Science of Human Settlements. 1968, London: Hutchinson. 528p.

Morris, N., Endangered Engineers. Scientific Computing World, 2006 (Feb/Mar 2006): p. 22-23.

Morris, N., Chemical culture. Scientific Computing World, 2006 (Feb/Mar 2006): p. 14-16.

Beecham, J.A., S.P. Oom, and C.P.D. Birch, HOOFS – a Multi-scale, Agent-based Simulation Framework for Studying the Impact of Grazing Animals on the Environment. 2002, Aberdeen: Macaulay Land Use Research Institute.