Autonomous systems are racing ahead, with strides being made in fields ranging from logistics and agriculture to manufacturing and goods delivery.

The automotive industry also reached a major milestone recently with the introduction of the first Level 3 (L3) autonomous cars to the mass market. There are six levels of driving automation, from L0 (no automation) to L5 (full automation). L3 vehicles offer conditional automation: the vehicle can detect its environment and perform most driving tasks, but a human driver must remain ready to take over when the system requests it.
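The six levels can be written down as a small lookup. The Python below is a minimal sketch that assumes the SAE J3016 names for each level; the two helper functions are hypothetical and only encode the distinction drawn above: up to L2 a human supervises the drive, while at L3 the human no longer supervises but must still be available as the fallback.

```python
from enum import IntEnum


class DrivingAutomationLevel(IntEnum):
    """SAE J3016 levels of driving automation, L0 to L5."""
    L0 = 0  # No driving automation
    L1 = 1  # Driver assistance
    L2 = 2  # Partial driving automation
    L3 = 3  # Conditional driving automation
    L4 = 4  # High driving automation
    L5 = 5  # Full driving automation


def human_must_supervise(level: DrivingAutomationLevel) -> bool:
    """Hypothetical helper: at L0-L2 the human performs or continuously supervises driving."""
    return level <= DrivingAutomationLevel.L2


def human_fallback_required(level: DrivingAutomationLevel) -> bool:
    """Hypothetical helper: up to L3 a human must be ready to take over on request."""
    return level <= DrivingAutomationLevel.L3


print([lvl.name for lvl in DrivingAutomationLevel if human_fallback_required(lvl)])
# → ['L0', 'L1', 'L2', 'L3']
```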

Such breakthroughs would not be possible without modelling and simulation, as Gilles Gallee, Director of Autonomous Vehicle Simulation Solutions at Ansys, explains: “Autonomous Driving (AD) development is complex, but validation of such technology in a mass-market product such as a passenger car is even more complex. Simulation is a must to prove the safety of AD, so industry players (OEMs and Tiers) can implement solid workflows and new methodologies while also complying with ISO standards.”

Ansys works across the automotive supply chain, including with car manufacturers (OEMs), system suppliers (Tier 1), component manufacturers (Tier 2) and parts suppliers (Tier 3). The company implements workflows with model-based systems engineering (MBSE) tools and methods, linked to the automotive company’s internal digital transformation efforts.

Gallee adds: “Such methodology focuses on creating, maintaining, and exploiting models as the primary means of systems analysis and engineering collaboration between different disciplines.

“We apply these principles to the triumvirate of safety by design, safety by verification and validation, and the delivery of incremental safety cases by combining simulation with analysis and scenario at scale for L3 autonomous functions.”

Ansys launched a new product line called AVxcelerate in 2021 to further advance this work. Using AVxcelerate, OEMs and Tiers can combine simulation with statistics and scenario analysis at scale in the cloud. AVxcelerate can also produce a range of safety analyses to prove the safety case when a vehicle or system is going through the homologation process.
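To illustrate what combining simulation with statistics at scale can mean, here is a minimal Monte Carlo sketch in Python. It does not use AVxcelerate or any Ansys API: the kinematic braking model, the scenario parameters and their ranges are all hypothetical. It samples many obstacle-ahead scenarios, checks whether the vehicle stops in time, and reports the pass rate with a normal-approximation 95% confidence interval.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Scenario:
    speed_mps: float        # ego vehicle speed
    obstacle_dist_m: float  # distance to the obstacle when it is detected
    latency_s: float        # perception plus actuation delay
    decel_mps2: float       # achievable braking deceleration


def sample_scenario(rng: random.Random) -> Scenario:
    # Hypothetical parameter ranges, chosen for illustration only.
    return Scenario(
        speed_mps=rng.uniform(5.0, 20.0),
        obstacle_dist_m=rng.uniform(15.0, 60.0),
        latency_s=rng.uniform(0.1, 0.5),
        decel_mps2=rng.uniform(4.0, 8.0),
    )


def stops_in_time(s: Scenario) -> bool:
    # Distance travelled during the latency plus braking distance v^2 / (2a).
    stopping = s.speed_mps * s.latency_s + s.speed_mps ** 2 / (2 * s.decel_mps2)
    return stopping < s.obstacle_dist_m


def estimate_pass_rate(n: int, seed: int = 0) -> tuple[float, float]:
    rng = random.Random(seed)  # fixed seed for a reproducible campaign
    passes = sum(stops_in_time(sample_scenario(rng)) for _ in range(n))
    p = passes / n
    # Normal-approximation 95% half-width of the pass-rate estimate.
    half_width = 1.96 * math.sqrt(p * (1 - p) / n)
    return p, half_width


rate, hw = estimate_pass_rate(10_000)
print(f"pass rate {rate:.3f} +/- {hw:.3f}")
```

In a real workflow each sample would drive a full sensor and vehicle simulation rather than a one-line formula; the statistical wrapper around it stays the same.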

For any Autonomous Vehicle (AV) or Advanced Driver Assistance System (ADAS) to operate, it must also be able to detect and measure its environment. To achieve this, a vehicle needs a range of sensors to augment, or entirely replace, the role of a human driver.

Royston Jones, Global Head of Automotive at Altair, explains: “Operating the vehicle with less, or even without, human interaction can be achieved based on the feedback provided by various types of radar, lidar, camera, ultrasonic, wireless and navigation sensors. Such feedback determines exact positioning in response to high-resolution maps.”

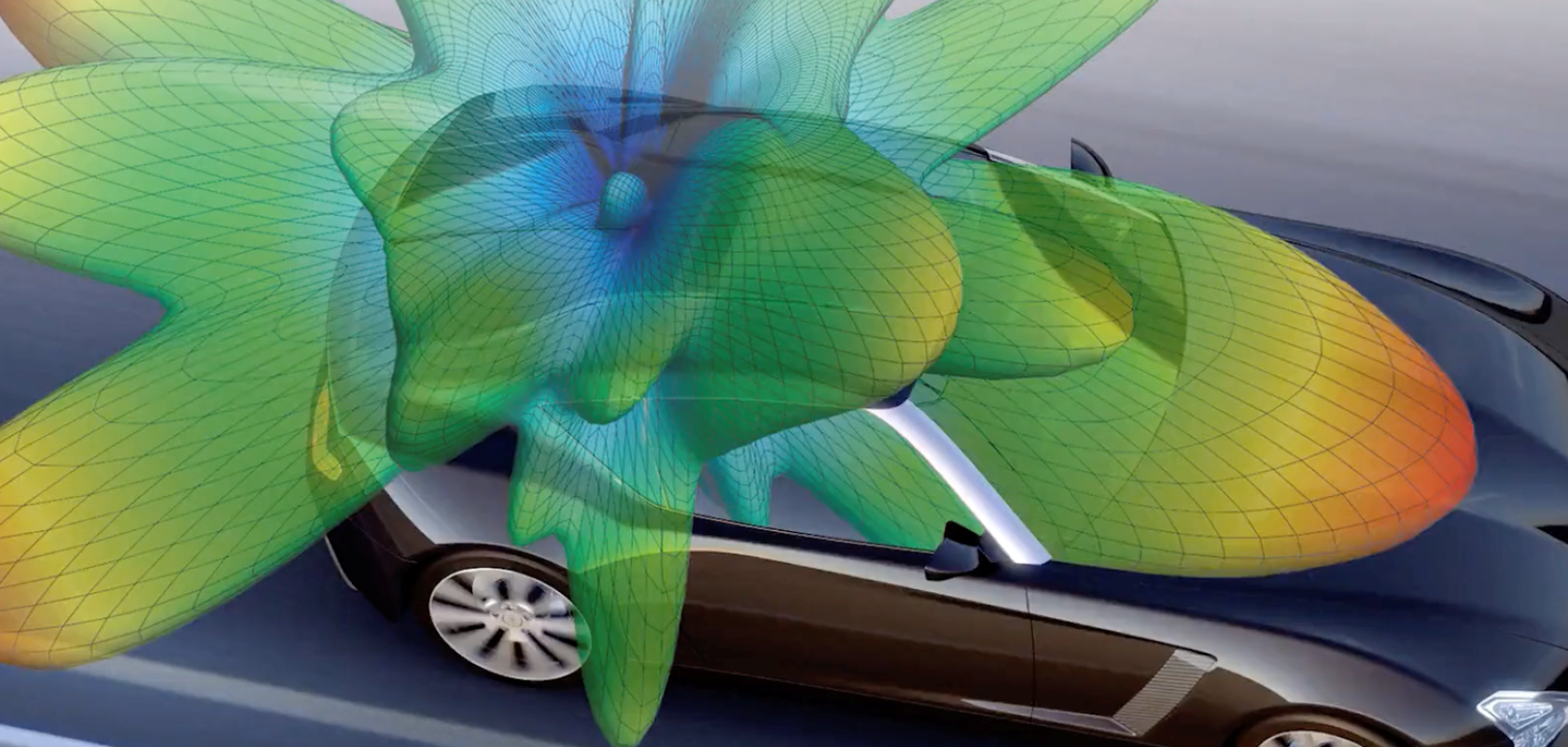

Altair’s advanced simulation solutions support the design and development of such sensors, improving the performance of these autonomous systems and ensuring their integration within a vehicle. For example, when validating the performance of a radar antenna placed in a vehicle’s bumper, engineers run electromagnetic simulations to determine the exact mounting position of the sensor and the effect of the surrounding material layers.

Jones explains: “This includes the evaluation of possible boresight and bearing errors, as well as dynamic vibration and so-called virtual-drive tests producing radar/Doppler heat maps for checking how the potential objects with different radar cross sections can be identified.”

Altair Feko, a computational electromagnetics tool, is routinely used to simulate radar sensor design as well as integration aspects such as radome and bumper effects.

Jones adds: “Altair also offers solutions for ultrasonic sensors, wireless sensors, navigation/positioning, and lidar modelling. Moreover, based on our portfolio in data analytics, the sensor data fusion that combines feedback from multiple sensors can be leveraged to optimise the algorithms for car behaviour.”
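Sensor data fusion can take many forms, from Kalman filters to learned fusion networks. As a minimal sketch of the underlying idea, and not a description of Altair’s algorithms, the Python below fuses independent range estimates from several sensors by inverse-variance weighting. It assumes unbiased, independent Gaussian errors with known variances; the sensor names and noise values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RangeMeasurement:
    sensor: str
    range_m: float
    variance_m2: float  # measurement noise variance (assumed known)


def fuse(measurements: list[RangeMeasurement]) -> tuple[float, float]:
    """Inverse-variance weighted fusion of independent range estimates.

    Returns the fused range and its variance. More precise sensors
    (smaller variance) receive proportionally larger weights.
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    weights = [1.0 / m.variance_m2 for m in measurements]
    total = sum(weights)
    fused = sum(w * m.range_m for w, m in zip(weights, measurements)) / total
    return fused, 1.0 / total


readings = [
    RangeMeasurement("radar", 25.3, 0.04),
    RangeMeasurement("lidar", 25.0, 0.01),
    RangeMeasurement("camera", 26.5, 1.0),
]
distance, variance = fuse(readings)
print(f"fused range {distance:.2f} m, variance {variance:.4f} m^2")
# → fused range 25.07 m, variance 0.0079 m^2
```

The fused variance is smaller than that of any single sensor, which is the basic argument for combining feedback from several sensor types.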

However, such autonomous innovations increase vehicle complexity. This puts further pressure on already overstretched design and development lifecycles, in which engineers are expected to meet tight deadlines despite mounting product complexity. This is where modelling and simulation can help, as Jones explains: “Exploring all the required scenarios within shorter product development cycles requires advanced simulation and the application of high-performance computing (HPC).”

“One challenge is to ensure the best sensor performance in the field, which requires continuous testing with prototypes. As availability of such prototypes is increasingly scarce due to shortened development cycles, we developed software solutions – digital twins – and so-called virtual-drive tests (VDT).”

To meet the demands of automotive players, Ansys has been implementing a long-term ML/AI strategy to shorten the development times of autonomous systems, while widening its autonomous capabilities. Gallee says: “Current L3 systems are limited in terms of ODD (operational design domain). OEMs are now engaging in a new race to extend the functions offered to the driver.

“This will require higher capabilities in the car in terms of Artificial Intelligence, higher computation power with a better power consumption ratio, more sensors, and sensors with higher resolution, such as 4D imaging radar or lidar.”

Sensors are also advancing in terms of the information they can gather. Traditional radar systems are designed to scan the roadway across the horizontal plane and identify the ‘three Ds’ of an object: distance, direction, and relative velocity (Doppler).
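Two of the three Ds follow from textbook radar relations: distance from the echo’s round-trip time, R = cτ/2, and relative radial velocity from the Doppler shift, v = f_d·λ/2. The Python below is a minimal sketch assuming an idealised single echo. Real automotive radars (typically FMCW sensors operating around 77 GHz) extract these quantities from beat-frequency spectra rather than by timing pulses directly, and the example numbers are hypothetical.

```python
C = 299_792_458.0  # speed of light, m/s


def range_from_round_trip(tau_s: float) -> float:
    """Target distance from the echo's round-trip time: R = c * tau / 2."""
    return C * tau_s / 2.0


def radial_velocity_from_doppler(doppler_hz: float, carrier_hz: float) -> float:
    """Relative radial velocity from the Doppler shift: v = f_d * lambda / 2.

    With this sign convention, a positive shift means the target is approaching.
    """
    wavelength = C / carrier_hz
    return doppler_hz * wavelength / 2.0


# Illustrative echo values for a 77 GHz radar.
r = range_from_round_trip(400e-9)                # ~59.96 m
v = radial_velocity_from_doppler(5_137.0, 77e9)  # ~10.0 m/s
```

The factor of two in both formulas comes from the signal travelling to the target and back.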

Newer 4D imaging radar systems add a fourth dimension: vertical information, or elevation. Jones explains: “We are working with our customers to enhance the radar sensors in this direction. With this, the 4D radar sensor performance can be evaluated with consideration to sensors that involve elements that are arrayed both horizontally and vertically.

“This helps especially for critical (safety relevant) scenarios such as pedestrian crossings, bus stations, bridge underpasses, and tunnel entrances.”
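Why vertically arrayed elements add information can be sketched with the standard phase-interferometry relation Δφ = 2π·d·sin(θ)/λ for two antenna elements spaced d apart. Applied to a horizontal pair it yields azimuth; applied to a vertical pair it yields elevation, which in turn gives a target’s height. The Python below is a minimal sketch assuming a single far-field target, a two-element pair with half-wavelength spacing and hypothetical phase readings; it is not Altair’s sensor model.

```python
import math


def arrival_angle_deg(phase_diff_rad: float, spacing_m: float, wavelength_m: float) -> float:
    """Angle of arrival from the phase difference between two antenna elements.

    Inverts delta_phi = 2*pi*d*sin(theta)/lambda.
    """
    s = wavelength_m * phase_diff_rad / (2.0 * math.pi * spacing_m)
    if not -1.0 <= s <= 1.0:
        raise ValueError("phase difference inconsistent with element spacing")
    return math.degrees(math.asin(s))


def height_above_sensor(range_m: float, elevation_deg: float) -> float:
    """Vertical offset of a target relative to the sensor: h = R * sin(elevation)."""
    return range_m * math.sin(math.radians(elevation_deg))


wavelength = 299_792_458.0 / 77e9  # ~3.9 mm at 77 GHz
spacing = wavelength / 2.0         # half-wavelength element spacing

azimuth = arrival_angle_deg(math.pi / 4, spacing, wavelength)     # horizontal pair, ~14.48 deg
elevation = arrival_angle_deg(math.pi / 12, spacing, wavelength)  # vertical pair, ~4.78 deg
h = height_above_sensor(50.0, elevation)                          # ~4.17 m at 50 m range
```

A horizontal-only radar would place this return at road level; with elevation, a target about 4 m above the sensor can be recognised as, for example, a bridge deck rather than a stopped vehicle.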

AI, Robot

The automotive industry is just one of the many industries now developing and deploying autonomous systems. Amit Goel, Director of Product Management for Edge AI and Robotics at Nvidia, says: “We are seeing a huge growth in Autonomous Mobile Robots (AMRs) in manufacturing and warehousing, robotic manipulation for pick-and-place palletisation flows, inventory management with computer vision for shelf scanning, and industrial safety applications.

“There are autonomous machines in retail applications for floor-cleaning AMRs, self-checkout, and more. In agriculture, we have seen a huge adoption of autonomous machines for smart tractors for harvesting, weeding and crop monitoring, for example.”

Nvidia works with roboticists and developers, providing them with hardware and software toolkits to build autonomous machines across a range of sectors and use cases. The company’s approach to developing these robots follows four steps.

The first step is training the robots, as Goel explains: “Nvidia’s GPU and AI offerings enable the rapid training of computer vision models with multi-camera, multi-sensor and 3D perception. Nvidia’s tools such as Synthetic Data Generation, TAO toolkit, and pre-trained models, make it easy to bring the best AI training workflow to equip the robotics and automation industry with advanced perception, motion planning, and AI.”

The second step is simulating the robots using Nvidia Isaac Sim, a robotics simulation application that provides photorealistic, physically accurate virtual environments.

The third step is building the robot. Here, Nvidia Jetson is paired with the Isaac ROS (Robot Operating System) SDK to accelerate AI and computer vision on the robot itself, enabling robot companies to use advanced AI to boost the robot’s performance.

Lastly, the robots must be managed at scale. “Nvidia offers remote management tools and route optimisation software that makes robots collaboratively work together with minimum downtime,” Goel adds.

But challenges remain for autonomous systems across every industry. Manufacturing service provider Industrial Next, for example, works in the design and development of semi-autonomous and fully autonomous cars and trucks. Lukas Pankau, co-CEO of Industrial Next, says: “The manufacturing environment is challenging for sensors, due to poor lighting conditions, debris, moving parts, electrical interference and poor connectivity. We are using computational and technological methods to overcome these challenges – clever computer vision advancements can improve images that the naked eye can’t see.”

Integrating legacy systems is another major challenge, according to Pankau: “Whether it is the loss of institutional knowledge or entrenched, outdated processes, we have to be able to work with existing systems before we can prove our systems are better.

“This further impacts our ability to simulate and model, as we have to develop from the ground-up smart systems that can collect enough data for us to make an impact. We are working on integrating all of our autonomous systems so they can operate and collect data on their own, while still feeding into a greater common digital twin of the manufacturing process,” Pankau adds.

Edge computing is helping Industrial Next to boost the capabilities of its autonomous systems. Pankau explains: “Algorithmic improvements can better utilise computation power on our edge computing modules. Over-the-air updates allow us to push better algorithms and improved AI to our edge computing modules. And all of this can be validated using a simulation environment built using actual data collected through our sensors.”

But training and testing autonomous systems for “longer tail environmental variables, object variation, and lighting conditions” still presents challenges, according to Goel. “In the past, robots were only functional for a single highly defined task that required direct programming, such as robotic welding.”

An agricultural robot or a robot arm, for example, may need to pick up different coloured boxes from a pallet, where each object is a different size, colour and weight.

“In order to program a robot with longer behaviour trees to interact with more object variation, training in a virtual world like Isaac Sim means these different image datasets can be created for training, different scenarios can be tested in virtual to verify the robot’s programming, and the outputted software can be deployed on hardware designed for real-time computer vision,” Goel explains.
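A behaviour tree composes small actions using control nodes such as Sequence (every child must succeed, in order) and Fallback (the first child to succeed wins), which is what makes longer trees manageable as object variation grows. The Python below is a minimal sketch of that idea applied to the box-picking example; the node names, the blackboard dictionary and the weight thresholds are hypothetical, and it is not the API of Isaac Sim or of any ROS behaviour-tree library.

```python
from enum import Enum
from typing import Callable


class Status(Enum):
    SUCCESS = "success"
    FAILURE = "failure"


class Node:
    def tick(self, bb: dict) -> Status:
        raise NotImplementedError


class Action(Node):
    """Leaf node wrapping a function that reads the shared blackboard."""

    def __init__(self, name: str, fn: Callable[[dict], bool]):
        self.name, self.fn = name, fn

    def tick(self, bb: dict) -> Status:
        ok = self.fn(bb)
        bb.setdefault("log", []).append(f"{self.name}:{'ok' if ok else 'fail'}")
        return Status.SUCCESS if ok else Status.FAILURE


class Sequence(Node):
    """Ticks children in order; fails as soon as one child fails."""

    def __init__(self, *children: Node):
        self.children = children

    def tick(self, bb: dict) -> Status:
        for child in self.children:
            if child.tick(bb) is Status.FAILURE:
                return Status.FAILURE
        return Status.SUCCESS


class Fallback(Node):
    """Ticks children in order; succeeds as soon as one child succeeds."""

    def __init__(self, *children: Node):
        self.children = children

    def tick(self, bb: dict) -> Status:
        for child in self.children:
            if child.tick(bb) is Status.SUCCESS:
                return Status.SUCCESS
        return Status.FAILURE


# Hypothetical pick behaviour: choose a grasp based on the detected box's weight.
pick_box = Sequence(
    Action("detect_box", lambda bb: "box" in bb),
    Fallback(
        Action("light_grasp", lambda bb: bb["box"]["weight_kg"] < 2.0),
        Action("firm_grasp", lambda bb: bb["box"]["weight_kg"] < 10.0),
    ),
    Action("place_box", lambda bb: True),
)

bb = {"box": {"weight_kg": 5.0}}
result = pick_box.tick(bb)  # Status.SUCCESS via the firm grasp
```

Handling a new kind of object then means adding a branch to the Fallback rather than rewriting the whole program, and such branches can be exercised in simulation before deployment.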

The end-to-end Isaac robotics platform on Nvidia Omniverse offers the entire robotics ecosystem advanced AI, powerful simulation software and accelerated computing capabilities, with corresponding hardware from the Jetson family. “More than 1,000 companies rely on one or many parts of Nvidia Isaac, including companies that deploy physical robots developed and tested in the virtual world using Isaac Sim,” Goel adds.

Amazon Robotics, for example, uses Nvidia Omniverse Enterprise and Isaac Sim to simulate warehouse design and train robots with synthetic data generation using the Isaac Replicator tool. This helps the company “gain operational efficiencies before physically implementing them in warehouses,” according to Goel. “This helps the e-commerce giant fulfil thousands of orders in a cost- and time-efficient manner.”
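Synthetic data generation commonly relies on domain randomisation: varying object colour, size and weight, lighting and camera pose across many virtual scenes so that a perception model is exposed to the object and lighting variation Goel describes. The Python below is a minimal, renderer-free sketch of the sampling step only; the parameters, ranges and colour list are hypothetical, and it does not use Isaac Replicator’s API, which is what would handle the rendering and ground-truth labelling a real pipeline needs.

```python
import random
from dataclasses import asdict, dataclass

COLOURS = ["red", "green", "blue", "yellow", "brown"]


@dataclass
class SceneConfig:
    box_colour: str
    box_size_m: float
    box_weight_kg: float
    light_intensity_lux: float
    camera_height_m: float


def randomise_scene(rng: random.Random) -> SceneConfig:
    # Hypothetical ranges. A real pipeline would render each configuration
    # and attach labels (e.g. bounding boxes) produced by the simulator.
    return SceneConfig(
        box_colour=rng.choice(COLOURS),
        box_size_m=round(rng.uniform(0.1, 0.6), 3),
        box_weight_kg=round(rng.uniform(0.5, 15.0), 2),
        light_intensity_lux=round(rng.uniform(50.0, 2000.0), 1),
        camera_height_m=round(rng.uniform(1.0, 2.5), 2),
    )


def generate_dataset(n: int, seed: int = 42) -> list[dict]:
    rng = random.Random(seed)  # fixed seed so the dataset is reproducible
    return [asdict(randomise_scene(rng)) for _ in range(n)]


dataset = generate_dataset(1000)
```

Seeding the generator keeps a dataset reproducible, so a model trained on it can be compared fairly against one trained on a different randomisation.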

Deutsche Bahn is training AI models to handle important but unexpected corner cases that rarely happen in the real world, such as luggage falling onto a train track, in order to improve safety.

Soft Robotics is also harnessing Nvidia Isaac Sim to help close the sim-to-real gap for robotic gripping applications. Food packaging and processing companies such as Tyson Foods are using the startup’s mGripAI system, which combines soft grasping with 3D vision and AI to grasp delicate foods such as proteins, produce and bakery items without damage.

Aeronautics and defence are also major areas of interest for Ansys. Gallee adds: “We are engaged in various drone and aircraft programs that are combining AI, sensors, and critical embedded software with a Digital Mission Engineering approach. Projects with Embraer and Airbus Defence and Space provide more exciting insights into the very latest on this evolving space for autonomous systems.”

Industrial Next is now fine-tuning its autonomous motion planning algorithms to adjust to many different parts. “This can be applied to several industrial problems in assembly and logistics. The next evolution for us from moving parts will be using the same technology to do assembly and installation,” Pankau adds.

“With the improvement of our core technology of computer vision, motion planning, and robotic control, we will focus on data connectivity and systems-level improvements. A holistic approach to the manufacturing process will allow us to find improvements in product design that will cascade down into improvements on the factory floor. This constant feedback from our autonomous systems on the factory floor to the product design will create a virtuous cycle similar to what we did for the Model 3 production at Tesla.”

Industrial Next understands how challenging it is to achieve a fully autonomous factory, or “alien dreadnought” as Elon Musk sometimes calls it. “It requires a first-principles, holistic approach that can be difficult for those with existing facilities and sunk costs,” Pankau explains.

“This future of an autonomous factory isn’t just to make things without human labour, it will allow new product innovations and customisation that today we can only get through low-volume expert craftsmanship. A holistic autonomous factory will allow product improvements and customisation to occur at the speed and efficiency of mass production.”

Instead, humans will work alongside these autonomous systems as designers, creatives, and AI trainers, according to Pankau, who concludes: “Humans will always outperform AI at certain tasks, but working together in an autonomous system will allow the system to work better and humans to have more rewarding work, changing the face of manufacturing forever.”