When an abstract, intractable, or complicated system is visualised successfully, the results can be some of the most engaging aspects of HPC today. Dr Lakshmi Sastry is a senior software engineer at the UK’s Science and Technology Facilities Council (STFC). Among her many interests, Dr Sastry works on optimising HPC data processing for users of the STFC’s Isis neutron spectroscopy facility and the Diamond Light Source synchrotron. ‘One of the results of high performance computing is extremely large amounts of data,’ she explains. ‘We’re talking about many gigabytes of data, and hundreds of megabytes per hour. This could come from simulations or observed data.’

Facilities such as Diamond and Isis are expensive: ‘When researchers are conducting experiments, they want to look at the data and see whether or not the experimental set-up is good, and to see whether they’re producing useful data,’ says Sastry. ‘Up until very recently, they would pay lots of money for a day at the facility, and then take the data back to their organisation, and only then find out if the work was useful. We’re now moving towards real-time analysis and visualisation of data,’ Sastry says, adding that researchers may also run simulations alongside their experiments to compare the results they are generating with those they expect. A degree of in-line, real-time fine-tuning is now possible.

Modelling the heart

Sastry’s techniques allow researchers to use computationally demanding visualisation algorithms without needing on-site access to the expensive HPC equipment those algorithms require. Sastry believes that the approach could have revolutionary applications in a clinical diagnostic setting – an area of medical science that has seldom taken advantage of HPC. A project called Integrated Biology, recently completed by the team, examined ways of improving the prognosis for heart attack patients through more accurate diagnosis of the extent of their heart attack. ‘Some people suffer a heart attack and go through treatment, and then make a full recovery, but some people pass away after suffering the first heart attack. The differences often depend on which parts of the heart tissue are damaged. Given that treatment is very uniform from outside [the body], it can actually miss [the affected area] – if, for example, the doctor tries to inject adrenaline, but it is injected into the ischemic tissue [the parts that have died due to lack of oxygen], it is not going to have a good effect.’

A group at Oxford University has created an anatomically correct mathematical model of the human heart, with a view to better understanding where these ischemic areas are likely to be after a heart attack. ‘If they then run the model on the best supercomputer they have, using 2,000 processors, for 24 hours, they get seven seconds of simulation data,’ says Sastry, adding that this is insufficient to gain an understanding of the processes involved. Furthermore, supercomputing resources are limited, leaving little opportunity to adapt the simulation: ‘A big part of the project money would be used up, and it might not even give you any new information.’
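The figures in Sastry’s example make the cost concrete. The short calculation below uses only the numbers quoted (2,000 processors, 24 hours, seven simulated seconds); the helper names are our own, chosen for illustration.

```python
# Figures quoted in the text: 2,000 processors for 24 hours -> 7 s of simulated heart activity.
PROCESSORS = 2_000
WALL_CLOCK_HOURS = 24
SIMULATED_SECONDS = 7


def processor_hours_per_simulated_second(processors: int, hours: float, simulated_s: float) -> float:
    """Total processor-hours spent, divided by the seconds of simulated time produced."""
    return processors * hours / simulated_s


def wall_clock_slowdown(hours: float, simulated_s: float) -> float:
    """Seconds of real (wall-clock) time needed for each simulated second."""
    return hours * 3600 / simulated_s


cost = processor_hours_per_simulated_second(PROCESSORS, WALL_CLOCK_HOURS, SIMULATED_SECONDS)
slowdown = wall_clock_slowdown(WALL_CLOCK_HOURS, SIMULATED_SECONDS)
print(f"{PROCESSORS * WALL_CLOCK_HOURS:,} processor-hours in total")
print(f"~{cost:,.0f} processor-hours per simulated second")
print(f"~{slowdown:,.0f}x slower than real time")
```

At roughly 6,900 processor-hours per simulated second, even a few extra seconds of heart activity would consume a large share of a project’s allocation, which is why checking a run before it completes matters.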

Computational steering techniques, using snapshots taken at intermediate times, allow the researchers to check that the simulation is proceeding in the correct direction, ensuring that computer resources are not wasted. ‘Computational steering is best achieved with 3D visualisation,’ states Sastry. ‘Again, the data produced is in very large quantities, and so real-time visualisation using a single machine is not possible.’
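A computational-steering loop can be sketched in a few lines. The version below is a minimal, hypothetical illustration, not the STFC software: the simulation is reduced to a `step` function, a snapshot is inspected every `snapshot_every` steps, and `is_on_track` stands in for the researcher’s judgement, which in practice would come from looking at a 3D visualisation of the snapshot.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class SteeringSession:
    """Toy computational-steering loop (hypothetical names throughout)."""
    step: Callable[[float], float]          # advances the simulation state by one time step
    is_on_track: Callable[[float], bool]    # stand-in for inspecting a visualised snapshot
    snapshot_every: int = 10                # how often an intermediate snapshot is checked
    snapshots: List[float] = field(default_factory=list)

    def run(self, state: float, max_steps: int) -> Tuple[float, int, bool]:
        """Return (final state, steps taken, whether the run completed)."""
        for i in range(1, max_steps + 1):
            state = self.step(state)
            if i % self.snapshot_every == 0:
                self.snapshots.append(state)
                if not self.is_on_track(state):
                    # Stop early instead of spending the rest of the allocation.
                    return state, i, False
        return state, max_steps, True


# Usage: a state that grows 5% per step is judged "off track" once it reaches 100.
session = SteeringSession(step=lambda s: s * 1.05, is_on_track=lambda s: s < 100)
final_state, steps_taken, completed = session.run(1.0, max_steps=1000)
print(steps_taken, completed, len(session.snapshots))
```

The run is abandoned at the first snapshot that fails the check, after 100 of the 1,000 permitted steps; the remaining 900 steps of compute are never spent.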

The ultimate aim of the Integrated Biology project is to provide an accurate mathematical model of how a healthy heart functions. Failing hearts can then be compared to this model in order to provide clinicians with an idea of where the damage is, and how best to treat it. A grid service would allow the demanding computation to be done wherever the resources exist, meaning that HPC facilities need not be co-located with the point of care.

The computing resources required are significant: STFC, alongside Edinburgh University, runs the UK’s Blue Gene and HPCx supercomputers and is also one of the nodes of the UK’s National Grid Service (NGS). In addition, Dr Sastry uses a powerful dedicated visualisation cluster, optimised for handling very large amounts of data through the use of InfiniBand interconnects. Scientists wishing to use this visualisation capacity connect via the grid, and the computational workload is distributed across the visualisation cluster and other resources as available. ‘In order to do this, we partition the data and farm out the processing to different nodes. It’s then brought back together, and it creates a full image. We build in the intelligence to stitch all of the images back together dynamically, in the correct way.’ The resulting visualisation data is then sent back to the scientists running the application.
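The partition, farm-out and stitch pattern Sastry describes can be illustrated with image-space (sort-first) decomposition: the final image is split into strips, each strip is rendered independently, and each result carries its offset so it can be put back in place however late it arrives. This is a minimal sketch under stated assumptions: `render_pixel` is a toy stand-in for a real renderer, threads stand in for cluster nodes, and STFC’s system may well partition the data volume rather than the image.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import List, Tuple

Image = List[List[float]]


def render_pixel(x: int, y: int, width: int, height: int) -> float:
    """Toy 'renderer' (hypothetical): distance from the image centre.
    A real renderer would ray-cast through the data set here."""
    dx, dy = x - width / 2, y - height / 2
    return (dx * dx + dy * dy) ** 0.5


def render_strip(y0: int, y1: int, width: int, height: int) -> Tuple[int, Image]:
    """Render rows [y0, y1) and return the strip with its row offset for stitching."""
    rows = [[render_pixel(x, y, width, height) for x in range(width)] for y in range(y0, y1)]
    return y0, rows


def partition_rows(height: int, parts: int) -> List[Tuple[int, int]]:
    """Split [0, height) into `parts` contiguous, nearly equal row ranges."""
    base, extra = divmod(height, parts)
    ranges, start = [], 0
    for i in range(parts):
        end = start + base + (1 if i < extra else 0)
        ranges.append((start, end))
        start = end
    return ranges


def render_parallel(width: int, height: int, workers: int) -> Image:
    """Farm strips out to workers, then stitch them back in the correct order."""
    image: Image = [[] for _ in range(height)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(render_strip, y0, y1, width, height)
                   for y0, y1 in partition_rows(height, workers)]
        # Strips complete in arbitrary order; the offset puts each one in its place.
        for future in as_completed(futures):
            y0, rows = future.result()
            image[y0:y0 + len(rows)] = rows
    return image


picture = render_parallel(64, 48, workers=4)
print(len(picture), len(picture[0]))
```

Returning the offset with each strip is what makes the stitching order-independent: no worker needs to know when the others finish.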

Lost worlds

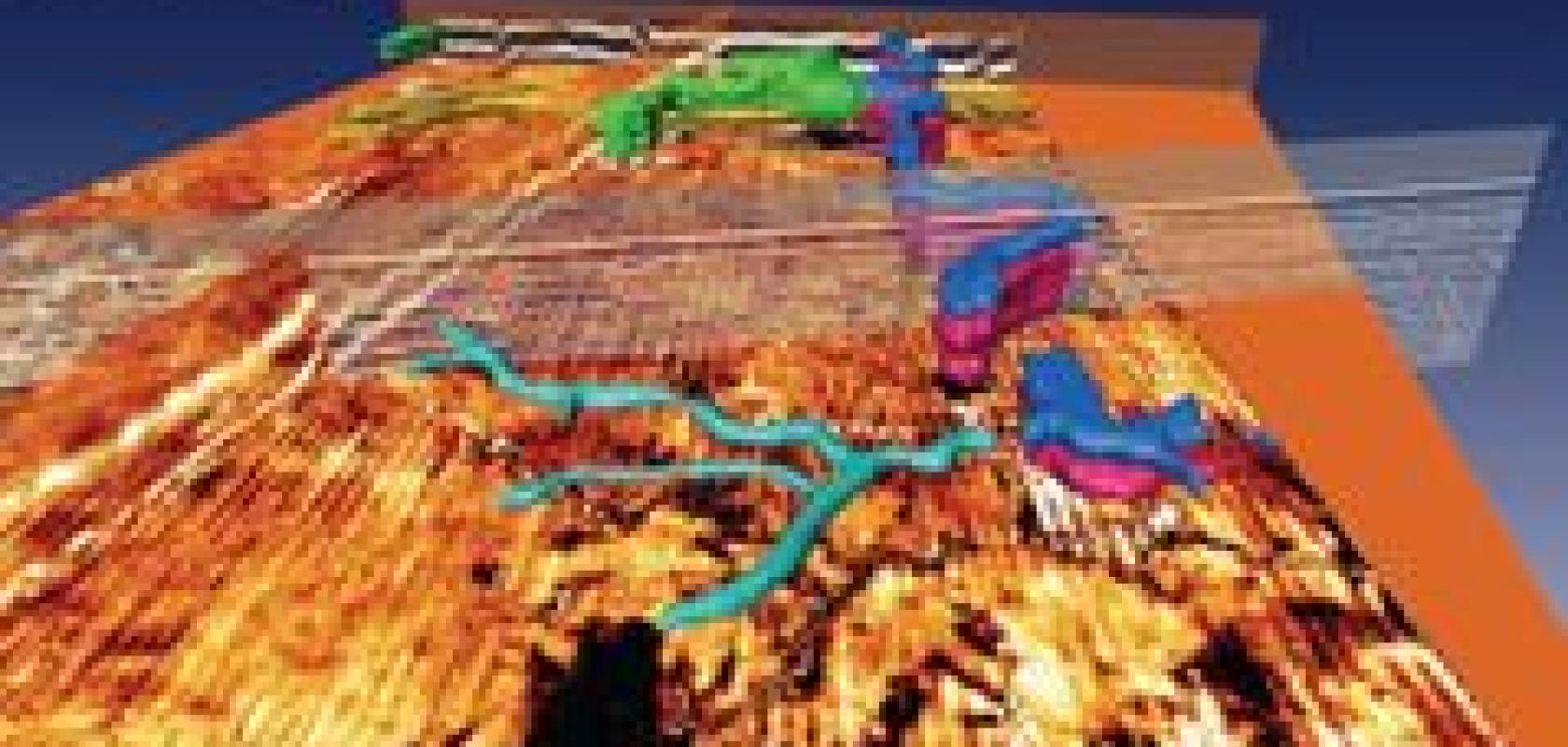

Visualisation techniques are often the only way for researchers to gain an understanding of real-world scans. It would be nearly impossible for oil prospectors to identify the regions they’re looking for by examining raw seismological data, and so information from seismic surveys is turned into a 3D map of an area’s geology. As a result of these accessible, intuitive visualisations, the tried-and-tested surveying techniques of petrochemical prospectors have found new applications in other disciplines, including archaeology. The IBM Visual and Spatial Technology Centre (VISTA) at the University of Birmingham, UK, has used HPC-driven visualisation to uncover details of an area of land that was reclaimed by the North Sea more than 10,000 years ago. Seismic data, produced by Petroleum Geo-Services (PGS), was analysed by the Birmingham group using Avizo visualisation software, produced by VSG. Daniel Lichau, a product expert at VSG, explains that the group used the data to conduct an extensive sub-surface survey of the ground underneath the North Sea. Again, hundreds of gigabytes of data had to be processed, necessitating an HPC-driven approach.

‘The first use for Avizo is to inspect and browse very large data sets,’ says Lichau. ‘The technology to achieve this is based on a multi-resolution approach, allowing the user to progressively display data that may not fit in memory. The Avizo software manages data between disk memory and the graphics board in order to bring a visualisation to the user.’ In addition, the software allowed the group to label parts of the data, such as an ancient riverbed running through the area under investigation. In this way, archaeologists at the university were able to identify areas of the sea floor that were once inhabited by Mesolithic people.
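Lichau does not detail Avizo’s internals, so the sketch below is only a generic illustration of a multi-resolution scheme, with hypothetical function names: each level halves the volume along every axis, the viewer starts with the coarsest level, and it refines until it reaches the finest level that fits a given memory budget. The volume size and 4-byte voxels in the usage example are assumed values, not the PGS dataset.

```python
from typing import List, Tuple

Dims = Tuple[int, int, int]


def pyramid_levels(dims: Dims, min_edge: int = 64) -> List[Dims]:
    """Level 0 is full resolution; each further level halves every axis (rounding up)
    until the longest edge is at most `min_edge`."""
    levels = [dims]
    while max(levels[-1]) > min_edge:
        x, y, z = levels[-1]
        levels.append(((x + 1) // 2, (y + 1) // 2, (z + 1) // 2))
    return levels


def level_bytes(dims: Dims, bytes_per_voxel: int) -> int:
    """Memory needed to hold one level in full."""
    x, y, z = dims
    return x * y * z * bytes_per_voxel


def finest_level_that_fits(dims: Dims, bytes_per_voxel: int, budget_bytes: int,
                           min_edge: int = 64) -> int:
    """Index of the most detailed level within the budget (coarsest if none fits)."""
    levels = pyramid_levels(dims, min_edge)
    for i, level in enumerate(levels):
        if level_bytes(level, bytes_per_voxel) <= budget_bytes:
            return i
    return len(levels) - 1


def progressive_order(dims: Dims, bytes_per_voxel: int, budget_bytes: int,
                      min_edge: int = 64) -> List[int]:
    """Levels to display in turn: coarsest first, refining to the finest that fits."""
    finest = finest_level_that_fits(dims, bytes_per_voxel, budget_bytes, min_edge)
    coarsest = len(pyramid_levels(dims, min_edge)) - 1
    return list(range(coarsest, finest - 1, -1))


# Assumed example: a 4096 x 4096 x 2048 volume of 4-byte samples (128 GiB)
# viewed with a 2 GiB memory budget.
volume: Dims = (4096, 4096, 2048)
print(progressive_order(volume, bytes_per_voxel=4, budget_bytes=2**31))
```

The user sees a coarse overview almost immediately and a sharper one as each finer level loads, while the full-resolution volume never has to sit in memory at once.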

Both Avizo and the seismic surveying techniques used were developed with oil and gas prospecting in mind, but archaeology has been able to take advantage of these powerful tools, with some fascinating results.

Peeling statues

Similarly, computerised X-ray tomography (CT scanning) has become an invaluable tool in clinical medicine, but its applications are not limited to medicine. Researchers at the Department of Physics of the University of Bologna, Italy, have used HPC-driven visualisation to analyse CT data in order to investigate hidden aspects of several ancient works of art, with a view to better preservation of the artefacts. The CT technique is not new, but Professor Franco Casali and his group have developed innovative methods of analysing the data produced – techniques specifically suitable for studying large works of art. The team explains that one particular analysis of a two-metre-high wooden Japanese statue (known as a Kongōrikishi) generated more than 24,000 individual radiographic images, amounting to 120GB of data.

Prior to the adoption of an HPC solution, processing the images of the Kongorikishi took up to two months using a single dual-core machine running Windows XP and the group’s C++-based software. The researchers were able to achieve resolution better than the standard in medicine, but they added that processing times of this length negated the usefulness of the analysis, especially since researchers have to travel to the artworks to take these scans. The university therefore needed a system capable of running the complex algorithms involved at much higher speeds, and of doing so wherever the artefacts were located. Having already written its algorithms, the university also wanted a solution that did not require its developers to rewrite the software.

HPC-driven visualisation has been used to analyse ancient works of art, such as this Kongorikishi statue. By switching to a cluster running Microsoft’s Windows HPC Server 2008, the team at the University of Bologna’s Department of Physics increased data processing performance by a factor of 75.
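The figures quoted for the Kongorikishi scan can be put into more practical terms. Taking ‘two months’ as 60 days (an assumption) and using decimal units, the arithmetic is:

```python
# Figures from the text: 24,000 radiographs totalling 120 GB; up to two months of
# processing on one dual-core PC; a 75x speed-up on the cluster.
IMAGES = 24_000
TOTAL_GB = 120
SERIAL_DAYS = 60   # assumption: "two months" taken as 60 days
SPEEDUP = 75

mb_per_image = TOTAL_GB * 1000 / IMAGES    # decimal units: 1 GB = 1000 MB
cluster_hours = SERIAL_DAYS * 24 / SPEEDUP

print(f"~{mb_per_image:.0f} MB per radiograph")
print(f"~{cluster_hours:.1f} hours on the cluster instead of ~{SERIAL_DAYS} days")
```

A 75-fold speed-up turns a two-month job into something that can finish within a day, short enough to check results while the team is still on site with the artwork.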

Vince Mendillo, director of HPC at Microsoft, recalls the difficulties of the application: ‘The tomographic images they were making essentially used a frequency sensor’s input, cut into slices; it’s a very complex approach. Their requirements were to employ something that was capable of large sweeps of analysis, and able to process large quantities of data.’ The department now conducts its calculations on a four-node, eight-core cluster, running the Windows HPC Server 2008 operating system. ‘We’re seeing Windows HPC Server applied in some cutting-edge areas,’ says Mendillo. ‘The group experienced a speedup in performance of a factor of 75 when they switched to a fairly modestly-sized cluster configuration running Windows HPC Server, and they didn’t have to re-write their code; it is portable between the Windows client, the server, and our future Cloud versions.’
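Code that was written for one machine can often scale without a rewrite when the work splits into independent pieces, and CT reconstruction can frequently be organised slice by slice. A minimal sketch of that pattern, assuming a hypothetical `reconstruct_slice` routine standing in for the group’s existing per-slice code: the routine is left untouched, and only the loop that calls it is replaced by a pool that farms slices out to workers. Threads are used purely so the example is self-contained; on a real cluster the workers would be processes spread across nodes by a job scheduler.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def reconstruct_slice(projection_data: Sequence[float]) -> float:
    """Hypothetical stand-in for an existing serial per-slice routine.
    (A real routine would return a reconstructed 2D slice, not one number.)"""
    return sum(projection_data) / len(projection_data)


def farm_out(fn: Callable[[T], R], items: Sequence[T], workers: int) -> List[R]:
    """Apply an unchanged per-item function to independent items in parallel,
    returning results in the original order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(fn, items))


# Before: one loop on one machine.
slices = [[float(i + j) for j in range(8)] for i in range(100)]
serial = [reconstruct_slice(s) for s in slices]

# After: the same function, farmed out; only the driver loop changed.
parallel = farm_out(reconstruct_slice, slices, workers=8)
print(parallel == serial)
```

Because `pool.map` preserves input order, the reassembled stack of slices matches the serial result exactly.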

The benefits of the increased visualisation capacity are comparable to those offered by Sastry’s work, in that it allows the researchers to make the most of their time through real-time analysis of their work. Mendillo states that the performance of the new system exceeded the group’s expectations. ‘We have a key focus on working with academics around the world, both through Microsoft Research and through the business group, and it’s great to see such rapid adoption of our technology. There are new and interesting things happening every day.’

Working together

Many HPC vendors attending the Supercomputing (SC) and International Supercomputing (ISC) exhibitions bring demonstration machines with them, and so much computing power under one roof allows attendees to experience HPC visualisation for themselves. Mellanox builds the SCinet demonstration cluster annually at SC, and the ISCNet at ISC. The clusters are based on the combined HPC capacity of 20–25 organisations exhibiting at the show, with each stand’s hardware added to the cluster via a 120Gb/s InfiniBand network. Gilad Shainer, director of HPC and technical marketing at Mellanox Technologies, describes SCinet at SC’09: ‘Every time we do this, we try to bring something that has a lot of visualisation in the demonstration – it should be fun to see the demonstration, rather than just being about the technology.’

Shainer explains that the cluster was organised in two parts: ‘One server in every location served as a visualisation node, which rendered data and put it on a screen. The rest of the servers worked as a [single] rendering farm, rendering the application before sending the data to the visualisation nodes. Each of the servers was loaded with GPUs,’ he says, adding that due to the heterogeneity of the servers involved, the cluster included both Nvidia and ATI GPUs. ‘This may be the first time that a mix of ATI and Nvidia hardware has been used for a single application,’ says Shainer.

The demonstration at SC’09 was an immersive 3D visualisation of a car, based on models supplied by Peugeot. Observers were able to move around the car, and the image would adjust accordingly based on sensors attached to the 3D glasses used. Shainer states that the 120Gb/s InfiniBand (the first time, globally, that the technology has been pushed to this speed) was necessary in order to reduce latency to the point that there was no noticeable delay between an observer moving his or her head and the 3D image being updated accordingly.
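A back-of-the-envelope calculation shows why link bandwidth matters for head-tracked 3D. The frame size below (1920 x 1080 pixels, 4 bytes per pixel, one frame per eye) and the 60 Hz refresh rate are assumptions, not details of the Peugeot demonstration, and the model ignores encoding and protocol overhead, so real transfer times would be somewhat longer.

```python
def transfer_ms(width: int, height: int, bytes_per_pixel: int,
                link_gbps: float, frames: int = 1) -> float:
    """Raw time in milliseconds to push `frames` uncompressed frames over a link.
    Ignores encoding, protocol overhead and propagation delay."""
    bits = width * height * bytes_per_pixel * 8 * frames
    return bits / (link_gbps * 1e9) * 1e3


FRAME_BUDGET_MS = 1000 / 60   # assumed 60 Hz refresh

for gbps in (10, 120):
    ms = transfer_ms(1920, 1080, 4, gbps, frames=2)   # stereo pair: one frame per eye
    print(f"{gbps:>3} Gb/s: {ms:5.2f} ms per stereo pair "
          f"({ms / FRAME_BUDGET_MS:.0%} of a 60 Hz frame)")
```

On these assumptions a slower link would spend most of each frame interval just moving pixels, leaving little time for rendering and head tracking, whereas the faster link uses only a small fraction of it.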

Mellanox is already planning the demonstration for the ISC’10 exhibition, to be held in Hamburg, Germany. An Egyptian pyramid will be modelled as it was when it was first built. ‘We’ll bring the same impressive visualisations to the show floor, and we’ll be using 120Gb/s InfiniBand again, but we’ll also demonstrate a new technology that Mellanox is working on with Nvidia, called GPUDirect. It’s a development to reduce GPU-to-GPU communication overheads. Currently, communication between GPUs (in a rendering farm, for example) requires CPU cycles, even though InfiniBand is used to connect the servers. The CPU currently has to sit between the GPU and the InfiniBand during GPU communication. Nvidia has managed to take the CPU out of the loop, and cut the communication time by 30 per cent, more or less.’
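One way to picture where such a saving can come from is a toy timing model, assuming the overhead being removed is a CPU-driven copy between separate host buffers on each side of the link (one for the GPU, one for the InfiniBand adapter). The stages are treated as sequential, with no pipelining, and all bandwidths and the message size are illustrative values, not measurements; the 30 per cent in the quote is Mellanox’s figure, not an output of this model.

```python
from dataclasses import dataclass


@dataclass
class Link:
    """Illustrative bandwidths in bytes per second (assumed values)."""
    pcie: float          # GPU <-> host memory copy
    host_memcpy: float   # CPU copy between host buffers
    wire: float          # InfiniBand transfer


def staged_time(nbytes: float, link: Link) -> float:
    """GPU -> host, CPU copy into the network buffer, wire, CPU copy out, host -> GPU."""
    return (2 * nbytes / link.pcie           # device-to-host and host-to-device
            + 2 * nbytes / link.host_memcpy  # one CPU copy on each side
            + nbytes / link.wire)


def shared_buffer_time(nbytes: float, link: Link) -> float:
    """Same path, but GPU and adapter share one pinned buffer: no CPU copies."""
    return 2 * nbytes / link.pcie + nbytes / link.wire


link = Link(pcie=5e9, host_memcpy=5e9, wire=4e9)
message = 4e6   # a 4 MB message, chosen for illustration
print(f"staged: {staged_time(message, link) * 1e3:.2f} ms, "
      f"shared buffer: {shared_buffer_time(message, link) * 1e3:.2f} ms")
```

The exact saving depends entirely on the sizes and bandwidths plugged in; the model only shows that removing the CPU from the data path eliminates two copies per message.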