Researchers from IBM Research have created a new AI chip aimed at deep learning inference.

IBM Research has been investigating ways to reinvent the way that AI is computed. Analog in-memory computing, or simply analog AI, is a promising way to do so that borrows key features of how neural networks run in biological brains. In our brains, and those of many other animals, the strength of synapses (the “weights” in this case) determines communication between neurons. Analog AI systems store these synaptic weights locally in the conductance values of nanoscale resistive memory devices such as phase-change memory (PCM). They perform multiply-accumulate (MAC) operations, the dominant compute operation in deep neural networks (DNNs), by exploiting circuit laws, which mitigates the need to constantly send data between memory and processor.
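To make the MAC idea concrete, here is a minimal sketch of an idealized crossbar, not IBM's implementation. It assumes that the "circuit laws" are Ohm's law (each device passes a current equal to its conductance times the applied voltage) and Kirchhoff's current law (currents on a shared column wire add up). The function name `crossbar_mac` and the values are hypothetical, and noise, device drift, and converter quantization are ignored.

```python
def crossbar_mac(conductances, voltages):
    """Column currents of an idealized crossbar: I[j] = sum_i G[i][j] * V[i].

    conductances: rows x cols list of device conductances (the stored weights)
    voltages:     one input voltage per row (the activations)
    """
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    if len(voltages) != n_rows:
        raise ValueError("need one input voltage per crossbar row")

    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            # Ohm's law gives the per-device current G * V;
            # Kirchhoff's current law sums those currents along column j.
            currents[j] += conductances[i][j] * voltages[i]
    return currents


# Illustrative values in arbitrary units (assumed, not measured data).
G = [[1.0, 2.0],
     [3.0, 4.0]]
V = [0.5, 1.0]
column_currents = crossbar_mac(G, V)
```

In a physical array every column accumulates its current at the same time, so the nested loop above is only a software stand-in for what the circuit does in one step.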

To turn the concept of analog AI into a reality, two key challenges need to be overcome: These memory arrays need to be able to compute with a level of precision on par with existing digital systems, and they need to be able to interface seamlessly with other digital compute units, as well as a digital communication fabric on the analog AI chip.

In a paper published in Nature Electronics, IBM Research made a significant step towards addressing these challenges by introducing a state-of-the-art, mixed-signal analog AI chip for running a variety of DNN inference tasks. It’s the first analog chip that has been tested to be as adept at computer vision AI tasks as digital counterparts, while being considerably more energy efficient.

The paper explains that analogue in-memory computing (AIMC) can help to reduce the energy costs of AI computing. "Analogue in-memory computing (AIMC) with resistive memory devices could reduce the latency and energy consumption of deep neural network inference tasks by directly performing computations within memory. However, to achieve end-to-end improvements in latency and energy consumption, AIMC must be combined with on-chip digital operations and on-chip communication."

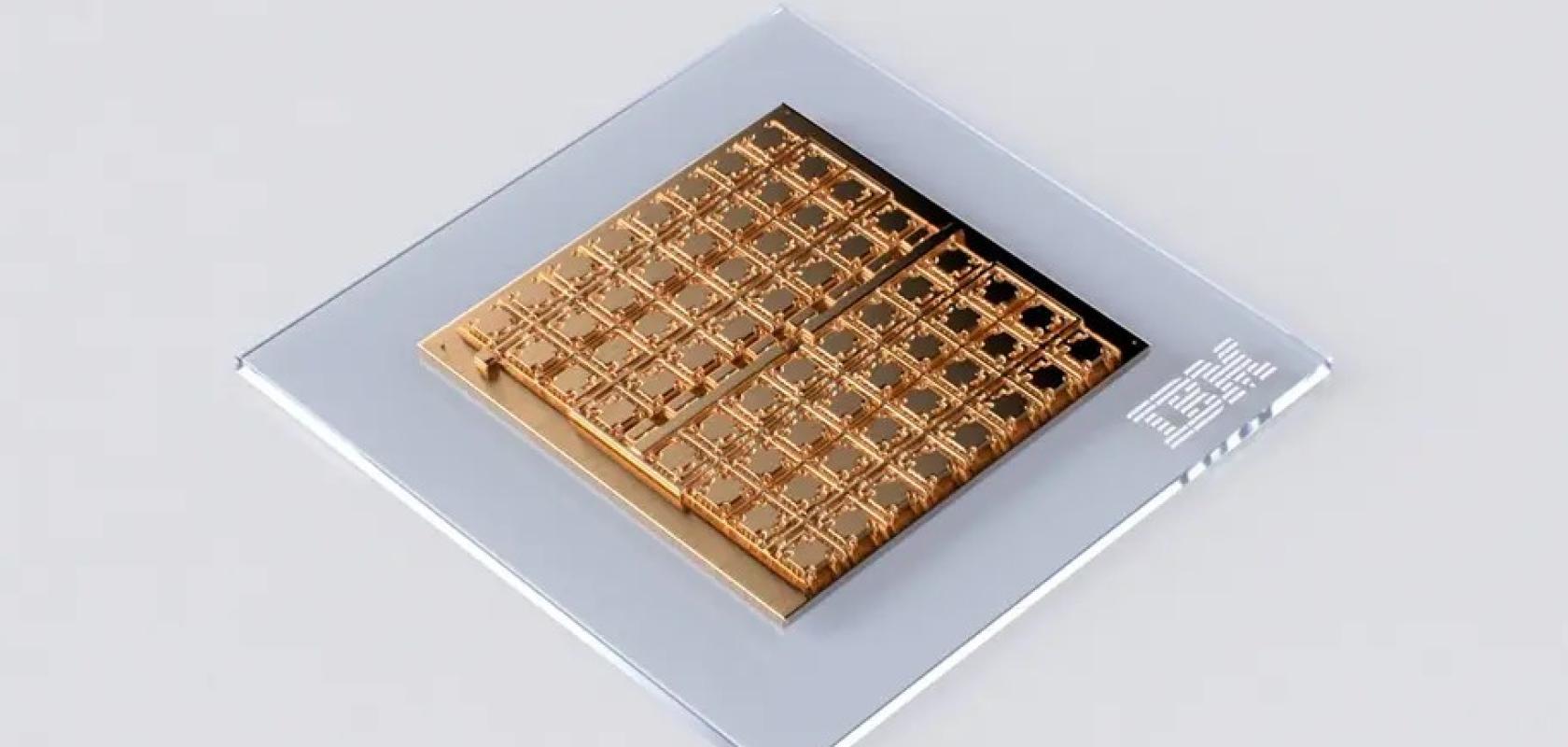

The paper reports that the team has successfully designed and fabricated a multicore AIMC chip in 14 nm complementary metal–oxide–semiconductor technology with backend-integrated phase-change memory. The fully integrated chip features 64 AIMC cores interconnected via an on-chip communication network. It also implements the digital activation functions and additional processing involved in individual convolutional layers and long short-term memory units. With this approach, the team demonstrates near-software-equivalent inference accuracy with ResNet and long short-term memory networks, while implementing all the computations associated with the weight layers and the activation functions on the chip.

The chip was fabricated at IBM’s Albany NanoTech Complex and is composed of 64 analog in-memory compute cores (or tiles), each of which contains a 256-by-256 crossbar array of synaptic unit cells. Compact, time-based analog-to-digital converters are integrated in each tile to transition between the analog and digital worlds. Each tile is also integrated with lightweight digital processing units that perform simple nonlinear neuronal activation functions and scaling operations.
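The tile geometry above implies some simple arithmetic, sketched here under two assumptions the article does not state: that each synaptic unit cell holds one weight, and that a layer's weight matrix is split into plain rectangular blocks. The names `total_unit_cells` and `tiles_needed` are hypothetical, and the chip's real layer-to-tile mapping may differ.

```python
import math

# Figures taken from the article.
TILES = 64
TILE_ROWS = 256
TILE_COLS = 256


def total_unit_cells():
    """Number of synaptic unit cells across all tiles."""
    return TILES * TILE_ROWS * TILE_COLS


def tiles_needed(rows, cols):
    """Tiles required for a rows x cols weight matrix under simple block
    partitioning (an assumed mapping, one weight per unit cell)."""
    return math.ceil(rows / TILE_ROWS) * math.ceil(cols / TILE_COLS)


capacity = total_unit_cells()         # 64 * 256 * 256
small_layer = tiles_needed(256, 256)  # fits exactly in one tile
wide_layer = tiles_needed(512, 300)   # spills over in both dimensions
```

Under these assumptions the chip offers 4,194,304 unit cells in total, and a 512-by-300 weight matrix would occupy four tiles, with part of the last column of tiles left unused.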

Using the chip, IBM researchers performed the most comprehensive study of the compute precision of analog in-memory computing and demonstrated an accuracy of 92.81% on the CIFAR-10 image dataset. The team believes this to be the highest accuracy of any currently reported chip using similar technology. The paper also shows how analog in-memory computing can be seamlessly combined with several digital processing units and a digital communication fabric. The chip's measured throughput per area for 8-bit input-output matrix multiplications, 400 GOPS/mm², is more than 15 times higher than that of previous multi-core, in-memory computing chips based on resistive memory, while achieving comparable energy efficiency.

In conjunction with the sophisticated hardware-aware training that IBM has developed in recent years, the company expects these accelerators to deliver software-equivalent neural network accuracies across a wide variety of models in the years ahead.