Canada’s competitiveness and its ability to develop the workforce it needs in sectors such as life sciences, financial services, advanced materials, aerospace, automotive, and energy rely on a robust digital research infrastructure. Fortunately, Canada has a solid base, both organisationally and technically, that can support the strong growth planned in this area for the next few years. That base is Compute Canada.

In its document Seizing Canada’s Moment: Moving Forward in Science, Technology and Innovation 2014, the Government of Canada acknowledged that advanced research computing (ARC) underpins the prosperity of our nation and is a major ingredient in the recipe that will transform Canada from a resource-based to a knowledge-based economy.

Compute Canada is the national organisation that provides and oversees Canada’s advanced research computing resources, including big data analysis, visualisation, data storage, software, and portals and platforms for research computing, for researchers at most of the country’s academic and research institutions. It is a national federation of advanced research computing service providers, funded by the federal government, provincial governments, and 34 of Canada’s most research-intensive universities and research hospitals. This federated model delivers the majority of the large-scale research computing capacity in Canada and serves more than 9,000 researchers across the country.

Investing in the future

Keeping up with demand for compute cycles and data storage is a challenge in every country. Until recently, capital funding in Canada for nationally shared resources had been limited, and Compute Canada had struggled to keep an ageing platform running. However, several recent announcements by government and national funding agencies have confirmed their commitment to Canada’s digital research infrastructure. These announcements will enable Compute Canada to increase its compute and storage capabilities by a factor of five or more over the next two years, with further investments expected in later years.

These investments underscore the importance of this infrastructure and the effectiveness of Compute Canada as a steward of the national ARC platform. Compute Canada’s federated model combines the resources of several layers of governance across the country: federal, provincial and institutional.

Bringing all of its compute services and storage capabilities under one national federation allows Compute Canada and its partners to concentrate and consolidate their investments and to provide world-class services to Canada’s leading researchers. It has also made us much more nimble.

Currently, Compute Canada supports more than 2,700 research teams using its systems, which means more than 9,000 researchers, as well as their international and industrial partners, use its resources. With its regional partners (ACENET, Calcul Québec, Compute Ontario and WestGrid), each responsible for one large region of Canada, Compute Canada is leading the broad transformation of the country’s advanced research computing platform, replacing many older compute and storage systems with new facilities.

Refreshing the national high-performance computing platform

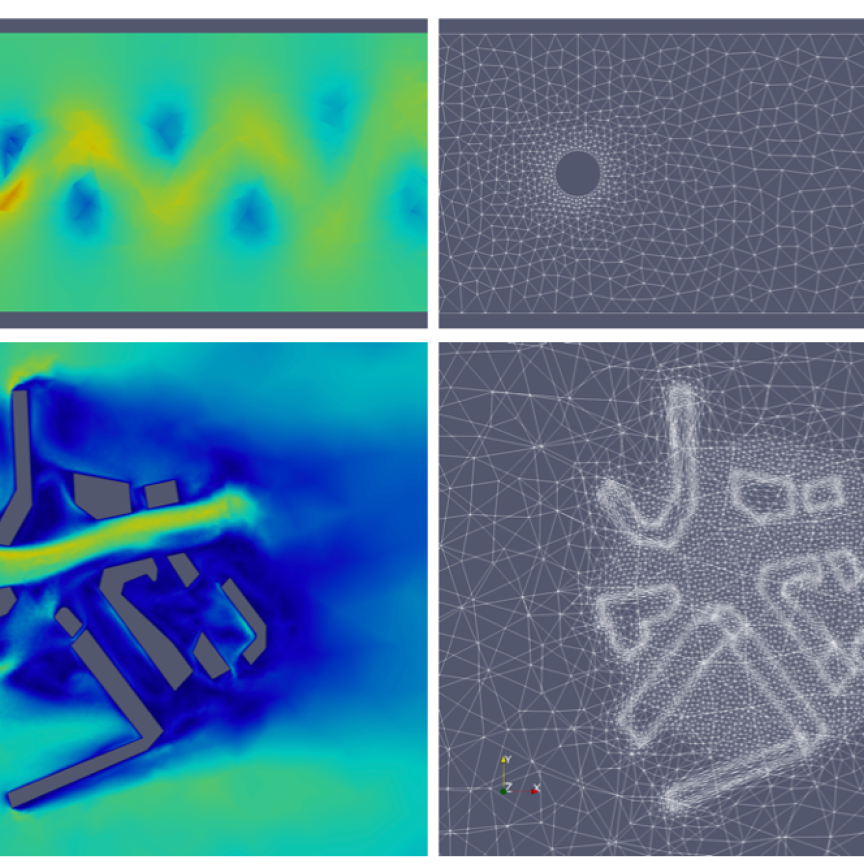

As my colleague Dugan O’Neil, Compute Canada’s chief science officer, says, on the pure compute side everyone is challenged by the scale that’s needed. In his view, we need to provide bigger and bigger systems: we want our scientists doing atmospheric modelling, or modelling of stars or the ocean, all of which require enormous compute power, to be competitive with others around the world doing similar work. Atmospheric simulations used to be performed on a 3D grid with cells 500 km by 500 km covering the surface of the earth; now, simulations might use cells 5 km by 5 km. A rule of thumb is that doubling the resolution increases the computing need roughly tenfold.
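The rule of thumb compounds quickly. Going from 500 km to 5 km cells is a 100-fold refinement, or about 6.6 successive doublings, so a factor of ten per doubling implies roughly 4.4 million times more computing. The sketch below works through that arithmetic, assuming the tenfold factor applies uniformly to every doubling and that "resolution" means horizontal cell size; the function name `compute_growth` is purely illustrative, and the result is an order-of-magnitude estimate, not a measured figure. (A common rationale for the rough factor of ten is that halving the cell size quadruples the number of horizontal cells and, for numerical stability, also roughly halves the usable time step; that reasoning is offered here only as background.)

```python
import math


def compute_growth(coarse_km, fine_km, factor_per_doubling=10.0):
    """Estimate how much more computing is needed when the grid cell size
    shrinks from coarse_km to fine_km, assuming (rule of thumb) that each
    doubling of resolution multiplies the computing need by
    factor_per_doubling."""
    refinement = coarse_km / fine_km      # e.g. 500 km / 5 km = 100x finer
    doublings = math.log2(refinement)     # number of successive halvings of cell size
    return factor_per_doubling ** doublings


# Illustrative case from the text: 500 km cells refined to 5 km cells.
growth = compute_growth(500, 5)
print(f"Estimated increase in computing need: {growth:,.0f}x")
```

Expressing the growth in terms of doublings keeps the rule of thumb as the single tunable assumption: changing `factor_per_doubling` to 8 or 16 shows how sensitive the estimate is to that choice.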

Compute Canada is responding to these demands by leading the largest refresh of the national ARC platform in its history, dedicating roughly $75 million (Cdn) to the acquisition of three new general-purpose systems that will become operational in 2016, and a fourth, highly parallel system, to be commissioned in 2017. These new systems will be larger and more capable than the older systems they replace, and will provide operational cost savings and economies of scale. These systems are expected to increase Compute Canada’s computational capacity by more than 12 petaflops. They will be installed in four of the most sophisticated, purpose-built university data centres in Canada, and will support a range of national services that can be accessed by any Canadian researcher from any institution in any discipline, just by registering as a user with Compute Canada.

A commitment to on-campus support

Despite the concentration of new investment in just a few locations, local support will remain a hallmark of Canada’s advanced research computing services at all of Compute Canada’s member institutions. Consolidation of systems yields larger and more capable systems, while distributed support yields better capabilities for researchers to do their work on their own campuses.

Compute Canada combines the expertise of more than 200 technical experts employed by member institutions across Canada. By pooling their collective expertise, Compute Canada is moving towards a model where the most capable personnel from across all its member institutions can work together as national teams. This is necessary to run the consolidated systems effectively because they will have a broader mandate and users will have higher service expectations.

Creating a seamless national storage infrastructure

As Dugan O’Neil points out: ‘Many organisations around the world are facing similar challenges right now. The growing volume of data that we manage is a challenge. One aspect of this challenge is to describe and manage that data properly. Researchers not only have to be able to find the data they have stored, but they have to describe it well enough so that other researchers can find and use that data. We see our colleagues in the USA, Australia and Europe working on similar issues, and we need to work together on interoperable solutions.’

Compute Canada is responding to this challenge by creating a national storage infrastructure, with the goal of deploying almost 60 petabytes of new storage capacity. Seamless access to data resources will facilitate more complex and robust workflows, while making it easier to share data without unnecessary duplication. This new storage infrastructure will have the capacity and features needed for a diverse user base. Features include data isolation for multiple tenants, object storage access mechanisms, geo-replication and backup of datasets, and hierarchical storage management, which will make capacity appear effectively unlimited to most users.
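Hierarchical storage management is what lets a finite fast tier appear unlimited: rarely used files migrate to a cheaper, slower tier while their paths stay visible, and are recalled on access. The sketch below illustrates that general idea only. It assumes a simple two-tier system (disk and tape) with hypothetical high- and low-water thresholds and a least-recently-accessed migration policy; the names `FileEntry`, `migrate` and `read` are illustrative and do not describe Compute Canada's actual storage software.

```python
from dataclasses import dataclass


@dataclass
class FileEntry:
    """Hypothetical file record; the path stays in the namespace on either tier."""
    path: str
    size_bytes: int
    days_since_access: int
    tier: str = "disk"  # "disk" (fast, limited) or "tape" (slow, large)


def migrate(files, disk_capacity_bytes, high_water=0.9, low_water=0.7):
    """Move the least recently accessed files from disk to tape.

    Migration starts only when disk usage exceeds high_water * capacity and
    stops once usage falls to low_water * capacity or below.
    Returns the paths of the migrated files.
    """
    used = sum(f.size_bytes for f in files if f.tier == "disk")
    if used <= high_water * disk_capacity_bytes:
        return []
    migrated = []
    # Oldest-accessed files are the first candidates for migration.
    candidates = sorted(
        (f for f in files if f.tier == "disk"),
        key=lambda f: f.days_since_access,
        reverse=True,
    )
    for f in candidates:
        if used <= low_water * disk_capacity_bytes:
            break
        f.tier = "tape"  # only the data moves; the path is unchanged
        used -= f.size_bytes
        migrated.append(f.path)
    return migrated


def read(f):
    """Reading a migrated file transparently recalls it to the disk tier."""
    if f.tier == "tape":
        f.tier = "disk"  # recall: slower first access, same path
    return f.path
```

For example, with three files of 40, 30 and 25 units on a 100-unit disk, usage (95) exceeds the 90-unit high-water mark, so `migrate` moves only the least recently accessed file to tape, bringing usage to 55, below the 70-unit low-water mark. The gap between the two thresholds keeps the system from migrating files back and forth on every small change in usage.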

New services, new approaches

Compute Canada will also grow its cloud deployment and integrate cloud services, including storage and job workflows, with its larger clusters. As a result, cloud-style computation will run side by side with large parallel batch-style computation, often on the same systems. Compute Canada is making technology choices to make it easier for researchers to have the best mix of systems and services for their work. This includes widespread use of the OpenStack cloud computing platform, object storage, cross-cluster scheduling of jobs, and flexible mechanisms for provisioning and managing systems and services.

Cloud services will provide the customised virtual machines needed by some researchers. This is key for disciplines and research teams with highly customised environments that might otherwise be difficult to deploy to shared resources. Cloud services will also host high-throughput workloads, long-term web and database services for gateways and portals, and data or services requiring isolation, such as personal health data.

One of Compute Canada’s earliest general-purpose acquisitions in 2016 will be for a roughly 6,000-core cloud system to be deployed at the University of Victoria. Additional cloud-based resources are planned in subsequent years and Compute Canada is working with several of its member institutions, which are planning their own ‘cloud’ investments, to align architectures so workloads can migrate seamlessly to federated systems when needed. Compute Canada is at the leading edge of integrating cloud computing in its mix of ARC resources and making it easier for researcher workflows to span a mix of cloud, cluster, and large parallel systems.

The road ahead

The first step into the future will involve Compute Canada beginning its latest national consolidation, replacing most of its oldest systems with four mega-systems that offer much more functionality. Greg Newby, Compute Canada’s chief technology officer, sees this as very good news, and enviable internationally: ‘We’re going from being behind to modernising, with sufficient technical and financial resources. It’s a big consolidation. We’re going to buy bigger systems and place them at fewer places. It’s a sign that we’re working together nationally as opposed to everyone being out for themselves. Larger systems in more centralised locations is the trend.’

In my own view, Compute Canada’s mandate distinguishes it. We have the ability to plan on a national scale. The scope of our operations and our formal accountability to a broad range of institutional stakeholders give us an important role in Canada. Not only do we provide responsive, cost-effective services to our users, but we can also act as an advocate for the investments that community needs. Canada’s government and our funding agencies understand that we speak for the community and can deliver what we promise.

Accelerating access to genomic information

McGill University’s Dr Guillaume Bourque and Université de Sherbrooke’s Dr Pierre-Étienne Jacques and their team of researchers have created GenAP, a tool that enables Canadian researchers to access and use genomic data more easily, to advance knowledge of human health and disease.

Data from human genomes is a rich resource for researchers in many fields of human health, but the size of the datasets can be daunting. Estimates suggest that fast machines for human-genome sequencing will be capable of producing 85 petabytes of data this year worldwide.

GenAP solves that problem. Through a simple point-and-click web interface, the software platform enables researchers to access genome datasets and send them for processing at Compute Canada’s high-performance computing facilities across Canada. Simply put, the software lets more researchers conduct more analysis, which accelerates new understanding of diseases and their potential treatments.

‘Providing a wide range of researchers and clinicians with access to this data creates a new playing field and strengthens the team that is investigating the genomic factors impacting disease,’ Dr Bourque said at the time of GenAP’s release. ‘GenAP has the potential to accelerate the interpretation of genetic information into knowledge that can be applied in diagnosing, treating and preventing disease.’

A global tool to measure climate change

Climate change is affecting countries all over the world, and understanding atmospheric composition and its effects on air quality and climate is essential to mitigating the problem.

Professor Randall Martin is the director of the Atmospheric Composition Analysis Group at Dalhousie University, and is a research associate at the Harvard-Smithsonian Center for Astrophysics. His research focuses on characterising atmospheric composition to inform effective policies surrounding major environmental and public health challenges, ranging from air quality to climate change. He leads a research group at the interface of satellite remote sensing and global modelling, with applications that include population exposure for health studies, top-down constraints on emissions and analysis of processes that affect atmospheric composition. This group’s work has been honoured as Paper of the Year by the leading journal Environmental Health Perspectives.

‘Compute Canada resources are critical for our work,’ Dr Martin says. ‘Our research requires intense calculations and vast data storage to conduct satellite retrievals of atmospheric composition and to simulate the multitude of processes affecting atmospheric composition, both of which are needed to inform policy development for air quality and climate change.’

Beyond the Milky Way

University of Victoria’s Christopher Pritchet heads up the Canadian Advanced Network for Astronomical Research (CANFAR), an operational research portal that facilitates the delivery, processing, storage, analysis, and distribution of large astronomical datasets. An innovative but challenging new feature of the research portal is the operation of services that channel the deluge of telescope data through Canadian networks to the computational grid and data grid infrastructure.

Dr Pritchet’s portal is used by many Canadian astronomy projects employing data generated by peer-reviewed allocations of observation time on three telescopes in which Canada is a partner (the Canada-France-Hawaii Telescope, the James Clerk Maxwell Telescope and the Herschel Space Observatory), as well as data from other instruments, such as the Hubble Space Telescope.