With a 425 per cent increase in the number of research projects in the past 10 years, the staff running the HPC facility at the University of Southampton chose to simplify the node architecture to better serve a growing community of both new and experienced HPC users.

Researchers from across the University of Southampton can now benefit from a new high performance computing (HPC) machine named Iridis 5. The new 1,300 Teraflop system was designed, integrated and configured by high performance compute, storage and data analytics integrator, OCF, and will support research demanding traditional HPC as well as projects requiring large-scale deep storage, big data analytics, web platforms for bioinformatics, and AI services.

‘Iridis 4 was based on Sandy Bridge and the current one we have got now is based on Skylake so you can see we have jumped four generations of development. Four years is a long time in HPC performance,’ stated Oz Parchment, director of i-solutions at the University of Southampton.

‘We are quite packed in terms of our users so we’re running pretty much constantly above 90 per cent utilisation. For us having a bigger system is about improving the throughput for our community,’ Parchment added.

The new system, Iridis 5 – four times more powerful than the University’s previous HPC system – comprises more than 20,000 Intel Skylake cores on next-generation Lenovo ThinkSystem SD530 servers – the first UK installation of the hardware. In addition to the CPUs, it uses 10 Gigabyte servers containing 40 NVIDIA GTX 1080 Ti GPUs for projects that require high single-precision performance, and OCF has committed to the delivery of 20 Volta GPUs when they become available.

OCF’s xCAT-based software is used to manage the main HPC resources, with Bright Computing’s Advanced Linux Cluster Management software providing the research cloud and data analytics portions of the system.

‘In this case, there are really two main drivers for the new system. One is just the general growth of computer modelling in science and engineering. Our business schools, economics department, geography and psychology users are all experiencing growth in the use of computational tools and computational modelling,’ said Parchment.

‘The complexity of these models means that the only way to solve them is on large-scale supercomputers and so we not only have the traditional users, engineers, physicists, chemists but now we have a new generation of researchers from biology and these other areas that are looking at using large-scale systems,’ added Parchment.

Syma Khalid, professor of computational biophysics at the University of Southampton, stated: ‘Our research focuses on understanding how biological membranes function – we use HPC to develop models to predict how membranes protect bacteria. The new insights we gain from our HPC studies have the potential to inform the development of novel antibiotics. We’ve had early access to Iridis 5 and it’s substantially bigger and faster than its previous iteration – it’s well ahead of any other in use at any university across the UK for the types of calculations we’re doing.’

Managing diversity

With this increasingly broad and diverse portfolio of users, Parchment and his colleagues must not only provide computing services but also try to teach users about the best way to access and derive value from the HPC system.

‘Even with the traditional usage model we get about 30 new projects starting every month and there will be a small proportion of experienced users and a larger proportion of undergraduates transitioning to post-graduate studies and they might never have touched a supercomputer before,’ said Parchment.

‘In some ways, although the field might be traditional and might have a very long history with HPC, the people that turn up don’t necessarily have that. We have to do some quite basic introductions to Linux, cluster computing or supercomputing. These are mainly online training courses that we push out to those people and sometimes face to face interactions if their challenges are particularly complicated,’ added Parchment.

‘We run the whole gamut of users from novice to very experienced users that have quite complex challenges and problems. Being able to service that kind of diversity is incredibly difficult,’ Parchment concluded.

Simplifying the supercomputer

To help these new and inexperienced users access the system, the node architecture has been simplified so that every node has the same memory and core count. The previous supercomputer had been configured with several partitions of different hardware and memory.

The aim is to provide a simpler system for users to access, as there have been issues with people placing jobs on hardware that was sub-optimal for their project.

‘It is difficult to provide an environment that engages not just at the novice level but also at the advanced user level. You have got to ensure that on one hand you try and provide a simple environment, that normally means you are restricting what people can do, whereas an advanced user will require a much more customisable environment. Providing the two of them is an issue of ongoing debate in the team,’ stated Parchment.

One of the things that the team has worked on with the introduction of Iridis 5 is adding value for the user community rather than just providing a supercomputer. This means thinking about the entire workflow and not just the computational elements. ‘An HPC system is a bit like a scientific instrument in that you run your experiment on the instrument and you get data. And that is the end of the HPC component in some ways – certainly, this was the case with our previous perspective,’ explained Parchment.

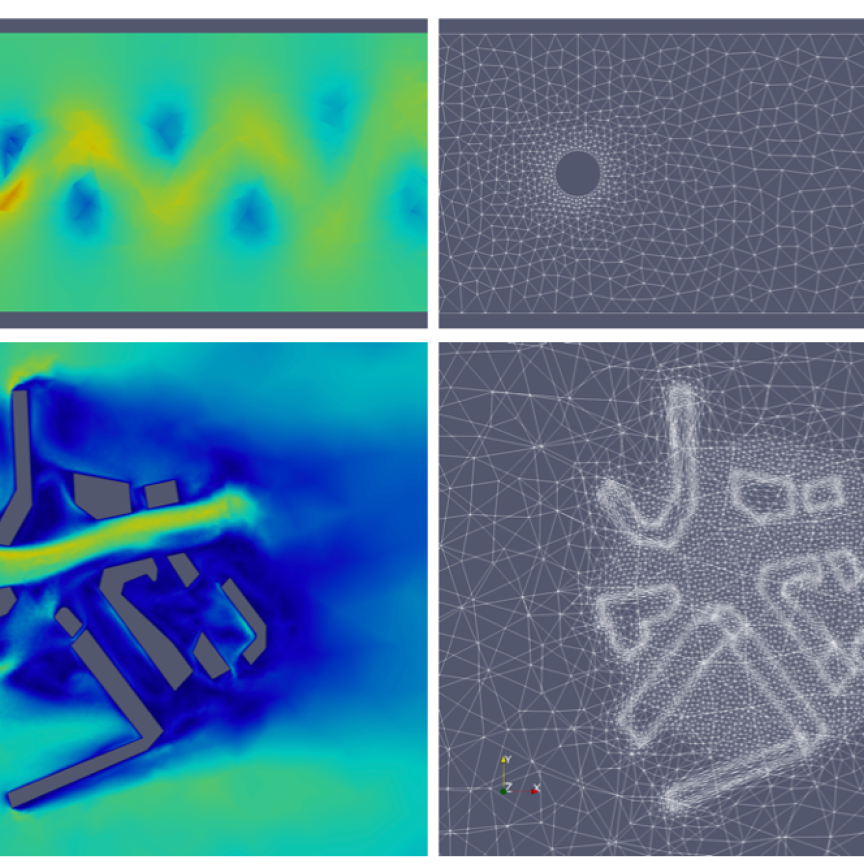

‘Visualisation has now been put into the system, data analytics is a component, cloud is a component so we are thinking a little bit more about the workflow that people are using. It is not just about the scientific instrument generating the data but how can we help the researcher to gain insight into the system,’ added Parchment.

‘Clearly what we do not want is the researcher trying to move terabytes of data from that instrument to their desktop so they can analyse it.’

In addition to removing partitions, there have also been some surreptitious improvements that have allowed the Southampton facility to better serve a more varied user community as they have jumped from an old to a new system bridging four generations of Intel’s CPU microarchitecture.

Each Iridis 5 node has 192 GB of memory, which Parchment noted was ‘quite a lot’ for the average user. While this is split among 40 processor cores, it is now getting to a stage where applications that do not scale particularly well use only two to three nodes, or 80 to 120 cores.
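As a rough illustration of what a uniform node means for a user sizing a job, the short sketch below works through the arithmetic using only the two figures quoted above (192 GB and 40 cores per node). The function names are hypothetical and are not part of any Iridis tooling; the sketch also assumes memory is shared evenly across cores, which real applications may not do.

```python
# Figures quoted in the article for an Iridis 5 compute node.
MEMORY_PER_NODE_GB = 192
CORES_PER_NODE = 40


def memory_per_core_gb(memory_gb=MEMORY_PER_NODE_GB, cores=CORES_PER_NODE):
    """Average memory per core, assuming an even split (an assumption)."""
    return memory_gb / cores


def cores_for_nodes(nodes, cores_per_node=CORES_PER_NODE):
    """Total cores available to a job that spans the given number of nodes."""
    return nodes * cores_per_node


if __name__ == "__main__":
    print(f"Memory per core: {memory_per_core_gb():.1f} GB")
    for nodes in (2, 3):
        print(f"{nodes} nodes: {cores_for_nodes(nodes)} cores")
```

Because every node is identical, the same two constants describe the whole machine, so a user does not need to look up per-partition values before deciding how many nodes to request.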

Ultimately applications will decide the need for various hardware partitions and some HPC centres are focused specifically on testing new architectures. However, the decision to standardise the node architecture for a ‘one size fits all’ approach could be useful to other centres – such as university clusters with a varied user community.

‘What we have been able to do with the march of technology is to ride that wave a little bit. Memory per node is not much of an issue because it is actually quite large now by default. You can cope reasonably well with quite a wide spectrum of codes without having to do unnatural acts around partitioning up your system for specific application spaces which makes it complicated for everybody,’ said Parchment.

The team has tried to keep the system as simple as possible to give the user community a much clearer understanding of what they have got to work with. ‘That determinism can be quite useful for a researcher to work with without having to make too many decisions on what to use,’ added Parchment.

‘We really are trying to get to the point where we are adding value to what the researcher does rather than just providing a facility and saying “here you go” and we will come back to you in three or four years’ time with a new one. We want to make our users more productive,’ Parchment concluded.